This year’s ICLR featured a few firsts: the first ICLR in Asia, the first top-3 ML conference (NeurIPS/ICML/ICLR) in Singapore, and the first ICLR with over 10,000 in-person registrations.

Alongside huge attendee growth, there was an increase in paper submissions of around 60% over 2024. Organisers also doubled the number of workshops — from 20 workshops in 2024, all held on one day, to 40 workshops held across two days this year.

ICLR 2025 map

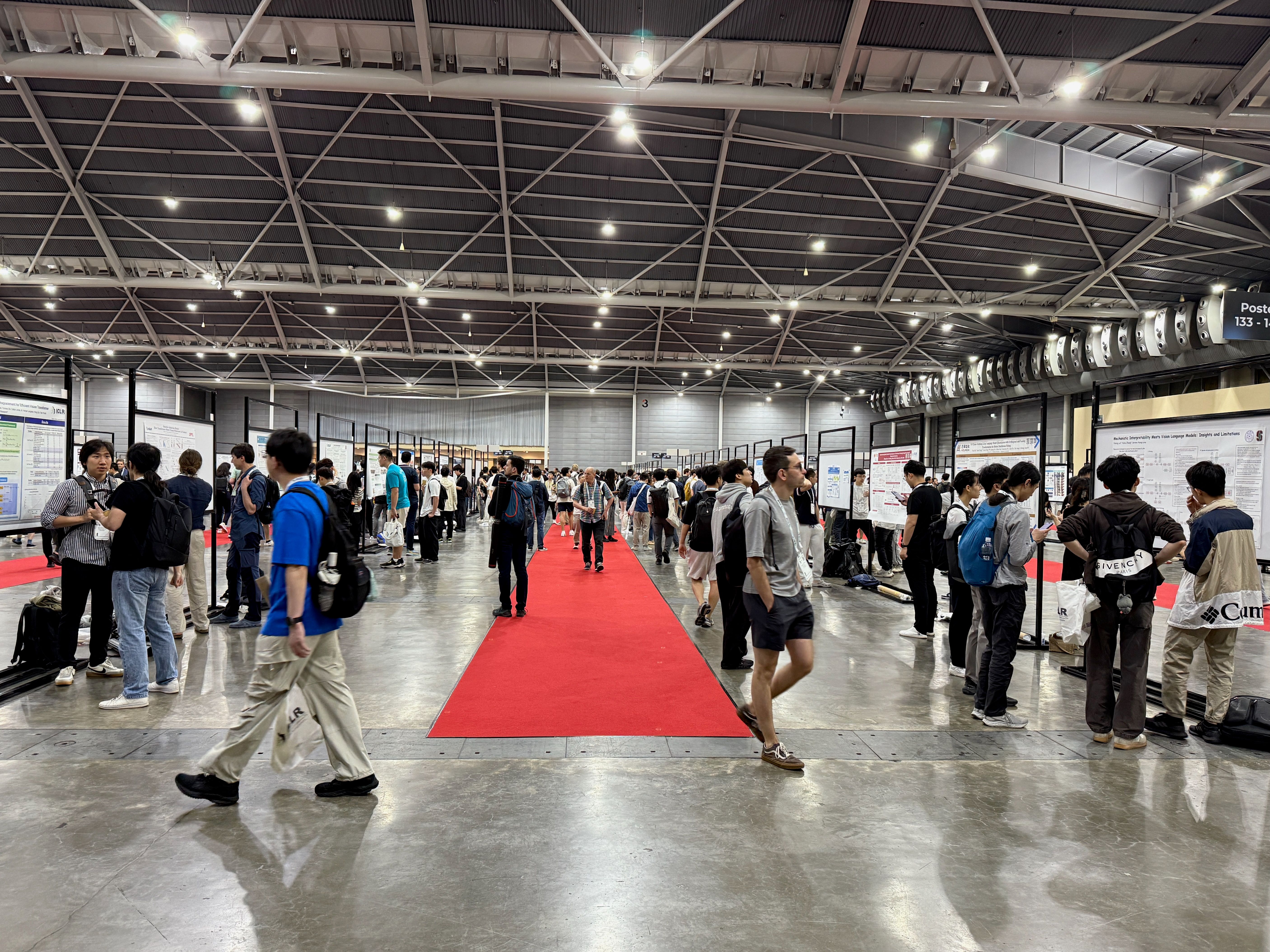

There was an air of excitement throughout the conference. Talks were well-attended, and poster sessions were buzzing with people asking questions and exchanging ideas.

A few highlights to dive into:

- Insightful talks from the winners and runners-up of the Test of Time Awards, with a mix of nostalgia, optimism, and concern.

- Danqi Chen’s talk on the role of academia in training language models.

- Excitement and realism at the first ICLR workshop on Robot Learning.

There were numerous parties throughout the week, sponsored by quant trading firms, VCs, AI research labs, and big tech companies (many of whom were also sponsors and had booths in the exhibit hall).

See you next year!

Next year’s ICLR is scheduled to take place in Brazil, with the host city yet to be announced.

The busiest workshops on the second day (based on conference app stats) were World Models: Understanding, Modelling and Scaling; Reasoning and Planning for Large Language Models; and I Can’t Believe It’s Not Better: Challenges in Applied Deep Learning.

Tim Rocktäschel (invited talk, Saturday)

The World Models workshop was packed, often with standing room only. In one talk, Tim Rocktäschel and Jack Parker-Holder built on Rocktäschel’s keynote earlier in the week about Google DeepMind’s Genie and Genie 2.

Jürgen Schmidhuber made a surprise appearance at the afternoon panel. The group discussed the relationship between world models and agents, representations, interestingness, open-endedness, and compression, as well as the importance of real-world interaction for building relevant world models.

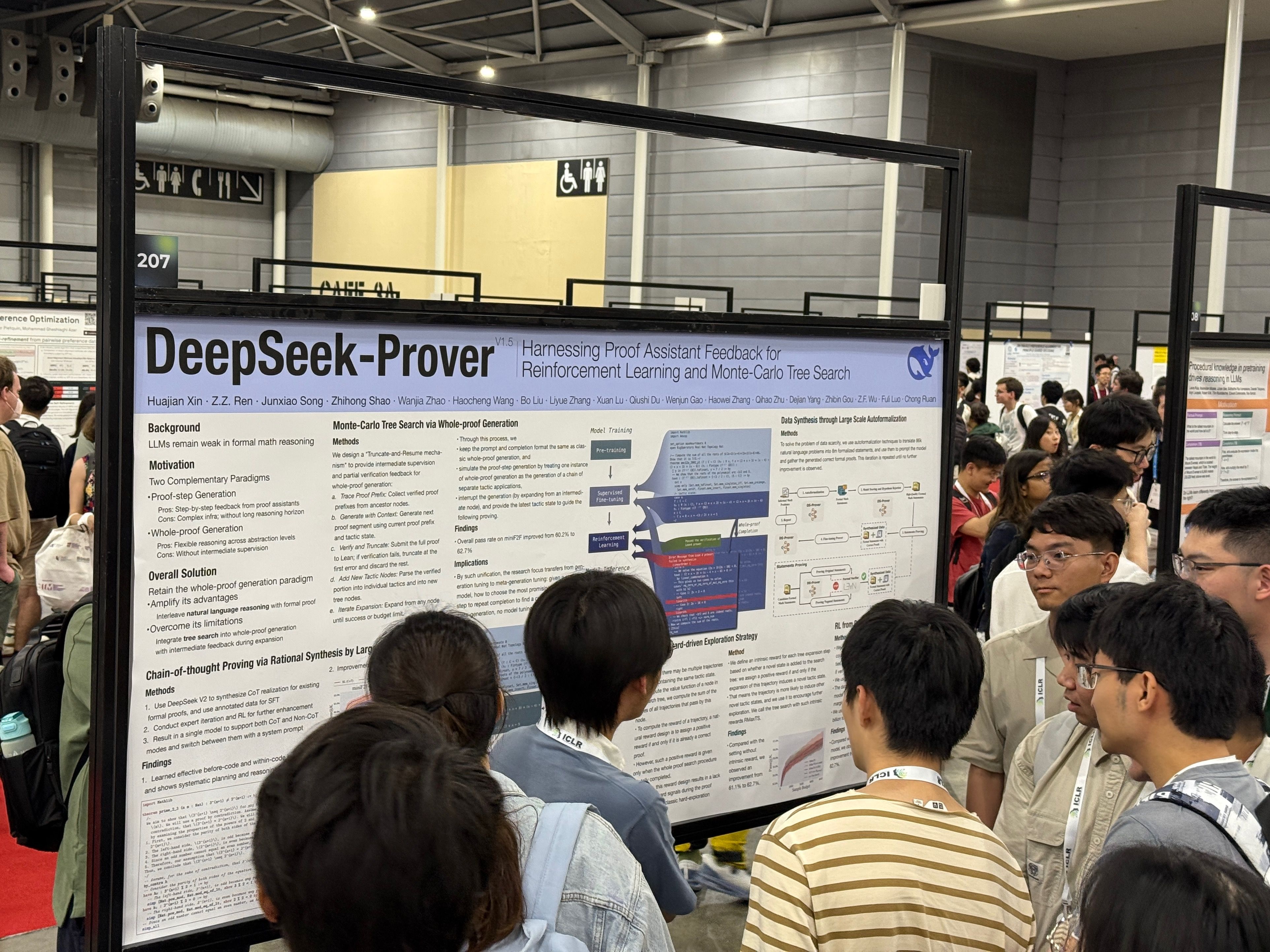

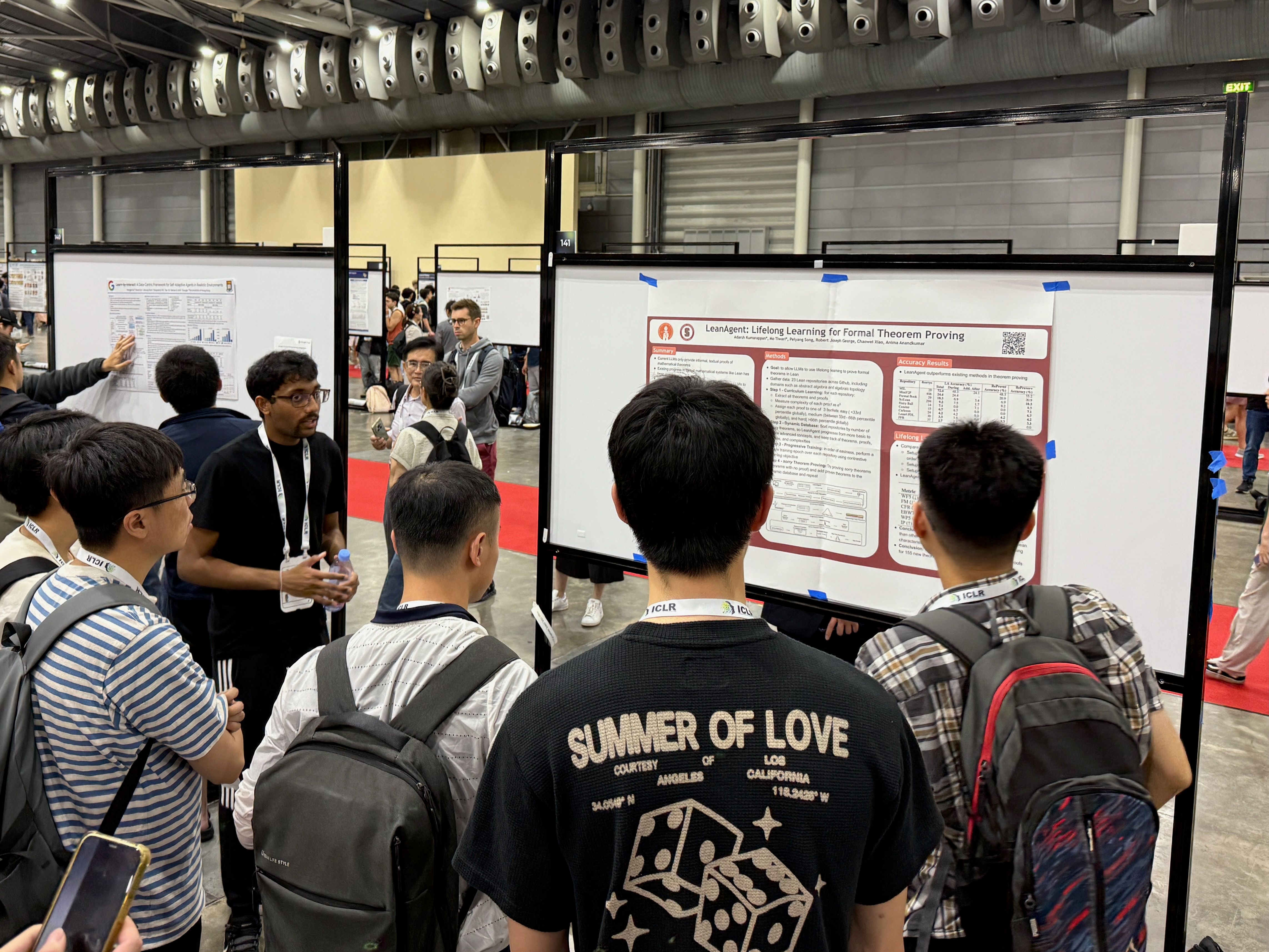

A few photos from the poster sessions throughout the week.

Hall 3 posters

Huajian Xin presents DeepSeek-Prover

Adarsh Kumarappan presents LeanAgent

This year, for the first time at ICLR after several previous iterations at NeurIPS, there was a Robot Learning workshop. Based on the ICLR conference app, over 700 people added the workshop to their agenda for today (only Agentic AI for Science and Sparsity in LLMs were more popular among Sunday’s 20 workshops), and the organisers estimated a peak attendance of around 300 in the room on the day.

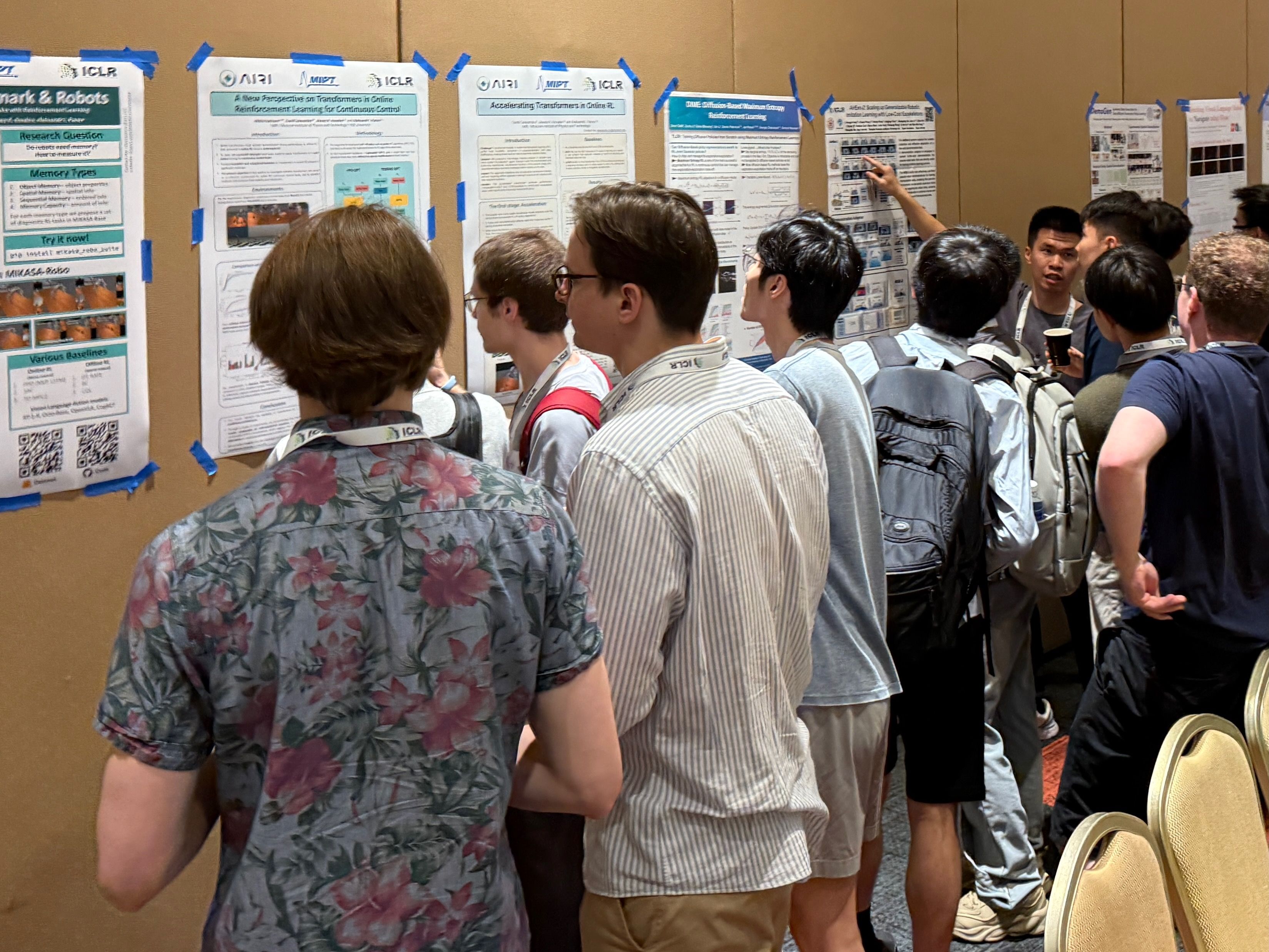

Robot Learning Workshop poster session

The best paper award for this workshop went to Instant Policy: In-Context Imitation Learning via Graph Diffusion by Vitalis Vosylius and Edward Johns. Vosylius’ talk demonstrated the approach’s generalisation, robustness, and learning efficiency. It also showed that the method can replicate demonstrations performed by a different embodiment, such as a robot arm copying the behaviour of a human arm.

The runner-up award went to Max Sobol Mark et al.’s Policy-Agnostic RL paper. There were many other interesting papers at this workshop, and the poster sessions were packed. Several of the poster presenters also had the opportunity to give a 5-minute oral presentation of their work.

Invited speaker Chelsea Finn presenting 'Data-Driven Pre-Training and Post-Training for Robot Foundation Models'

The workshop featured seven invited talks on topics including home robots, superhuman-level quadcopter drone control, robot foundation models, and 1v1 humanoid robot soccer, as well as a panel discussion.

There were several recurring themes throughout the talks and panels. Notably, some areas of agreement among many of the presenters:

- Practical, useful, general multitask robotics remains a very hard problem. Impressive-looking cherry-picked demo videos can give people outside the field an inaccurate and overly optimistic view of progress.

- Better evaluation standards are needed. In other areas of ML, new methods can be benchmarked on standard datasets with relative ease; in robotics, the heterogeneity of embodiments, sensor stacks, abstraction levels, task specifications and world representations complicates evaluation and makes results hard to compare.

- Learning efficiency remains a hurdle to progress. There is no “internet of robot data” that can be pre-trained on, and real-world data collection is expensive. Using the right abstraction appears to be crucial: in both the quadcopter and robot soccer talks, the learning process became vastly more efficient when operating over higher-level abstractions (e.g. depth-map images or ground-truth state data) as opposed to raw camera pixel inputs.

- There are multiple promising approaches to robot learning — including imitation learning and reinforcement learning — and it’s likely that successful future systems will use a combination of these methods.

Panel discussion: Sandy Huang, Chaoyi Li, Niresh Dravin, Animesh Garg, and Edward Johns

There were also areas where opinions among speakers differed, or where there was more uncertainty expressed:

- What is the ‘best’ representation for robot learning in the real world? Some of the presented work used point clouds, some used bounding boxes or key points on image data, and some used latent representations learned from raw sensor input. This is in contrast to the progress in language modelling, where all current mainstream approaches learn latent representations over sequences of tokens.

- What is the relative importance of research in simulation versus research using real-world embodied robots? Simulators have advanced significantly in recent years, but there remains a ‘sim-to-real’ gap, and testing robots in the real world is the only reliable way to see how big that gap is. On the other hand, real-world research is more expensive and labour-intensive, and there are many problems still to be solved in simulation environments.

- Why humanoid robots? On the one hand, humanoids are harder to work with than wheeled robots (as one speaker said, “once you introduce legs into the picture, everything becomes much more complicated”). On the other hand, the humanoid form factor could be valuable as a common platform for standardised robotics research.

Sponsor collab: a Booster Robotics humanoid in the FrodoBots driving seat

This was a stimulating workshop with many interesting talks and posters. For more information on the work presented here, and on past and future iterations of this workshop, see the robot learning workshop website.

Some photos from throughout the week.

Coffee break (hall 2)

Attendees had the option to pre-order bento boxes for lunch.

Bento box lunches

This year, ICLR was colocated with PetExpo. This meant wading through corridors with lots of cute dogs on the way to talks in the morning.

ICLR 2025, colocated with PetExpo

A few photos from the exhibit hall, where over 50 sponsors had booths.

Exhibit hall sponsor map

Top sponsors were mostly big tech or trading firms, but other kinds of companies were represented too, notably several data/annotation companies.

A few of this year's quant/trading firm sponsors

Like at NeurIPS last year, there were several robotics-related sponsors.

Unitree and Booster robots moved around the exhibit hall

Tesollo's robot hand

Danqi Chen’s invited talk tackled a question that’s been top of mind for the academic ML community lately: in an era where frontier language model research makes use of enormous compute budgets, what is academia’s role?

Chen started by pointing out that all three of this year’s ICLR outstanding paper award winners present language model research done by academic labs, demonstrating that academia is still doing something right in this space, and highlighted some areas in which academia has important contributions to make:

- Understanding what works (and what doesn’t)

- Writing good papers (with openness and scientific rigour)

- Developing novel and compute-efficient solutions

- Contributing great open models to the broader community

Danqi Chen

More specifically, Chen identified three promising areas and highlighted some research in each:

- Working on small language models (1-10B parameters), and leveraging techniques like pruning and distillation

- Curating data for language model training, and developing an understanding of the impact of data quality on scaling laws

- Post-training open-weights models — including holistic, controlled studies on post-training research, taking into account that different base models can give different results.

Chen called out direct preference optimisation (DPO) as a “major win for academic research”, enabling computationally efficient post-training that is more accessible than the previous reinforcement learning from human feedback (RLHF) methods.
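To make the contrast with RLHF concrete: instead of training a separate reward model and then optimising the policy with reinforcement learning, DPO (Rafailov et al., 2023) trains directly on preference pairs with a classification-style loss. The sketch below is a minimal per-pair version, assuming the sequence log-probabilities have already been computed by the policy and by a frozen reference model; the function name and the default β = 0.1 are illustrative choices, not details from the talk.

```python
import math


def dpo_loss(policy_logp_chosen: float, policy_logp_rejected: float,
             ref_logp_chosen: float, ref_logp_rejected: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair (chosen vs rejected response).

    Each argument is the summed log-probability of a whole response under
    the policy being trained or under the frozen reference model.
    """
    # Implicit reward margin: how much more the policy, relative to the
    # reference model, prefers the chosen response over the rejected one.
    margin = ((policy_logp_chosen - ref_logp_chosen)
              - (policy_logp_rejected - ref_logp_rejected))
    # -log(sigmoid(beta * margin)) == softplus(-beta * margin), computed stably
    z = -beta * margin
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


# A policy identical to the reference has zero margin, so the loss is log(2);
# shifting probability towards the chosen response lowers the loss.
print(dpo_loss(-10.0, -10.0, -10.0, -10.0))  # log(2), about 0.693
print(dpo_loss(-5.0, -15.0, -10.0, -10.0))
```

In practice the loss is averaged over a batch and back-propagated through the policy's log-probabilities only. There is no sampling from the policy and no reward model during training, which goes a long way towards explaining why DPO is seen as more accessible than RLHF.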

A recurring message throughout this talk was that there are many aspects of the language model training pipeline which are still poorly understood, and there is plenty of impactful research to be done within academic compute budgets (100-1,000 GPU hours) that can contribute to improving our shared understanding.

The ICLR test of time awards go to ICLR papers from ten years ago that have had a lasting impact on the field.

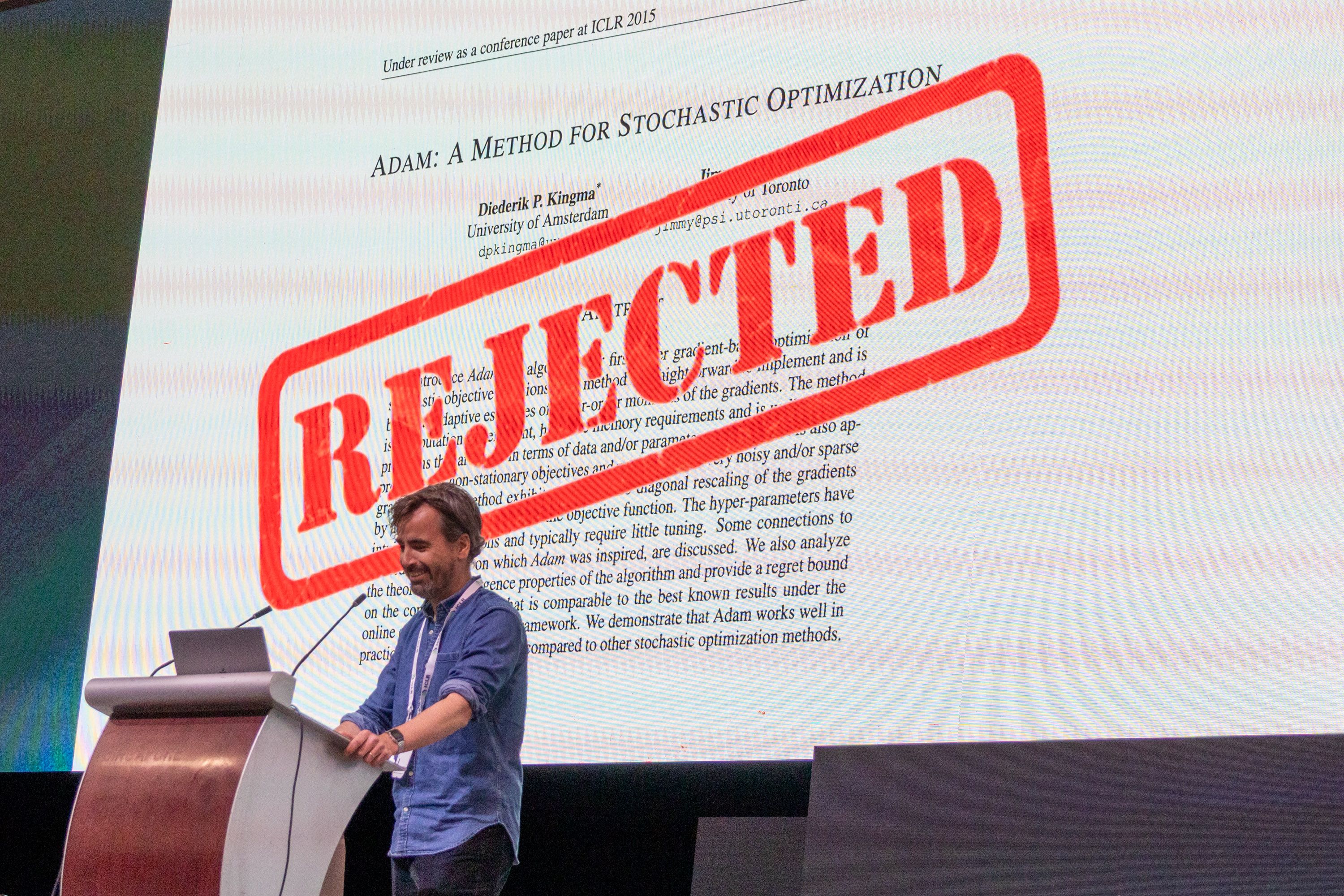

Adam

The 2025 ICLR test of time award winners were Diederik (Durk) Kingma and Jimmy Ba, for their 2015 ICLR paper Adam: A Method for Stochastic Optimization (arXiv). Both authors shared the presentation, with Kingma on stage and Ba on Zoom.

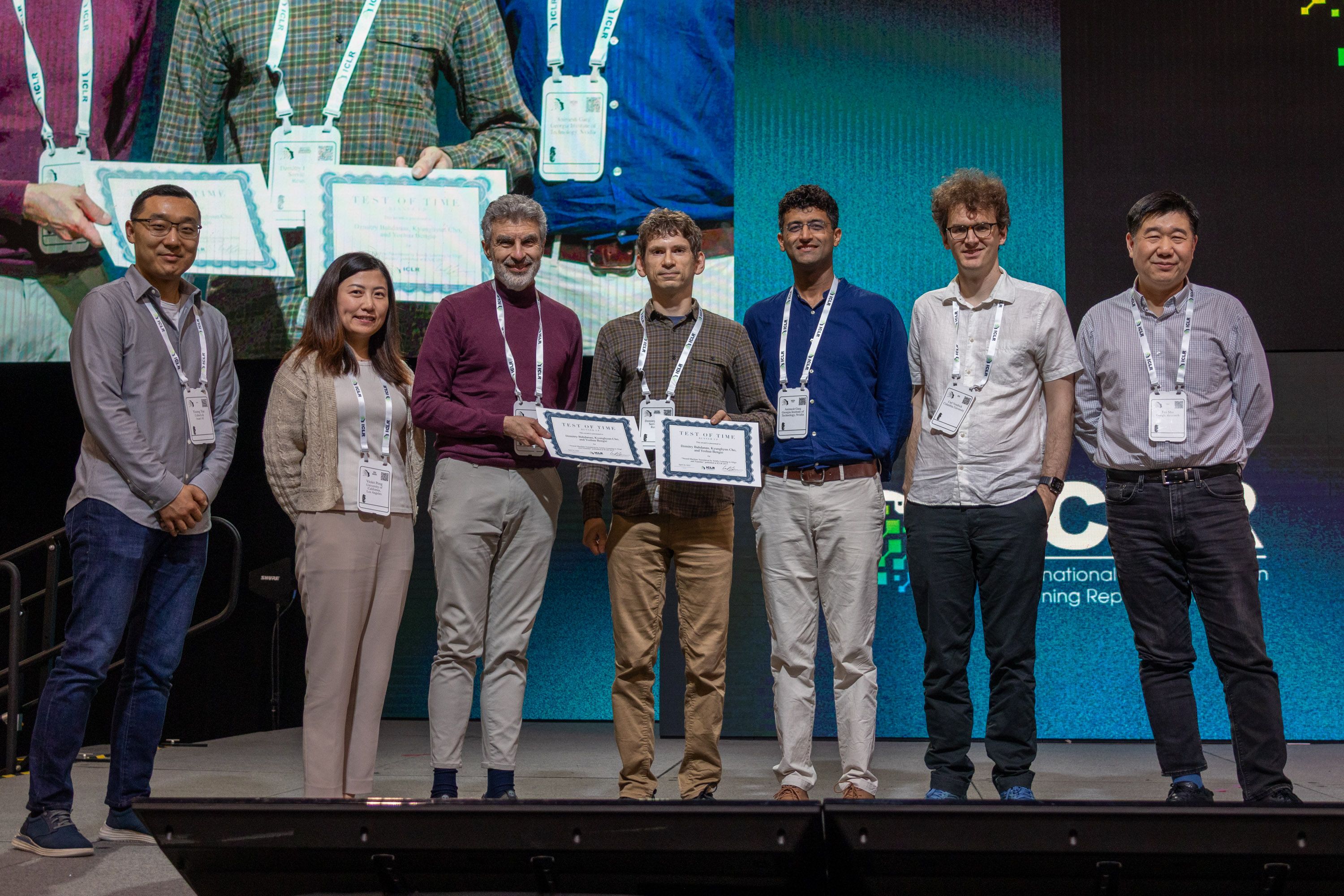

Durk Kingma accepting the test of time award from ICLR program chair Fei Sha

Building on Adagrad and RMSProp, the Adam optimisation algorithm maintains per-parameter adaptive learning rates, derived from running estimates of the first and second moments of each parameter's gradients. This typically leads to much faster training than vanilla stochastic gradient descent, and ten years on Adam remains the de facto optimiser for deep learning (alongside its AdamW variant).
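The update rule itself is short. Below is a minimal pure-Python sketch of Adam as described in the 2015 paper, using the paper's default hyperparameters (β1 = 0.9, β2 = 0.999, ε = 1e-8, learning rate 0.001). The class and method names are illustrative, and real framework implementations operate on tensors rather than Python lists.

```python
import math


class Adam:
    """Minimal Adam optimiser over a flat list of float parameters."""

    def __init__(self, n_params, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params  # running mean of each parameter's gradient
        self.v = [0.0] * n_params  # running mean of each squared gradient
        self.t = 0                 # step counter, used for bias correction

    def step(self, params, grads):
        """Return updated parameters after one Adam step."""
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            # m and v start at zero, so early estimates are biased towards zero
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            # Each parameter's step is scaled by its own gradient history
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated


# Usage: a badly scaled quadratic, f(x, y) = x^2 + 100 * y^2
params = [1.0, 1.0]
opt = Adam(n_params=2, lr=0.1)
for _ in range(500):
    x, y = params
    params = opt.step(params, [2 * x, 200 * y])
```

On the very first step the bias-corrected ratio m̂/√v̂ is ±1 for each parameter (up to ε and rounding), so both coordinates move by lr (1.0 to 0.9) even though their gradients differ by a factor of 100. This per-parameter normalisation is what makes Adam much less sensitive to gradient scale than plain SGD.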

Based on our analysis of papers in OpenReview, Adam or its AdamW variant is mentioned in over half of this year’s ICLR papers, and in almost 90% of ICLR 2025 papers that mention an optimiser, with vanilla stochastic gradient descent making up most of the remaining 10%.

As Ba stated during the talk: “desperation drives innovation”.

Kingma and Ba met in London during an internship at Google DeepMind in 2014. The Adam paper began life both as an overdue course requirement for Ba and as a response to Kingma’s wish for better optimisers to train the variational auto-encoders he had developed.

Despite its ubiquity today, Adam’s path to success was not straightforward.

Durk Kingma

The paper was initially rejected from the main ICLR conference track, and the authors sent a “fiery rebuttal email” to the ICLR organisers explaining that the reasons given for rejection had already been addressed in revisions made before the deadline. Eventually, it was accepted as a poster (but not granted an oral presentation).

Many enhancements to Adam have been proposed in the years since, and the authors highlighted two variants: AdamW (Loshchilov and Hutter, ICLR 2019), which decouples weight decay from the gradient-based update, and Adam-mini (Zhang et al, ICLR 2025), which reduces memory usage. The latter has a poster at this year’s ICLR. Despite these variants, the standard version of Adam remains in widespread use.
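The difference between AdamW and "Adam plus L2 regularisation" is where the decay term enters the update. The sketch below is a minimal single-parameter illustration, assuming Adam's state (m, v, t) is tracked as in the Adam paper; the function name and the `weight_decay=0.01` value are hypothetical, and the decoupled branch shrinks the weight by `lr * weight_decay` per step, the form used by PyTorch's `torch.optim.AdamW`.

```python
import math


def adam_like_step(p, g, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8,
                   weight_decay=0.0, decoupled=True):
    """One update of a single scalar parameter.

    decoupled=True  -> AdamW-style: shrink the weight directly.
    decoupled=False -> Adam + L2: fold the decay term into the gradient.
    `state` is a dict holding m, v and the step count t.
    """
    if decoupled:
        p = p * (1 - lr * weight_decay)  # decay ignores the gradient history
    else:
        g = g + weight_decay * p         # decay is rescaled by sqrt(v_hat) below
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * g
    state["v"] = beta2 * state["v"] + (1 - beta2) * g * g
    m_hat = state["m"] / (1 - beta1 ** state["t"])
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return p - lr * m_hat / (math.sqrt(v_hat) + eps)


# With no gradient signal at all, compare one step of each variant
for decoupled in (True, False):
    state = {"m": 0.0, "v": 0.0, "t": 0}
    print(decoupled, adam_like_step(1.0, 0.0, state, lr=0.1,
                                    weight_decay=0.01, decoupled=decoupled))
```

Starting from a weight of 1.0 with zero gradient, the decoupled version shrinks it gently to 0.999, while the L2 version's decay term is normalised like any other gradient and produces a full lr-sized step to about 0.9. Loshchilov and Hutter showed that for adaptive methods the two are not equivalent, and reported better generalisation with the decoupled form.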

Kingma ended the talk with some comments on the field of AI as a whole, expressing both hopes and concerns.

Neural Machine Translation

The runner-up of the test of time award was the paper Neural Machine Translation by Jointly Learning to Align and Translate (arXiv) by Dzmitry Bahdanau, Kyunghyun Cho and Yoshua Bengio. The presentation was given by Bahdanau.

Another hugely impactful paper, this work is widely credited with introducing and popularising the attention mechanism at the core of the Transformer architecture that underpins so many of today’s state-of-the-art AI models.

Co-authors Yoshua Bengio and Dzmitry Bahdanau accepting their certificates, accompanied by the ICLR 2025 program chairs and general chair. Not pictured: Kyunghyun Cho.

Bahdanau highlighted contemporaneous work along the same lines including Memory Networks (Weston et al, also at ICLR 2015) and Neural Turing Machines (Graves et al, 2014), all of which went against the grain at a time when recurrent neural networks (RNNs) were the go-to architecture for sequence processing.

While developing the paper, Bahdanau had heard rumours of a big neural-net based translation project within Google, with a much larger compute budget than Bahdanau’s lab. This Google project turned out to be the sequence-to-sequence paper which later won the NeurIPS 2024 test of time award.

Once again, desperation drove innovation, and Bahdanau looked for ways to model long-term dependencies (i.e., reliably translate long sentences) that could perform well using only the 4 GPUs in his lab.

After a few failed attempts, one key idea made a big difference: letting the model “search” for required info across a sequence. Shortly before publication, the search terminology was replaced by the phrase “a mechanism of attention”.
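The “search” idea is what is now usually called additive (or Bahdanau) attention. At each output step, the decoder scores every encoder state against its own previous state, normalises the scores with a softmax, and reads out a weighted average of the encoder states as a context vector. Below is a minimal pure-Python sketch. The toy identity weight matrices are an assumption for illustration (in the paper, W, U and v are learned jointly with the translation model), and the function names are hypothetical.

```python
import math


def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]


def softmax(scores):
    mx = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - mx) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def additive_attention(decoder_state, encoder_states, W, U, v):
    """Additive attention for one decoder step.

    Scores each encoder state h_j as v . tanh(W s + U h_j), normalises the
    scores with a softmax, and returns (weights, context vector).
    """
    Ws = matvec(W, decoder_state)
    scores = []
    for h in encoder_states:
        hidden = [math.tanh(a + b) for a, b in zip(Ws, matvec(U, h))]
        scores.append(sum(vi * hi for vi, hi in zip(v, hidden)))
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [sum(w * h[k] for w, h in zip(weights, encoder_states))
               for k in range(dim)]
    return weights, context


# Usage with toy values: one decoder state, three encoder states
s = [1.0, 0.0]
hs = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
I = [[1.0, 0.0], [0.0, 1.0]]
weights, context = additive_attention(s, hs, I, I, [1.0, 1.0])
```

The weights form a probability distribution over source positions, so the model can draw on any part of a long sentence at every step instead of relying on a single fixed-length summary vector.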

This attention mechanism was adopted in the 2017 paper Attention is All You Need, which introduced the Transformer architecture, and Bahdanau credited this paper with four great ideas:

- Do attention at all layers, not just the top

- Attend to the previous layer for all positions in parallel

- Use many attention heads

- Ditch the RNN
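Each of these ideas maps onto a few lines of code. The sketch below is a minimal pure-Python illustration rather than a faithful Transformer layer: scaled dot-product attention is computed for every position independently (so it parallelises), split across several heads, and stacked into two layers with no recurrent network anywhere. As a stated simplification, the learned query/key/value and output projections, residual connections and feed-forward sublayers are all left out, and the function names are illustrative.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V.

    Every query row is handled independently of the others, which is why real
    implementations compute all positions at once as matrix products.
    """
    d = len(Q[0])
    out = []
    for q in Q:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K])
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


def multi_head_self_attention(X, n_heads):
    """Simplified multi-head self-attention over a sequence X (rows = positions).

    Each head attends using its own slice of the feature dimension and the head
    outputs are concatenated. Simplification: the learned per-head Q/K/V
    projections and the output projection of a real Transformer are omitted.
    """
    d_model = len(X[0])
    if d_model % n_heads != 0:
        raise ValueError("d_model must be divisible by n_heads")
    d_head = d_model // n_heads
    heads = []
    for h in range(n_heads):
        X_h = [row[h * d_head:(h + 1) * d_head] for row in X]
        heads.append(attention(X_h, X_h, X_h))  # self-attention: Q, K, V all from X
    return [[x for head in heads for x in head[i]] for i in range(len(X))]


# Usage: 3 positions, 4 features, 2 heads, stacked into two layers (no RNN)
X = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.5, -0.5], [1.0, 1.0, 0.0, 0.0]]
layer1 = multi_head_self_attention(X, n_heads=2)
layer2 = multi_head_self_attention(layer1, n_heads=2)  # attends over layer1's outputs
```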

Bahdanau also ended his talk with a discussion on the field of AI as a whole. At this point, as if on cue, a loud thunderstorm above the venue punctuated proceedings with ominous rumbles.

Risks, Concerns, and Mitigations

Both Kingma and Bahdanau ended their talks by expressing concerns about the field of AI, and the impact it could have if sufficient mitigations are not taken.

While acknowledging other categories of risks, they both focused on the potentially destabilising political and economic effects of widely-deployed powerful AI systems.

Kingma called for mitigations in the form of technological countermeasures, sensible AI regulations, and a strengthening of social support systems.

Dzmitry Bahdanau

Bahdanau highlighted the importance of private, local, and cheap-to-run AI systems, and called for researchers to treat amortised local inference cost as a key consideration when developing models.

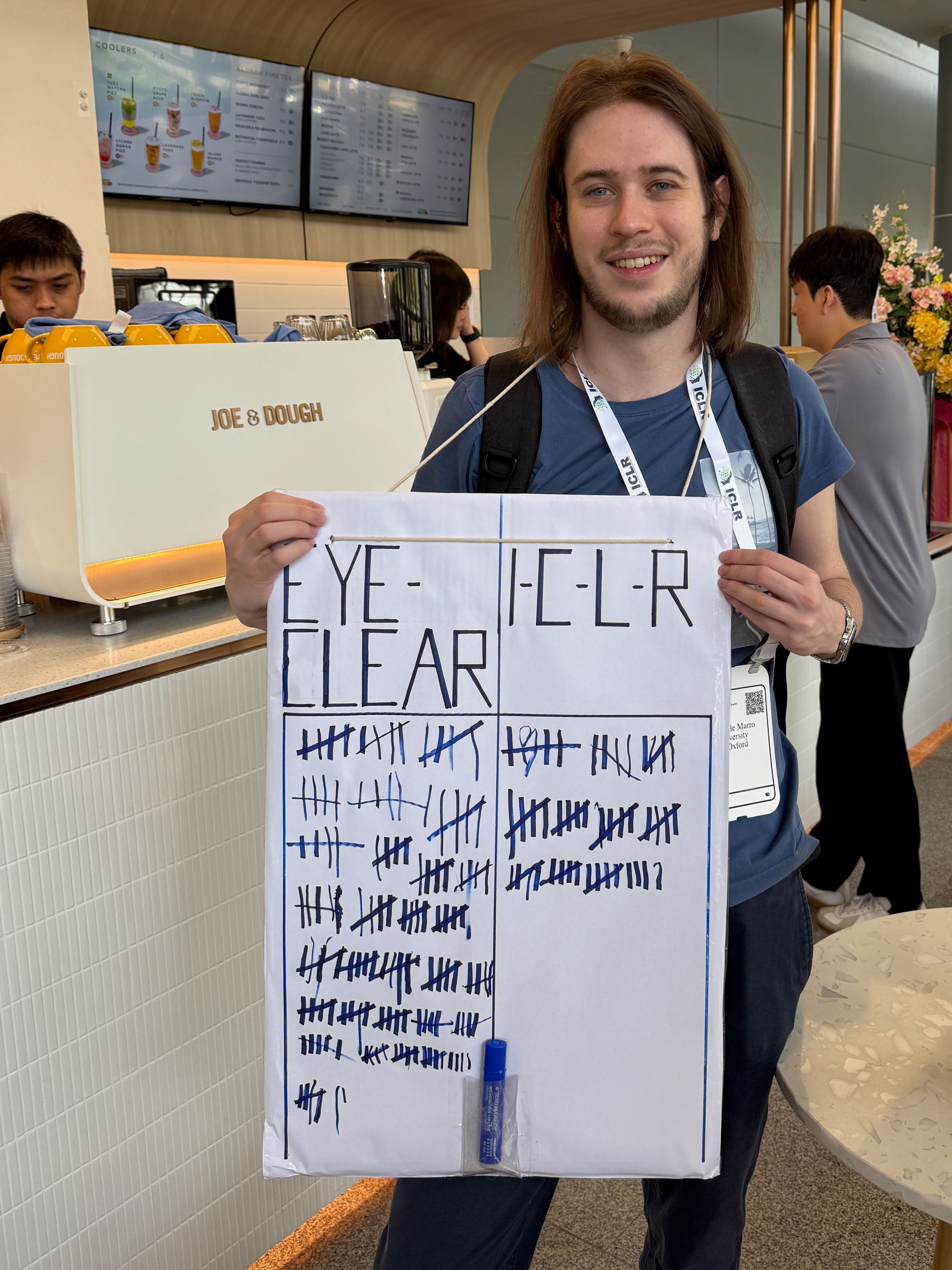

What’s the canonical way to pronounce ICLR?

Samuele Marro from the University of Oxford has been taking a data-driven approach.

Samuele Marro's data gathering project

So far, the results are largely in favour of “eye-clear” over “I-C-L-R”.

Update (29 April): at the final count, it’s 526 for “eye-clear” vs 245 for “I-C-L-R”. A victory for “eye-clear”, though almost a third of voters preferred the letter-based pronunciation.

There were three winners of the outstanding paper award this year, and three runner-up papers.

For the second time ever, ICLR is also awarding a test of time award for papers from ICLR 2015 which have had sustained impact. More on these tomorrow.

Listed below are the winners and runners-up of the outstanding paper awards.

Winners

Safety Alignment Should be Made More Than Just a Few Tokens Deep

Oral Presentation Paper

TL;DR: We identify an underlying problem (shallow safety alignment) that makes current safety alignment vulnerable, and we also propose approaches for mitigations.

Authors: Xiangyu Qi, Ashwinee Panda, Kaifeng Lyu, Xiao Ma, Subhrajit Roy, Ahmad Beirami, Prateek Mittal, Peter Henderson.

Learning Dynamics of LLM Finetuning

Oral Presentation Paper

TL;DR: The paper proposes a novel learning dynamics framework to understand LLMs’ behavior during finetuning (e.g., SFT, DPO, and other variants). Some counter-intuitive behavior can be well explained by the proposed framework.

Authors: Yi Ren, Danica J. Sutherland.

AlphaEdit: Null-Space Constrained Model Editing for Language Models

Oral Presentation Paper

TL;DR: We propose a novel model editing method named AlphaEdit to minimize the disruption to the preserved knowledge during editing.

Authors: Junfeng Fang, Houcheng Jiang, Kun Wang, Yunshan Ma, Jie Shi, Xiang Wang, Xiangnan He, Tat-Seng Chua.

Honourable mentions

Data Shapley in One Training Run

Oral Presentation Paper

TL;DR: We develop a new notion of Data Shapley that requires only one model training run.

Authors: Jiachen T. Wang, Prateek Mittal, Dawn Song, Ruoxi Jia.

SAM 2: Segment Anything in Images and Videos

Oral Presentation Paper

TL;DR: We present Segment Anything Model 2 (SAM 2), a foundation model towards solving promptable visual segmentation in images and videos.

Authors: Nikhila Ravi, Valentin Gabeur, Yuan-Ting Hu, Ronghang Hu, Chaitanya Ryali, Tengyu Ma, Haitham Khedr, Roman Rädle, Chloe Rolland, Laura Gustafson, Eric Mintun, Junting Pan, Kalyan Vasudev Alwala, Nicolas Carion, Chao-Yuan Wu, Ross Girshick, Piotr Dollar, Christoph Feichtenhofer.

Faster Cascades via Speculative Decoding

Oral Presentation Paper

TL;DR: Faster language model cascades through the use of speculative execution.

Authors: Harikrishna Narasimhan, Wittawat Jitkrittum, Ankit Singh Rawat, Seungyeon Kim, Neha Gupta, Aditya Krishna Menon, Sanjiv Kumar.

Zico Kolter: Building Safe and Robust AI Systems

Invited Talk

In this year’s first invited talk, Zico Kolter started with a look back at the work presented at ICLR 2015, ten years ago. Out of just 31 main-conference papers that year, several have had a major impact on the field, including the Adam optimiser and Neural Machine Translation papers, this year’s Test of Time Award winner and runner-up, which will be discussed in more depth later this week.

Zico Kolter

Kolter presented years of his lab’s work through four eras: optimisation, certified adversarial robustness, empirics of deep learning, and AI Safety, and highlighted two recent pieces of work in the AI Safety category: antidistillation sampling (generating text from a model in a way that makes distillation harder but outputs are still generally useful) and safety pretraining (methods for incorporating safety guardrails early on in the model training process, not just in post-training).

Kolter ended with a call to action suggesting that AI Safety should be a key area of focus for academic research today, and emphasising his expectation that work in this area will have a significant impact on the future development of the field.

Song-Chun Zhu: Framework, Prototype, Definition and Benchmark

Invited Talk

Song-Chun Zhu’s talk started from a philosophical vantage point, with a reflection on how “AGI” might be defined, and how any such definition hinges on the definition of what it means to be human.

Song-Chun Zhu presenting in Hall 1

Zhu then explored the space of cognitive agents through his three-dimensional framework, which considers the agent’s cognitive architecture (how the agent works), its potential functions (what it can do), and its value function (what it wants to do).

He also summarised some of his lab’s research, including the development of TongTong, an agent trained in a simulated physical environment, as well as the Tong Test benchmark aimed at evaluating AGI.

Staying Dry

This year’s ICLR takes place at Singapore Expo, in halls 1-4.

The whole week is due to be warm, humid, and rainy at times, so it’s helpful to have a route to the conference venue that avoids outdoor walking where possible.

For those taking the MRT, the Expo MRT station’s Exit A connects directly to a covered walkway that leads into Singapore Expo.

MRT exit A, leading to the Expo

Changi City Point Mall

The Expo MRT station is also connected to Changi City Point mall: Exit F connects to the basement level of the mall.

MRT Exit F and the basement level of Changi City Point mall

There are some useful amenities here: the electronics store Challenger is right by the MRT exit, and there’s a pharmacy — Watsons — a bit further along.

The mall can be a good route to the conference venue.

For those at the Dorsett Changi City hotel, the best route is likely to go into the mall, down to B1, and into the MRT through Exit F. Then through the walkway, and out through Exit A to the Expo.

The underground walkway connecting MRT exit A with exits D & F

For those staying at the Park Avenue Changi hotel or just looking to cross Changi South Ave 1 when it’s rainy, it looks like the best route is to take Exit D into the MRT, and come out through Exit A.

MRT Exit D

MRT exits A, D, and F all have elevators as well as escalators, and can be accessed without needing to pass through ticket gates.

Map of the Expo MRT station exits

Taxis

Uber doesn’t operate in Singapore. Alternatives are the traditional city taxis, or ride-hailing apps Gojek or Grab.

There’s a taxi stand by Apex Gallery (near Hall 1), and a second taxi stand a little further away near Hall 6.

This year’s ICLR starts on Thursday. There’s a packed conference schedule alongside plenty of social events during the 5 days of the conference.

Early registration is today 2-7pm. On Thursday registration is open from 7:30am, and on the other conference days registration will start at 8am. For those who have pre-ordered lunch, there’s a separate line to pick up lunch vouchers.

The conference venue is right by the Expo MRT station, a 15-minute ride from Singapore’s Changi airport and half an hour from downtown Singapore.

It’s likely to rain this week. For tips on staying dry on the way to the venue, see this entry.