The ICLR test of time awards go to ICLR papers from ten years ago that have had a lasting impact on the field.

Adam

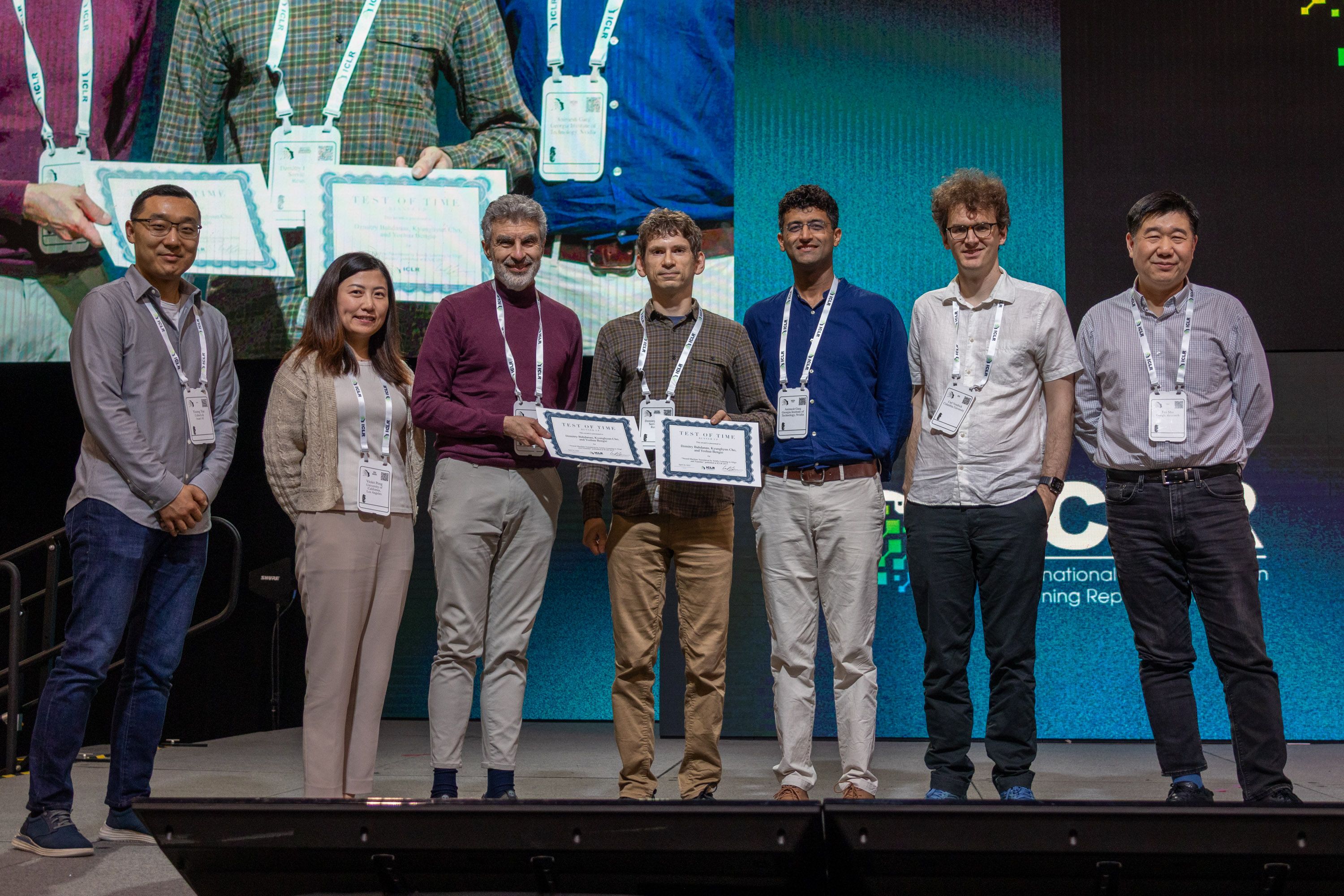

The 2025 ICLR test of time award winners were Diederik (Durk) Kingma and Jimmy Ba, for their 2015 ICLR paper Adam: A Method for Stochastic Optimization (arXiv). Both authors shared the presentation, with Kingma on stage and Ba on Zoom.

Durk Kingma accepting the test of time award from ICLR program chair Fei Sha

Building on Adagrad and RMSProp, the Adam optimisation algorithm adapts the step size of each parameter using running averages of its past gradients and squared gradients. This often leads to much faster training than vanilla stochastic gradient descent, and ten years on, Adam (alongside its AdamW variant) remains the de facto optimiser for deep learning.
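The per-parameter adaptation can be sketched in a few lines of NumPy. This is a minimal sketch of one Adam update following the rule in the original paper, with its suggested defaults (learning rate 0.001, β1 = 0.9, β2 = 0.999, ε = 1e-8). The function name `adam_step` and its signature are illustrative assumptions, not any library's API.

```python
import numpy as np


def adam_step(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update (hypothetical helper, not a library API).

    theta, grad, m, v: arrays of the same shape; t: 1-based step count.
    Returns the updated (theta, m, v).
    """
    # Running averages of the gradient (first moment) and squared gradient (second moment).
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad**2
    # Bias correction: m and v start at zero, so early estimates are too small.
    m_hat = m / (1 - beta1**t)
    v_hat = v / (1 - beta2**t)
    # Dividing by sqrt(v_hat) gives every parameter its own effective step size.
    theta = theta - lr * m_hat / (np.sqrt(v_hat) + eps)
    return theta, m, v


# Usage: one step on two parameters whose gradients differ by a factor of 10,000.
theta = np.array([1.0, 1.0])
grad = np.array([100.0, 0.01])
theta, m, v = adam_step(theta, grad, np.zeros(2), np.zeros(2), t=1, lr=0.1)
print(theta)  # both entries are approximately 0.9
```

Plain SGD with the same learning rate would move the first parameter by 10 and the second by 0.001. Adam's normalisation moves both by roughly `lr` on the first step, which is why it copes well with badly scaled gradients.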

Based on our analysis of papers in OpenReview, Adam or its AdamW variant is mentioned in over half of this year’s ICLR papers, and in almost 90% of the ICLR 2025 papers that mention any optimiser, with vanilla stochastic gradient descent accounting for most of the remaining 10%.

As Ba stated during the talk: “desperation drives innovation”.

Kingma and Ba met in London during an internship at Google DeepMind in 2014. The Adam paper started life both as an overdue course requirement for Ba and as a product of Kingma’s desire for better optimisers to train the variational auto-encoders he had developed.

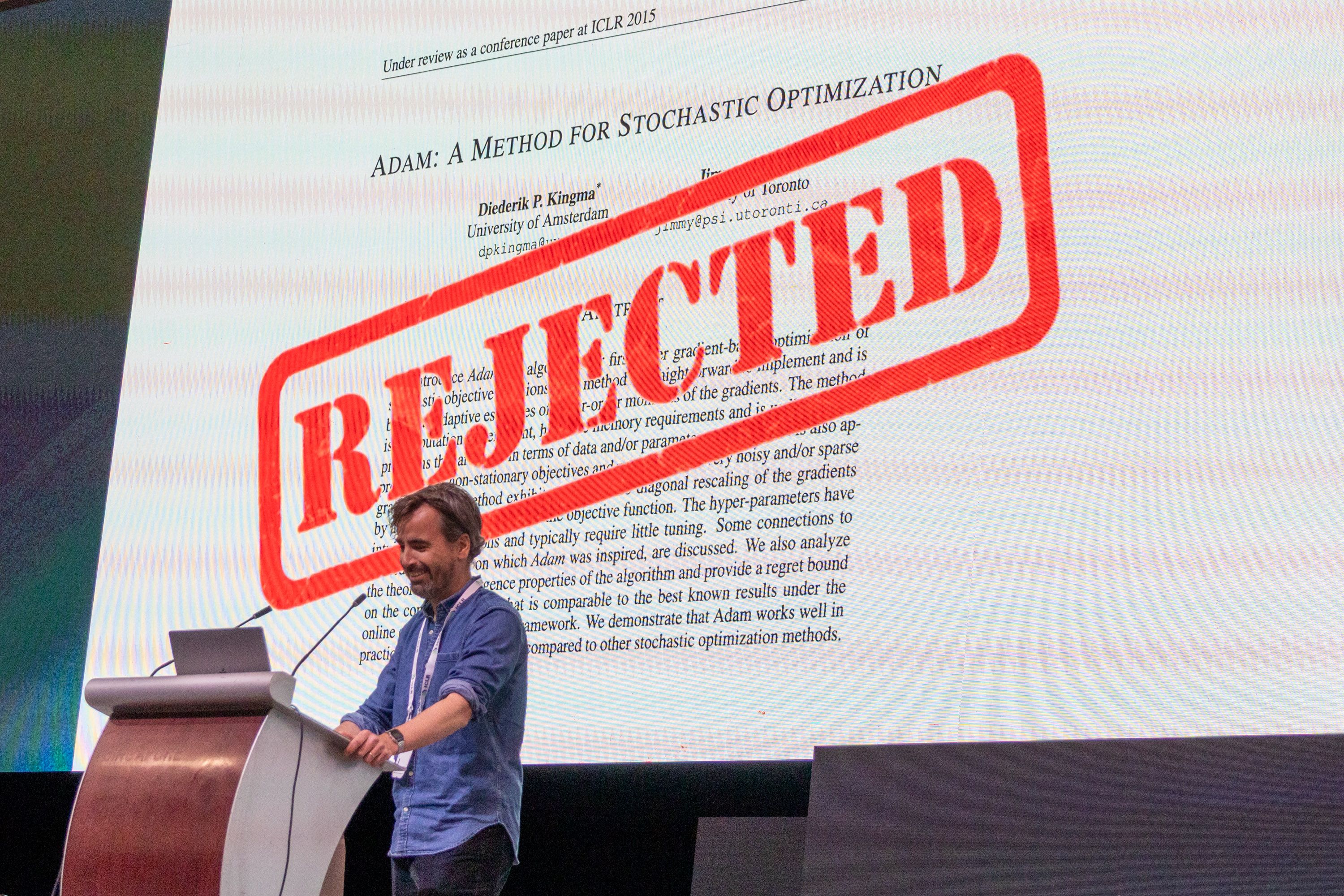

Despite its ubiquity today, Adam’s path to success was not straightforward.

Durk Kingma

After the paper was initially rejected from the main ICLR conference track, the authors sent a “fiery rebuttal email” to the ICLR organisers, explaining that the reasons given for rejection had already been addressed in revisions made to the paper before the deadline. It was eventually accepted as a poster (but not granted an oral presentation).

Many enhancements to Adam have been proposed in the years since, and the authors highlighted two variants: AdamW (Loshchilov and Hutter, ICLR 2019), which decouples weight decay from the adaptive gradient update, and Adam-mini (Zhang et al, ICLR 2025), which reduces memory usage. The latter has a poster at this year’s ICLR. Despite these improvements, the standard version of Adam remains in widespread use.
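The difference between adding an L2 penalty to Adam's gradient and AdamW's decoupled weight decay is easiest to see on a parameter that receives no loss gradient. The NumPy sketch below assumes the same update rule as plain Adam. The helper name and its `decoupled` flag are hypothetical. Scaling the decay by the learning rate follows the convention of PyTorch's `torch.optim.AdamW`; the AdamW paper writes it with a separate schedule multiplier.

```python
import numpy as np


def adam_with_decay(theta, grad, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999,
                    eps=1e-8, weight_decay=0.0, decoupled=True):
    """One Adam step with weight decay (hypothetical helper, not a library API).

    decoupled=False: L2 regularisation folded into the gradient, so the decay
        term is rescaled by the adaptive denominator like any other gradient.
    decoupled=True: AdamW-style decay applied directly to the weights,
        outside the adaptive normalisation.
    """
    if not decoupled:
        grad = grad + weight_decay * theta
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad**2
    m_hat = m / (1 - beta1**t)
    v_hat = v / (1 - beta2**t)
    update = m_hat / (np.sqrt(v_hat) + eps)
    if decoupled:
        # Shrink the weights in proportion to themselves, scaled by lr (PyTorch-style convention).
        update = update + weight_decay * theta
    return theta - lr * update, m, v


# Usage: a parameter with zero loss gradient, so only the decay acts on it.
theta0, zeros = np.array([1.0]), np.zeros(1)
l2_theta, _, _ = adam_with_decay(theta0, zeros, zeros, zeros, t=1, lr=0.1,
                                 weight_decay=0.01, decoupled=False)
adamw_theta, _, _ = adam_with_decay(theta0, zeros, zeros, zeros, t=1, lr=0.1,
                                    weight_decay=0.01, decoupled=True)
print(l2_theta, adamw_theta)  # roughly 0.9 versus 0.999
```

When the decay is folded into the gradient, Adam's normalisation turns even a tiny decay term into a full-size step of about `lr`. The decoupled version shrinks the weight by exactly `lr * weight_decay` of its value, independent of the gradient statistics.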

Kingma ended the talk with some comments on the field of AI as a whole, expressing both hopes and concerns.

Neural Machine Translation

The runner-up of the test of time award was the paper Neural Machine Translation by Jointly Learning to Align and Translate (arXiv), by Dzmitry Bahdanau, Kyunghyun Cho and Yoshua Bengio. The presentation was given by Bahdanau.

Another hugely impactful paper, this work is widely credited with introducing and popularising the attention mechanism at the core of the Transformer architecture that underpins so many of today’s state-of-the-art AI models.

Co-authors Yoshua Bengio and Dzmitry Bahdanau accepting their certificates, accompanied by the ICLR 2025 program chairs and general chair. Not pictured: Kyunghyun Cho.

Bahdanau highlighted contemporaneous work along the same lines including Memory Networks (Weston et al, also at ICLR 2015) and Neural Turing Machines (Graves et al, 2014), all of which went against the grain at a time when recurrent neural networks (RNNs) were the go-to architecture for sequence processing.

While developing the paper, Bahdanau had heard rumours of a big neural-net-based translation project within Google, with a much larger compute budget than Bahdanau’s lab. This Google project turned out to be the sequence-to-sequence paper which later won the NeurIPS 2024 test of time award.

Once again, desperation drove innovation, and Bahdanau looked for ways to model long-term dependencies (i.e., reliably translate long sentences) that could perform well using only the 4 GPUs in his lab.

After a few failed attempts, one key idea made a big difference: letting the model “search” across the source sequence for the information it needed. Shortly before publication, the “search” terminology was replaced by the phrase “a mechanism of attention”.
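In the paper, this “search” is an alignment model that scores each source annotation h_j against the previous decoder state s_{i-1} as e_ij = v_a^T tanh(W_a s_{i-1} + U_a h_j), normalises the scores with a softmax, and uses the weighted average of the annotations as the context vector. Below is a minimal NumPy sketch of one decoder step. The encoder and decoder RNNs are omitted, the weights are random stand-ins for learned parameters, and the function names are hypothetical.

```python
import numpy as np


def softmax(x):
    # Subtract the max for numerical stability.
    e = np.exp(x - np.max(x))
    return e / e.sum()


def additive_attention(s_prev, H, W_a, U_a, v_a):
    """Additive attention for one decoder step (illustrative sketch).

    s_prev: previous decoder state, shape (n,)
    H:      encoder annotations h_1..h_T, shape (T, d)
    W_a: (k, n), U_a: (k, d), v_a: (k,), learned in a real model
    Returns the context vector c (shape (d,)) and the weights alpha (shape (T,)).
    """
    # Alignment scores e_j = v_a^T tanh(W_a s_prev + U_a h_j), for all j at once.
    scores = np.tanh(s_prev @ W_a.T + H @ U_a.T) @ v_a  # shape (T,)
    # Normalise the scores into a distribution over source positions.
    alpha = softmax(scores)
    # Context vector: annotations averaged under the attention weights.
    c = alpha @ H
    return c, alpha


# Usage with random stand-in values.
rng = np.random.default_rng(0)
T, d, n, k = 5, 4, 3, 6
H = rng.normal(size=(T, d))
s_prev = rng.normal(size=n)
W_a, U_a, v_a = rng.normal(size=(k, n)), rng.normal(size=(k, d)), rng.normal(size=k)
c, alpha = additive_attention(s_prev, H, W_a, U_a, v_a)
```

Because the weights alpha form a distribution over every source position, the decoder can draw on distant words directly instead of relying on a single fixed-length summary of the whole sentence.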

This attention mechanism was adopted and extended in the 2017 paper Attention Is All You Need, which introduced the Transformer architecture. Bahdanau credited that paper with four great ideas:

- Do attention at all layers, not just the top

- Attend to the previous layer for all positions in parallel

- Use many attention heads

- Ditch the RNN
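Three of these ideas (parallel attention over all positions, many heads, and no recurrence) show up directly in multi-head scaled dot-product self-attention, and stacking it in a loop gives attention at every layer. The NumPy sketch below is a minimal illustration under stated assumptions: it omits layer normalisation, feed-forward sublayers, masking and positional encodings, uses random weights, and its function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(X, W_q, W_k, W_v, W_o, num_heads):
    """Multi-head scaled dot-product self-attention (illustrative sketch).

    X: (T, d_model) outputs of the previous layer.
    W_q, W_k, W_v, W_o: (d_model, d_model) projection matrices.
    Returns the layer output (T, d_model) and attention weights (heads, T, T).
    """
    T, d_model = X.shape
    assert d_model % num_heads == 0
    d_head = d_model // num_heads

    def split(M):
        # (T, d_model) -> (heads, T, d_head)
        return M.reshape(T, num_heads, d_head).transpose(1, 0, 2)

    # Project every position in parallel; no recurrence over time steps.
    Q, K, V = split(X @ W_q), split(X @ W_k), split(X @ W_v)
    # Each head scores every position against every other position.
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)
    heads = weights @ V  # (heads, T, d_head)
    # Concatenate the heads and apply the output projection.
    concat = heads.transpose(1, 0, 2).reshape(T, d_model)
    return concat @ W_o, weights


# Usage: attention applied at every layer of a small stack.
rng = np.random.default_rng(0)
T, d_model, num_heads, num_layers = 6, 8, 2, 3
X = rng.normal(size=(T, d_model))
for _ in range(num_layers):
    W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) / np.sqrt(d_model) for _ in range(4))
    out, weights = multi_head_self_attention(X, W_q, W_k, W_v, W_o, num_heads)
    X = X + out  # residual connection; other sublayers omitted
```

Every position's output comes from a few matrix products over the whole sequence, which is what lets this design parallelise on GPUs in a way a step-by-step RNN cannot.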

Bahdanau also ended his talk with a discussion of the field of AI as a whole. At this point, as if on cue, a loud thunderstorm above the venue punctuated proceedings with ominous rumbles.

Risks, Concerns, and Mitigations

Both Kingma and Bahdanau ended their talks by expressing concerns about the field of AI, and the impact it could have if sufficient mitigations are not taken.

While acknowledging other categories of risks, they both focused on the potentially destabilising political and economic effects of widely deployed powerful AI systems.

Kingma called for mitigations in the form of technological countermeasures, sensible AI regulations, and a strengthening of social support systems.

Dzmitry Bahdanau

Bahdanau highlighted the importance of private, local, and cheap-to-run AI systems, and called for researchers to treat amortised local inference cost as a key consideration when developing models.