NeurIPS 2024 was, once again, the largest NeurIPS ever. In fact, it was possibly the largest in-person academic AI conference ever.

From systems pushing boundaries with additional inference-time compute, to researchers exploring beyond attention-based model architectures — this year’s conference featured exciting new trends from across the field.

Now that the conference has wrapped up, we’ve summarised some of the themes we spotted this year.

Sunday’s workshops included Machine Learning for Systems, Time Series in the Age of Large Models, Interpretable AI, and Regulatable ML. The workshop programme is always varied, allowing researchers to self-organise into their niches and share more cutting-edge research than in the main conference.

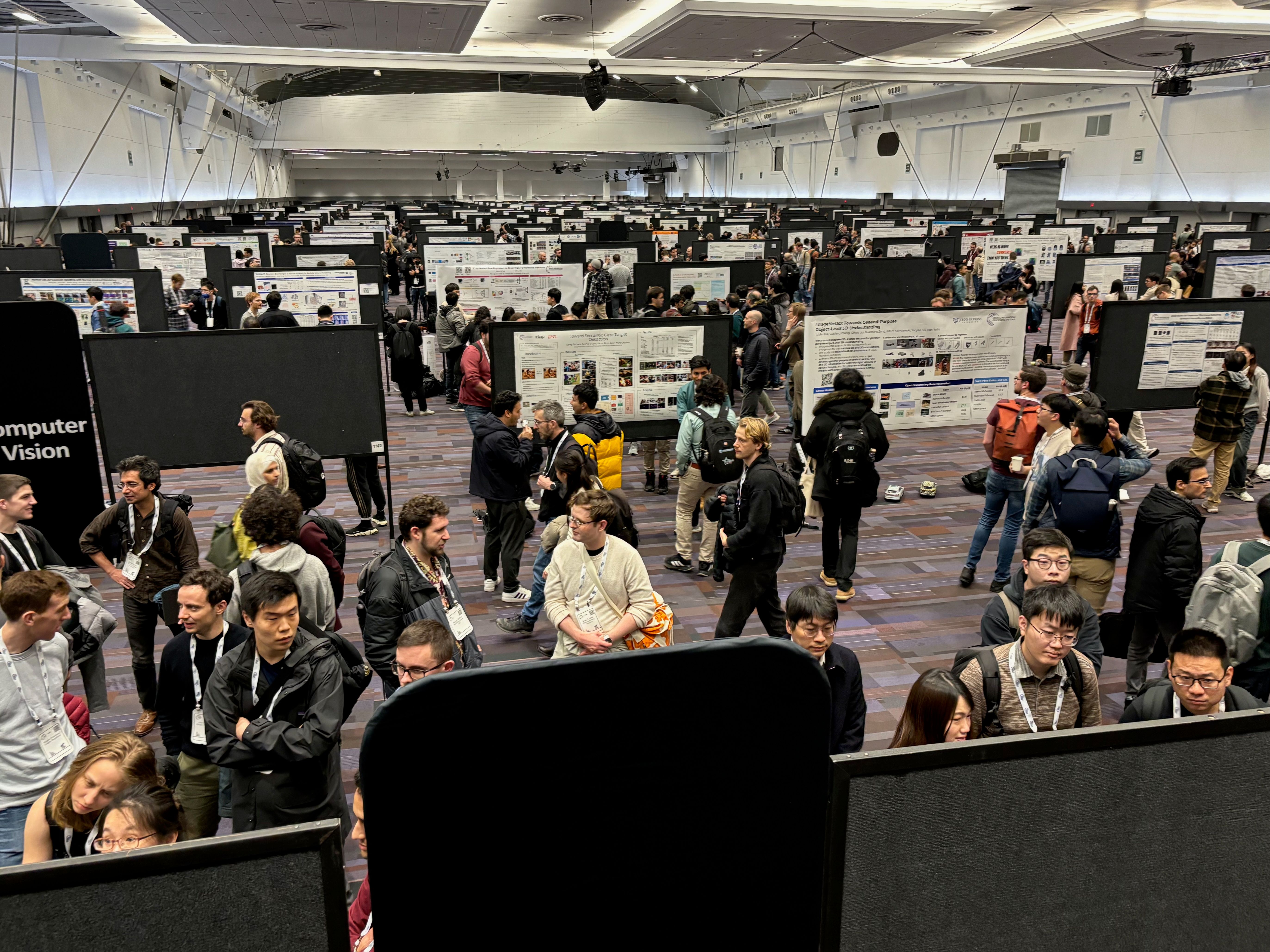

First floor of the west building

In the Intrinsically Motivated Open-ended Learning workshop, sci-fi author Ted Chiang gave a talk titled “Speculations on educating artificial lifeforms”, which included some of his reasoning around the digital entities in his short story, “The Lifecycle of Software Objects”.

There were presentations from startups, too, laying out their plans and visions. Each of these startups is approaching AI in its own way.

David Ha of Tokyo-based Sakana AI presented at the Workshop on Foundation Model Interventions. Sakana is aiming to build nature-inspired AI, leveraging ideas like evolution and collective intelligence.

Zenna Tavares of NY/Boston-based Basis spoke at the System 2 Reasoning at Scale workshop, alongside Cornell’s Kevin Ellis. Basis’ three-year goal is to build “the first AI system truly capable of everyday science” through project MARA: Modeling, Abstraction, and Reasoning Agents.

Basis’ talk was followed by a presentation from François Chollet on the ARC Prize, a challenge for abstraction and reasoning, first set in 2019, that recently concluded its latest $1m prize round.

Chollet stated that he sees the ARC Prize as a tool for directing research interest more than a benchmark. He said he believes discrete program search solutions for ARC are still under-explored, and is confident that the community is on track to “solve” the prize very soon. When that happens, he will have another challenge ready.

For more detail, see the ARC Prize 2024 Technical Report.

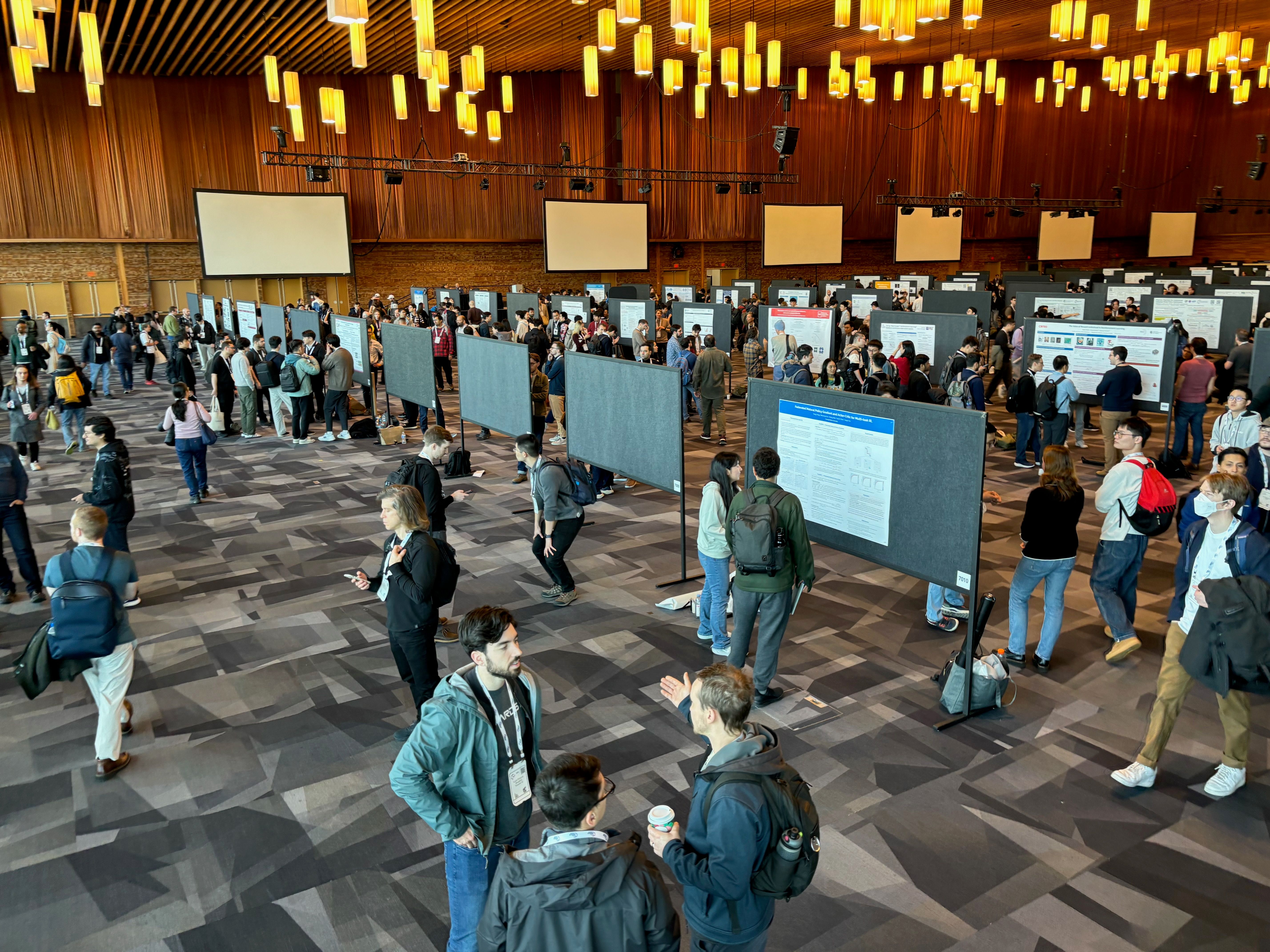

Second floor of the west building

The conference wrapped up today, concluding with the second day of the 56 workshops and 16 competitions.

This is the last post in our daily NeurIPS 2024 coverage, to be followed by a highlights post.

The Math-AI workshop ended with three contributed talks (by the workshop’s best-paper-award-winning authors), and a poster session.

David Brandfonbrener presented VerMCTS: Synthesizing Multi-Step Programs using a Verifier, a Large Language Model, and Tree Search.

David Brandfonbrener

In VerMCTS, a program verifier is used as a reward signal for Monte Carlo tree search (MCTS). By applying the verifier to partial programs as they are iteratively expanded, LLMs can be effectively partnered with formal verifiers to generate verified programs.
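The sketch below makes the idea concrete with verifier-guided MCTS on a toy problem we invented for illustration. A "program" is a sequence of `+1`/`*2` operations applied to 1, and it must evaluate to `TARGET`. The toy `verify` function can reject a partial program early, once the value already overshoots or the length budget is spent, and that early rejection is the property the approach relies on. A random pick among untried tokens stands in for the LLM's proposals. All names and reward values are hypothetical, and this is not the VerMCTS implementation.

```python
import math
import random

# Hypothetical toy spec: starting from 1, a "program" is a sequence of tokens
# from TOKENS, and it is correct if it evaluates to exactly TARGET.
TARGET = 10
MAX_LEN = 6
TOKENS = ("+1", "*2")  # stand-in for continuations an LLM would propose

def run(program):
    value = 1
    for tok in program:
        value = value + 1 if tok == "+1" else value * 2
    return value

def verify(program):
    """Toy verifier that also judges *partial* programs.
    'pass': complete and correct; 'fail': can never become correct;
    'partial': not correct yet, but still viable."""
    value = run(program)
    if value == TARGET:
        return "pass"
    if value > TARGET or len(program) >= MAX_LEN:
        return "fail"  # both operations only increase the value
    return "partial"

REWARD = {"fail": 0.0, "partial": 0.5, "pass": 1.0}  # hypothetical shaping

class Node:
    def __init__(self, program, parent=None):
        self.program = program
        self.parent = parent
        self.children = []
        self.untried = list(TOKENS)
        self.visits = 0
        self.total = 0.0
        self.status = verify(program)

def uct(child, parent_visits, c=1.4):
    exploit = child.total / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore

def verifier_guided_mcts(iterations=500, seed=0):
    rng = random.Random(seed)
    root = Node([])
    for _ in range(iterations):
        node = root
        # 1. Selection: follow UCT through fully expanded, still-viable nodes.
        while node.status == "partial" and not node.untried:
            node = max(node.children, key=lambda ch: uct(ch, node.visits))
        # 2. Expansion: extend a viable partial program by one untried token.
        if node.status == "partial":
            tok = node.untried.pop(rng.randrange(len(node.untried)))
            child = Node(node.program + [tok], parent=node)
            node.children.append(child)
            node = child
        # 3. Evaluation: the verifier's verdict on the (partial) program.
        if node.status == "pass":
            return node.program
        reward = REWARD[node.status]
        # 4. Backpropagation of the verifier reward up to the root.
        while node is not None:
            node.visits += 1
            node.total += reward
            node = node.parent
    return None

print(verifier_guided_mcts())
```

Because rejected partial programs earn zero reward and are never expanded further, the search spends its budget on branches the verifier still considers viable.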

Sean Welleck presented Inference Scaling Laws: An Empirical Analysis of Compute-Optimal Inference for LLM Problem-Solving.

Sean Welleck

This paper centres around the question: how do we best use a given inference compute budget?

It does this by addressing three key questions:

- What is the optimal model size?

- What is the best meta-generation strategy?

- If compute limits are removed, how far can inference strategies take us?

Interestingly, the best answer isn’t to always use the largest possible model. Welleck noted that “smaller models with advanced inference are often optimal”, and pointed the audience to his meta-generation tutorial from earlier this week.
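The sketch below illustrates how a smaller model can win at a fixed budget. It compares two hypothetical models using the common approximation of about 2N FLOPs per generated token for an N-parameter model. To keep it short, it scores each model by best-of-k with an idealised oracle verifier. That is a simplification standing in for the meta-generation strategies the paper actually compares, and every number is made up.

```python
# Hypothetical numbers throughout: model sizes, per-sample accuracies,
# tokens per sample and the budget are illustrative, not from the paper.
BUDGET_FLOPS = 10**14
TOKENS_PER_SAMPLE = 500

models = {
    # name: (parameter count, assumed probability that one sample is correct)
    "small": (7_000_000_000, 0.30),
    "large": (34_000_000_000, 0.45),
}

def samples_affordable(n_params, budget=BUDGET_FLOPS, tokens=TOKENS_PER_SAMPLE):
    # Rule of thumb: generating one token costs about 2 * N FLOPs.
    return budget // (2 * n_params * tokens)

def coverage(p_single, k):
    # Chance that at least one of k independent samples is correct, i.e. the
    # accuracy of best-of-k if an oracle verifier could always pick it out.
    return 1 - (1 - p_single) ** k

for name, (n_params, p) in models.items():
    k = samples_affordable(n_params)
    print(f"{name}: {k} samples, coverage {coverage(p, k):.3f}")
```

With these made-up numbers the small model can afford 14 samples against the large model's 2, and its coverage ends up higher despite its lower single-sample accuracy.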

Nelson Vadori presented Learning Mathematical Rules with Large Language Models.

Nelson Vadori

This paper assesses the generalisation abilities of LLMs by fine-tuning open-source LLMs on synthetic data.

It concludes that fine-tuning models on specific mathematical rules allows those rules to be reused in the context of word problems (bottom-up generalisation), and that training on a large and diverse set of tokens improves the models’ ability to generalise specific rules such as distributivity and equation manipulation (top-down generalisation).

This final set of talks was followed by a poster session.

Poster session

There was a varied selection of posters including topics like ML for formal mathematics, informal mathematics, and applications in mathematical physics, as well as some new benchmarks.

For a full list of accepted papers, see the workshop website.

There will be one more post on this blog with some highlights from Sunday’s workshops, followed by an overall conference highlights post.

Three more invited talks from the Math-AI workshop.

Google DeepMind’s Adam Zsolt Wagner presented a mathematician’s perspective, and spoke about using machine learning as a tool to find good constructions in mathematics — for example, to identify counterexamples to conjectures.

In contrast to the FunSearch method, which searches through the space of programs that generate solutions, Wagner’s proposed approach searches through solution space directly.

Adam Zsolt Wagner

By choosing the right representation, tokenising it for a (small) transformer-based language model, and alternating between local search (making small changes to existing constructions) and global search (training the model on the best constructions and generating more constructions like them), it is possible to find interesting new constructions that are difficult for mathematicians to find manually.

For more details, see Wagner’s PatternBoost paper.
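The local/global alternation can be sketched on a toy problem with a known answer: binary strings of length 20 with no two adjacent 1s, scored by their number of 1s (the optimum is 10). This sketch is not Wagner's code. The transformer is replaced by a much simpler stand-in, a per-position frequency model fitted to the best constructions, which makes the global step essentially the cross-entropy method. Function names and parameters are hypothetical.

```python
import random

N = 20  # hypothetical construction size

def score(x):
    # Toy objective: number of 1s; -1 marks an invalid construction.
    if any(x[i] and x[i + 1] for i in range(len(x) - 1)):
        return -1
    return sum(x)

def local_search(x, rng):
    x = list(x)
    # Repair: drop any 1 whose left neighbour is also 1.
    for i in range(1, len(x)):
        if x[i] and x[i - 1]:
            x[i] = 0
    # Greedy improvement: add 1s, in random order, wherever both neighbours are 0.
    order = list(range(len(x)))
    rng.shuffle(order)
    for i in order:
        left = x[i - 1] if i > 0 else 0
        right = x[i + 1] if i < len(x) - 1 else 0
        if not x[i] and not left and not right:
            x[i] = 1
    return x

def fit_and_sample(elite, rng, n_samples):
    # Stand-in for "train a transformer on the best constructions and sample":
    # per-position Bernoulli probabilities estimated from the elite set.
    probs = [sum(x[i] for x in elite) / len(elite) for i in range(len(elite[0]))]
    probs = [min(max(p, 0.05), 0.95) for p in probs]  # keep some exploration
    return [[int(rng.random() < p) for p in probs] for _ in range(n_samples)]

def patternboost_sketch(rounds=10, pop=50, elite_frac=0.2, seed=0):
    rng = random.Random(seed)
    population = [local_search([0] * N, rng) for _ in range(pop)]
    for _ in range(rounds):
        population.sort(key=score, reverse=True)
        elite = population[: max(1, int(pop * elite_frac))]   # global step input
        samples = fit_and_sample(elite, rng, pop)             # global step
        population = elite + [local_search(x, rng) for x in samples]  # local step
    return max(population, key=score)

best = patternboost_sketch()
print(score(best), best)
```

Local search alone stops at any maximal string, which has at least 7 ones for length 20. The global step's job is to propose starting points from which local search lands on better strings.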

Wagner’s conclusions:

- Even the simplest learning methods can produce good results in mathematics

- Slightly more complex algorithms can be useful tools for mathematicians

- There is plenty of low-hanging fruit in the area; the next few years should be really exciting!

Jeremy Avigad, director of the Hoskinson Center for Formal Mathematics at CMU, started his talk by presenting a history of automated reasoning and interactive theorem provers.

Avigad stated that machine learning can help interactive theorem proving in three ways: premise selection, search, and copilots.

Conversely, interactive theorem proving is useful for machine learning because it provides a clear measure of success (it’s hard, but correct proofs are verifiable).
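Here is a small illustration of what "verifiable" means. In Lean 4, a proof is a term that the kernel checks against the stated proposition. The two examples below are accepted. Replacing either proof with a term that does not fit its statement would produce an error rather than a silently wrong result.

```lean
-- Lean 4 (core only): the kernel checks each proof term against its statement.
example (a b : Nat) : a + b = b + a := Nat.add_comm a b

-- Closed arithmetic facts can be checked by evaluation.
example : 2 + 2 = 4 := rfl
```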

Jeremy Avigad

Avigad highlighted the importance of making AI mathematics tools useful to mathematicians, and mentioned miniCTX and ImProver as two research works with this goal in mind.

He also recommended Jason Rute’s talk on The Last Mile (pdf) for more on how to make AI theorem provers useful to real users.

Stanford’s James Zou proposed that instead of thinking of AI as a tool, it’s sometimes useful to think of it as a partner.

He gave an overview of two research projects done by his lab — The Virtual Lab, and SyntheMol.

In the former, a “virtual lab” was set up, consisting of a set of “LLM agents”. Each agent, built on top of GPT-4, is given a specific role (e.g. biologist) and access to domain-specific tools (e.g. AlphaFold). The lab then conducts large numbers of virtual “meetings”, with some input from human researchers.

This process was used to successfully design new SARS-CoV-2 nanobodies, which were then experimentally validated.

James Zou

In SyntheMol, generative models were used to create “recipes” for new, easy-to-synthesize antibiotics (in contrast to some prior approaches, where new molecules were designed directly, but turned out to be hard to synthesize). Of the 70 recipes sent to a lab, 58 were successfully synthesised and experimentally validated against diverse bacteria.

These three invited talks gave an

OpenAI’s Noam Brown began by stating that progress in AI from 2019 to today is mostly down to scaling data and compute (specifically, training compute). While this can keep working, it’s expensive.

He introduced the solution — test-time compute — by first talking about his experience building poker bots almost a decade ago.

After Claudico, his CMU team’s initial attempt at a superhuman poker bot, lost against human poker players, he had a realisation: human players had less pre-training data (fewer hands played), but would spend more time thinking before they made a game decision. The bots, on the other hand, acted almost instantly.

Building in extra “thinking time” (inference-time search) led to success at 2-player poker with Libratus in 2017 and 6-player poker with Pluribus in 2019. In a way, this method continued a decades-long tradition of inference-time compute in game bots: TD-Gammon for backgammon, Deep Blue for chess, and AlphaGo for Go.

By having the model think for 20 seconds in the middle of a hand, you get roughly the same improvement as scaling up the model size and training by 100,000x.

Noam Brown on poker bots

Over the first three years of his PhD, Brown had managed to scale up the model size and pre-training for his poker bot by 100x. Adding more test-time compute was the key to a system that could beat human players within a reasonable overall budget.

Pluribus cost under $150 to train, and could run on 28 CPU cores. This was significantly cheaper than it would have been to build a system with minimal test-time compute purely scaled through training.

Noam Brown

Since then, there has been other research establishing similar trade-offs — “scaling laws” — between train-time and test-time compute in other games.

OpenAI’s o1 model applies this technique to reasoning in natural language, using reinforcement learning to generate a chain of thought before answering. Brown described o1 as “a very effective way to scale the amount of compute used at test time and get better performance”.

He noted that while the o1 approach is good, it might not be the best way to do this, and recommended that other researchers seriously consider the benefits of additional test-time compute in natural language reasoning. For researchers wanting to get an intuition for the process behind o1, he pointed to the corresponding release blog post — especially the chain of thought examples published there.

This approach works well for some problems, but not as well for others, and Brown introduced the concept of a “generator-verifier gap” to categorise promising problem domains.

The generator-verifier gap / Noam Brown's slides
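Subset-sum gives a toy view of why a large generator-verifier gap is promising. This is our illustration, not Brown's example. Checking a proposed answer takes one pass, while finding one is hard. Even a deliberately weak, random generator succeeds once enough samples are checked, so extra test-time compute translates directly into success. The generator is a hypothetical stand-in for sampling a model.

```python
import random

def verify(nums, target, subset):
    # Verification is cheap: one pass over the chosen indices.
    return sum(nums[i] for i in subset) == target

def generate(nums, rng):
    # Deliberately weak generator (hypothetical stand-in for a model):
    # propose a uniformly random subset of indices.
    return [i for i in range(len(nums)) if rng.random() < 0.5]

def generate_and_verify(nums, target, n_samples, seed=0):
    """Spend test-time compute on up to n_samples proposals and let the
    verifier decide which one (if any) to return."""
    rng = random.Random(seed)
    for attempt in range(1, n_samples + 1):
        candidate = generate(nums, rng)
        if verify(nums, target, candidate):
            return candidate, attempt
    return None, n_samples

def success_probability(p_single, n_samples):
    # With a reliable verifier, k independent tries succeed w.p. 1 - (1 - p)^k.
    return 1 - (1 - p_single) ** n_samples

nums, target = [3, 34, 4, 12, 5, 2], 9
# 2 of the 64 subsets sum to 9 ({4, 5} and {3, 4, 2}), so p_single = 2 / 64.
print(success_probability(2 / 64, 1), success_probability(2 / 64, 100))
print(generate_and_verify(nums, target, n_samples=1000))
```

When the verifier is unreliable, or as costly as generation, this trade breaks down. The gap is useful for picking domains where more inference compute pays off.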

Brown clearly stated that there is still room to push inference compute much further, and that “AI can and will be more than chatbots”. Specifically, he expects that AI with additional inference compute will “enable new scientific advances by surpassing humans in some domains while still being much worse in others”.

Brown’s talk was followed by a highly anticipated panel session. Throughout, there was a queue of people outside the room waiting to get in.

The panel included mathematics professor Junehyuk Jung as well as invited speakers Dawn Song, Noam Brown, and Jeremy Avigad, and was moderated by Swaroop Mishra from Google DeepMind.

Panel session in the NeurIPS 2024 Math-AI workshop

A few highlights from the panel discussion:

- Progress has been extremely fast: in 2022, no one would have believed that the MATH benchmark would have been saturated by 2024.

- Advice for anyone designing new benchmarks: have a very wide gradient of difficulty. Include some low-end examples (what models can do today) and some high-end examples (things we’re not even sure are possible).

- On rating current AI mathematics approaches from 0 to 10: “with a little bit of human intervention we are already at 7 or 8 out of 10. With zero human intervention… less than one”.

- OpenAI’s research is focused on informal (rather than formal) mathematics: they expect progress in this area to be more generally useful.

- On autoformalisation: difficult and (in Lean) limited to the scope of mathlib.

- There were general expectations that mathematical practice will change in years to come.

- Even with AI systems that are better at theorem-proving than the best humans, humans would still have an important role to play. This has already been seen in chess and Go, where humans can be effectively paired with AI systems.

Summaries of the workshop’s afternoon sessions will be posted on this blog over the next few days.

The Math-AI workshop was well-attended, full of optimism, and featured several high-profile speakers from across machine learning and mathematics.

Schedule for the day

The first invited talk was given by UC Berkeley’s Dawn Song, and was broadly based on an upcoming position paper titled “Formal Mathematical Reasoning: A New Frontier in AI”.

The view taken in the paper and talk is that AI-based formal mathematical reasoning has reached an inflection point, and significant progress is expected in the next few years, through the flywheel loop where “human-written formal math data leads to more capable LLMs, which in turn eases the creation of more data”.

For more background on this area, read our introduction to ML and formal mathematics.

Dawn Song

Song laid out four main directions for future work: data, algorithms, tools for human mathematicians, and code generation/verification.

Song also emphasised the importance of benchmarks, and the paper proposes a new taxonomy inspired by the autonomy levels for self-driving cars. For theorem proving the proposed system ranges from level 0, “checking formal proofs”, to level 5, “solving problems and discovering new math beyond the human level”. Level 3 is the highest level covered by existing evaluations.

Similar taxonomies are defined for informal reasoning, autoformalisation, conjecturing, and code generation and verification.

The second invited talk was given by Apple’s Samy Bengio.

Bengio’s talk focused on one question: can transformers learn deductive reasoning?

Samy Bengio

He gave an overview of a new measure, globality degree, proposed by his lab in a NeurIPS paper this year, which aims to capture the learnability of tasks by transformers.

This research showed that tasks with high globality degree cannot easily be solved by transformers trained through SGD, and that naive approaches such as “agnostic scratchpads” (general meta-generation techniques, like chain-of-thought, not designed with these types of tasks in mind) do not mitigate this.

To address this, the paper introduced a new approach, inductive scratchpads, which can help transformers learn problems with high globality degree.

This blog will slow down over the weekend given the busy workshop schedule. For now, a few photos from throughout the week…

The market for NeurIPS-relevant jobs is clearly doing well, with a packed job board alongside the many sponsor companies using the expo hall for recruitment.

One of the job boards at the conference

Free lunches were provided: a great help for attendees trying to get through hundreds of posters in the 11am-2pm slot.

A violation of the no-free-lunch theorem?

Controversially, no NeurIPS mugs this year! Thermoses instead.

The NeurIPS 2024 thermos

Unusually, two papers received this year’s test of time award, “given the undeniable influence of these two papers on the entire field”.

Generative Adversarial Nets

Authors: Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio (original paper)

The GAN paper kick-started the interest in generative AI, and first author Ian Goodfellow’s 2016 “Deep Learning” book was likely read by many in the audience.

Unfortunately, Goodfellow couldn’t be present in person due to the debilitating effects of Long COVID. He attended remotely and gave some brief comments, highlighting the severe effects of Long COVID and suggesting that anyone wanting to help prevent the spread of COVID and other diseases consider supporting the Public Health Action Network, a non-profit he cofounded.

The rest of the talk was given by co-author David Warde-Farley.

David Warde-Farley presenting a historical perspective on the GAN paper

Warde-Farley provided some historical context, and told the story of how GANs went from idea to NeurIPS paper submission in just 12 days.

He credited the intellectual environment in the lab, the spirit of collegiality, then-intern Jean Pouget-Abadie’s background in game theory, and the lab’s Theano system (allowing flexible GPU-accelerated programming) for their unusual productivity.

Interestingly, given its eventual influence, the GAN paper was not an immediate success. It did not get selected for a spotlight or oral session, and Warde-Farley admitted that the authors themselves “didn’t think much of the paper either” at the time.

In the following years, the paper became more prominent as other researchers built upon the core technique, progressing all the way from generating 28x28 greyscale images to today’s high-resolution photorealistic generations.

Sequence to Sequence Learning with Neural Networks

Authors: Ilya Sutskever, Oriol Vinyals, Quoc V. Le (original paper)

All three co-authors attended in person to accept their award, and first author Ilya Sutskever presented the talk.

With this award Sutskever completed a NeurIPS hat trick of sorts — having also been a co-author on the 2022 and 2023 NeurIPS test of time award papers.

Ilya Sutskever, Oriol Vinyals, and Quoc Le, receiving their award certificates

Sutskever commented on what the authors got right in hindsight (betting on deep learning, autoregressive models, and scaling), and what they got wrong (using pipelining and LSTMs). Sutskever went as far as calling the LSTM architecture “ancient history”, describing it as “what poor ML researchers did before transformers”.

Sutskever also speculated on the future, stating that “pre-training as we know it will end”. His reasoning: although compute is growing, data is not, and we’ve reached “peak data”.

In the short term he expects synthetic data and inference-time compute to help. In the long term, he believes that superintelligence is “obviously where this field is headed”. He expects superintelligence to have agency, be able to reason, have a deep level of understanding, and be self-aware.

Sutskever declined to make exact predictions or state timelines, but his closing comments generally had a cautious and somewhat concerned undertone, highlighting that “the more [a system] reasons, the more unpredictable it becomes”.

Stochastic Taylor Derivative Estimator

Oral

First author Zekun Shi presented this paper, which won a NeurIPS 2024 best paper award.

Zekun Shi presenting 'Stochastic Taylor Derivative Estimator'

The paper introduces a new amortisation scheme for autodiff on higher-order derivatives, replacing the exponential scaling inherent in a naive approach with a method which scales linearly (in k, for derivatives of order k).
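The following is a minimal, hand-rolled sketch of two ideas we take the paper's title to refer to. The first is Taylor-mode ("jet") forward propagation, which pushes a line x + t·v through a function to get a directional derivative in one pass. The second is averaging over random directions to estimate a differential operator, here the Laplacian, Hutchinson-style. It is second-order only, supports just `+` and `*`, and all names are hypothetical. It is not the authors' implementation.

```python
import itertools
import random

class Jet2:
    """Truncated Taylor series c0 + c1*t + c2*t**2 of a scalar along a line."""

    def __init__(self, c0, c1=0.0, c2=0.0):
        self.c = (c0, c1, c2)

    @staticmethod
    def lift(x):
        return x if isinstance(x, Jet2) else Jet2(x)

    def __add__(self, other):
        other = Jet2.lift(other)
        return Jet2(*(a + b for a, b in zip(self.c, other.c)))

    __radd__ = __add__

    def __mul__(self, other):
        other = Jet2.lift(other)
        a0, a1, a2 = self.c
        b0, b1, b2 = other.c
        # Product of two power series, truncated after the t**2 term.
        return Jet2(a0 * b0, a0 * b1 + a1 * b0, a0 * b2 + a1 * b1 + a2 * b0)

    __rmul__ = __mul__

def second_directional_derivative(f, x, v):
    # One forward pass of t -> x + t*v through f; c2 = (v^T H v) / 2.
    out = f([Jet2(xi, vi) for xi, vi in zip(x, v)])
    return 2.0 * out.c[2]

def laplacian_estimate(f, x, directions):
    # Average v^T H v over directions with E[v v^T] = I (Hutchinson-style).
    vals = [second_directional_derivative(f, x, v) for v in directions]
    return sum(vals) / len(vals)

def f(x):
    return x[0] * x[0] * x[1] + x[1] * x[1] * x[1]  # exact Laplacian: 8 * x[1]

# All four Rademacher sign vectors in 2D: the average is exact here.
all_signs = [list(v) for v in itertools.product([1.0, -1.0], repeat=2)]
print(laplacian_estimate(f, [1.5, 2.0], all_signs))  # 16.0

# Randomly sampled sign vectors give an unbiased but noisy estimate instead.
rng = random.Random(0)
sampled = [[rng.choice([1.0, -1.0]) for _ in range(2)] for _ in range(64)]
print(laplacian_estimate(f, [1.5, 2.0], sampled))
```

In high dimensions, sampling a handful of directions avoids materialising the full Hessian. Each direction costs one jet forward pass.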

Learning Formal Mathematics From Intrinsic Motivation

Oral

First author Gabriel Poesia presented a new method to learn formal mathematics in the Peano environment through intrinsic motivation.

Gabriel Poesia presenting 'Learning Formal Mathematics From Intrinsic Motivation'

The approach uses a teacher-student setup to jointly learn to generate conjectures and prove theorems.

The “teacher” proposes new goals (conjectures), for which the “student” then attempts to find proofs through tree search.

Starting with only the basic axioms and driven only by intrinsic rewards, the system’s performance on extrinsic measures (success at proving basic human-written theorems) improved over the training process.

This method does not require any human data, and it seems likely that with more compute the method would continue to improve.

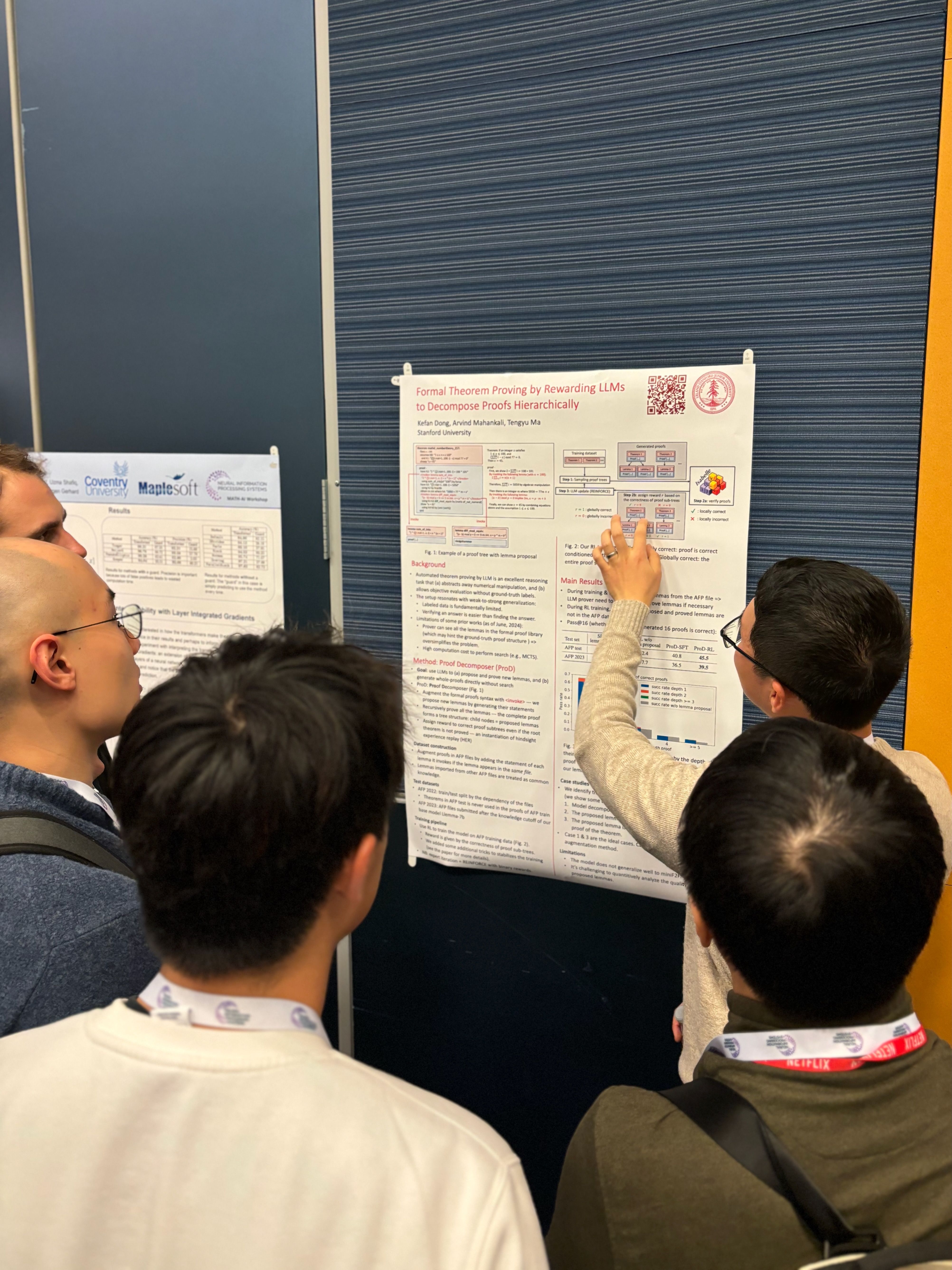

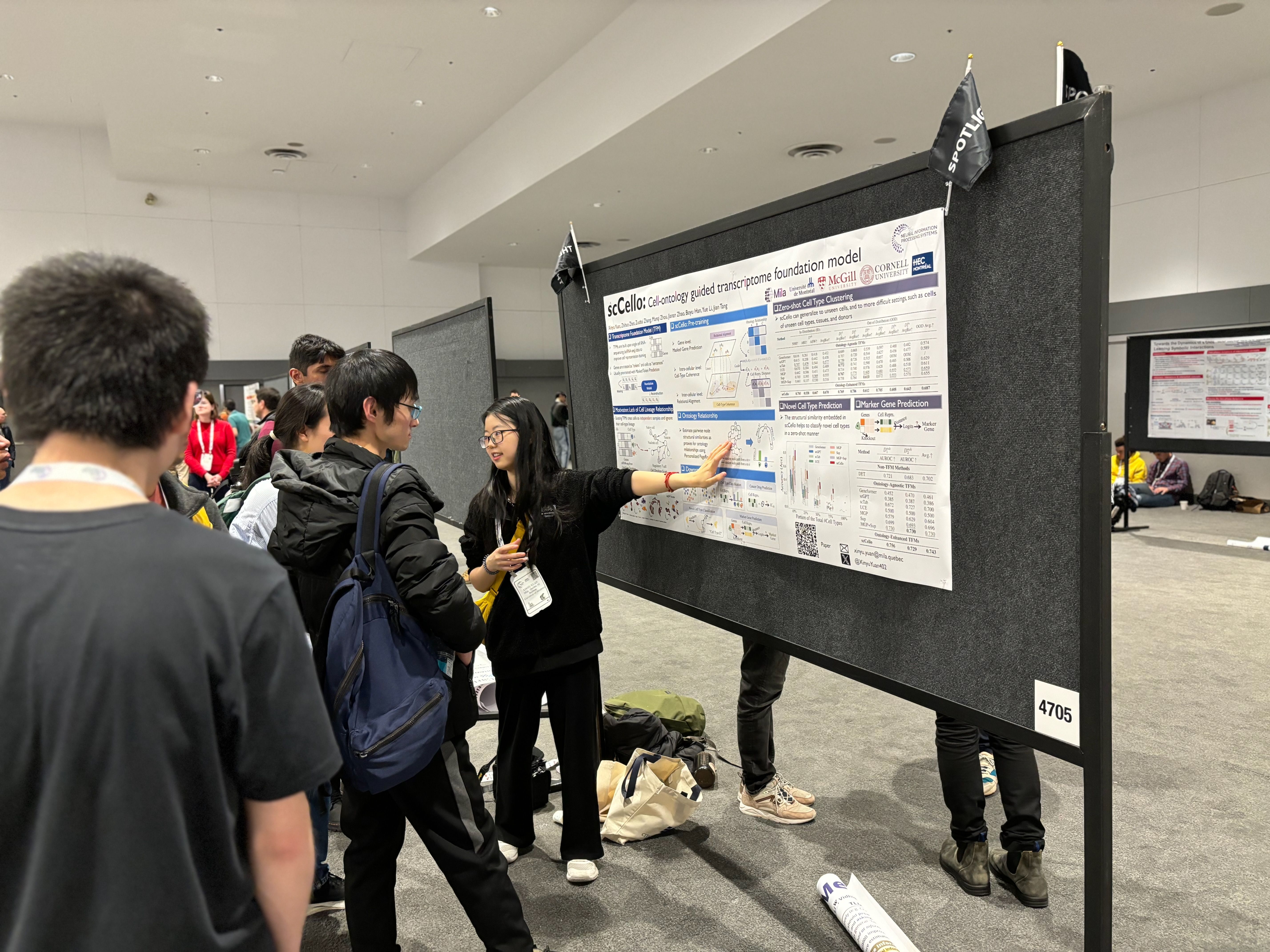

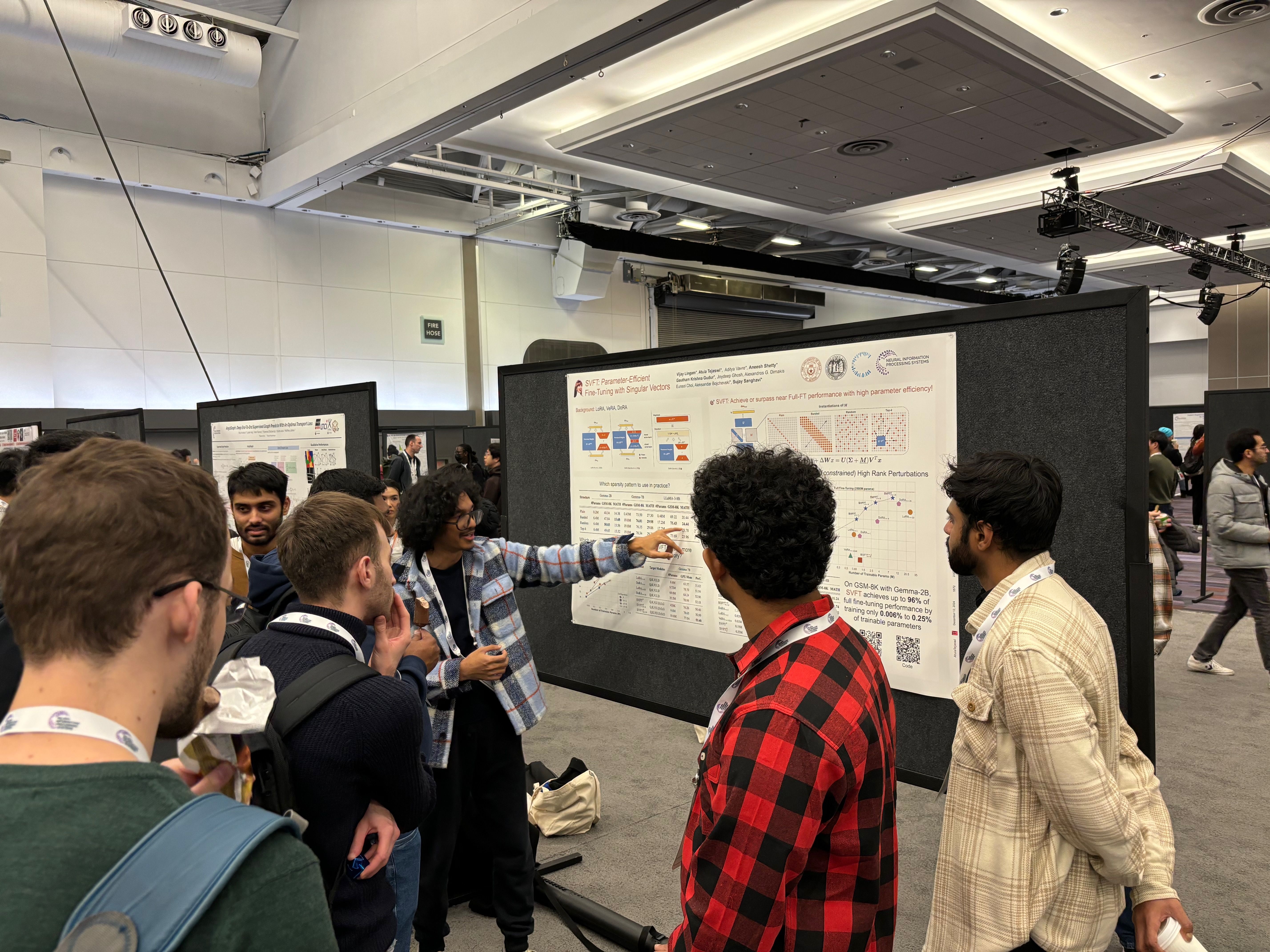

A few posters from Thursday’s sessions.

Xinyu Yuan presenting scCello

One of the busiest posters this session was for the xLSTM paper mentioned in Sepp Hochreiter’s invited talk.

Vijay Lingam presenting SVFT

Tomorrow will be the last day of the main conference track, as the workshops and competitions take place on Saturday and Sunday.

The East building poster hall

Lidong Zhou — Chief Scientist of Microsoft Asia Pacific as well as an ACM Fellow and IEEE Fellow — provided a systems perspective on AI and scaling.

After a brief intro recapping the industry’s progress since the most recent AI winter, Zhou contrasted the AI view on scaling (where it is not unusual to predict scaling laws out several orders of magnitude) with the systems view (“you can’t scale by throwing hardware at the problem forever”). Zhou stated that increasing scale comes with increasing complexity, and requires designing new systems and solving new problems.

The good news: improvements in AI can be used to improve systems themselves, in a symbiotic relationship.

Zhou laid out three core ambitions, each with its own grand challenge. In brief:

- Efficiency: better architectures and modelling approaches are possible (e.g. ternary instead of floating point inference), but to perform best they will need specialised hardware. It’s hard to build that hardware until the architectures are competitive.

- Trust: leveraging formal methods to enable AI to think and reason about systems, making it easier to build trusted and verified systems.

- Infusion: building “AI-infused” systems, harnessing new capabilities to improve systems at the design level.

To dig into some of the research highlights Zhou mentioned, see BitNet (ternary representations) and Verus (formal verification).
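The sketch below gives a flavour of ternary inference. It follows the absmean recipe as we understand it from the BitNet b1.58 paper: scale a weight tensor by its mean absolute value, then round and clip to {-1, 0, +1}. Real implementations also quantise activations and pack weights into low-bit storage, and both steps are omitted here. Function names are hypothetical. The payoff shows up in `ternary_dot`, where weight multiplications become additions and subtractions, the kind of operation specialised hardware could exploit.

```python
def absmean_ternary(weights, eps=1e-8):
    """Quantise weights to {-1, 0, +1} with a single per-tensor scale gamma."""
    gamma = sum(abs(w) for w in weights) / len(weights)
    q = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return q, gamma

def ternary_dot(q, gamma, activations):
    # No weight multiplications: each activation is added, subtracted, or
    # skipped, and the result is rescaled once by gamma.
    acc = 0.0
    for qi, a in zip(q, activations):
        if qi == 1:
            acc += a
        elif qi == -1:
            acc -= a
    return gamma * acc

w = [0.4, -0.05, -0.9, 0.1]
q, gamma = absmean_ternary(w)
print(q, gamma)
print(ternary_dot(q, gamma, [1.0, 2.0, 3.0, 4.0]))
```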

Kaggle’s talk included an announcement of an exciting new million-dollar prize for AI software engineering agents.

Kaggle CEO D. Sculley kicked off the session with a primer on empirical rigour, covering what happens when assumptions about data are violated in practice, and how to deal with concerns around leakage and contamination.

D. Sculley discussing empirical rigour

After reviewing pros and cons of static benchmarks and community leaderboards for comparing methods, he explained some ways in which Kaggle have mitigated these issues in their competitions.

One extremely effective mitigation: requiring researchers (or competition participants) to submit their models before the test data is generated. In the CAFA 5 Protein Function Prediction competition, for example, this meant gathering predictions before measurements for the test set proteins were made in the lab.
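The mitigation amounts to a simple protocol: freeze predictions at a deadline, then score them only against labels that did not exist yet. Below is a minimal sketch. The SHA-256 commitment is our own addition to make "frozen" checkable, not something described in the talk. The protein IDs, labels and dates are hypothetical.

```python
import hashlib
import json
from datetime import date

def freeze(predictions):
    # Canonical JSON, so the same predictions always give the same digest.
    blob = json.dumps(predictions, sort_keys=True).encode()
    return hashlib.sha256(blob).hexdigest()

def score_prospectively(predictions, digest, labels, deadline):
    """labels maps item_id -> (label, measured_on). Only labels measured after
    the submission deadline count, so they cannot have leaked into training."""
    if freeze(predictions) != digest:
        raise ValueError("predictions changed after the deadline")
    eligible = [k for k, (_, measured) in labels.items()
                if measured > deadline and k in predictions]
    if not eligible:
        return float("nan")
    correct = sum(predictions[k] == labels[k][0] for k in eligible)
    return correct / len(eligible)

# Hypothetical submission, frozen at the deadline.
preds = {"P1": "binding", "P2": "catalysis", "P3": "transport"}
digest = freeze(preds)
deadline = date(2023, 6, 1)

# Lab measurements arrive later; P1 was already known, so it is excluded.
labels = {
    "P1": ("binding", date(2023, 1, 5)),
    "P2": ("binding", date(2023, 9, 1)),
    "P3": ("transport", date(2023, 10, 2)),
}
print(score_prospectively(preds, digest, labels, deadline))
```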

Sculley was joined on stage first by Carlos Jimenez and John Yang of SWE-bench (a benchmark for automated code-generation systems measuring their ability to resolve GitHub issues), and then by Databricks cofounder Andy Konwinski.

D. Sculley, Carlos Jimenez, John Yang, and Andy Konwinski.

After Jimenez and Yang explained SWE-bench, Konwinski posted a tweet announcing the million-dollar challenge live on-stage.

I’ll give $1M to the first open source AI that gets 90% on this sweet new contamination-free version of SWE-bench - kprize.ai.

Andy Konwinski’s live-tweet during the session

For more details on the prize, go to kprize.ai or the Kaggle competition page.

Fei-Fei Li’s talk packed out the largest hall in the venue. Having revolutionised the field of computer vision through the ImageNet dataset, Li now detailed moving from seeing to doing, through improved spatial intelligence.

Li stated that robots today are still pretty brittle, and there remain significant gaps between experiments and real-world applications of robotics. She gave an overview of several of her lab’s research projects focused on data-driven work in robotic learning, including multi-sensory datasets like ObjectFolder, which catalogues sight/touch/sound data for large numbers of everyday objects, as well as some of her recent World Labs work on creating coherent 3D world models.

The talk ended on a positive note, with Li affirming her belief that AI will augment humans, rather than replacing them.

At Wednesday morning’s invited talk, Sepp Hochreiter — whose 1991 thesis introduced the LSTM architecture — spoke about Industrial AI.

Sepp Hochreiter

By comparison with historical technologies like the steam engine and the Haber-Bosch process for producing fertiliser, Hochreiter posited three phases of technology: basic development, scaling up, and industrialisation.

For AI research, under this paradigm, basic development included the invention of backpropagation and stochastic gradient descent as well as architectural innovations like CNNs and RNNs. The end of the scaling up phase is now approaching, with resources being focused on a few methods which scale well (ResNets and attention, mainly through the transformer architecture), as variety is compromised for the sake of scaling.

In the final phase — industrialisation — variety is once again introduced, as more application-specific techniques are developed. Hochreiter stated that Sutton’s bitter lesson is over, and that scaling is just one step on the road to useful AI. Echoing François Chollet and Yann LeCun’s views, he also described LLMs as “modern databases”, useful for knowledge representation but providing just one component of a larger and more complicated end system.

As for the next step, Hochreiter introduced some industrial applications of AI — in particular, approximate simulations of physical systems at a scale where numerical methods struggle, to replace traditional computational fluid dynamics or discrete element methods.

Lastly, he mentioned a new architecture developed by his lab, the xLSTM, which addresses some limitations of the original LSTM architecture while scaling better than transformers. More on the xLSTM in the poster session on Thursday evening.

xLSTM poster session

During the three-day main conference track, there will be six three-hour poster sessions, split across the East and West buildings. The first of these sessions was this morning.

With over 4,000 main track papers and close to 500 datasets and benchmarks papers to get through, each poster session hosts hundreds of papers.

Poster Session 1 West

There are 16 official competitions at NeurIPS 2024, across physics and scientific computing, generative AI and LLMs, multi-agent systems and RL, signal reconstruction and enhancement, and responsible AI and security.

Read our full roundup of the competitions.

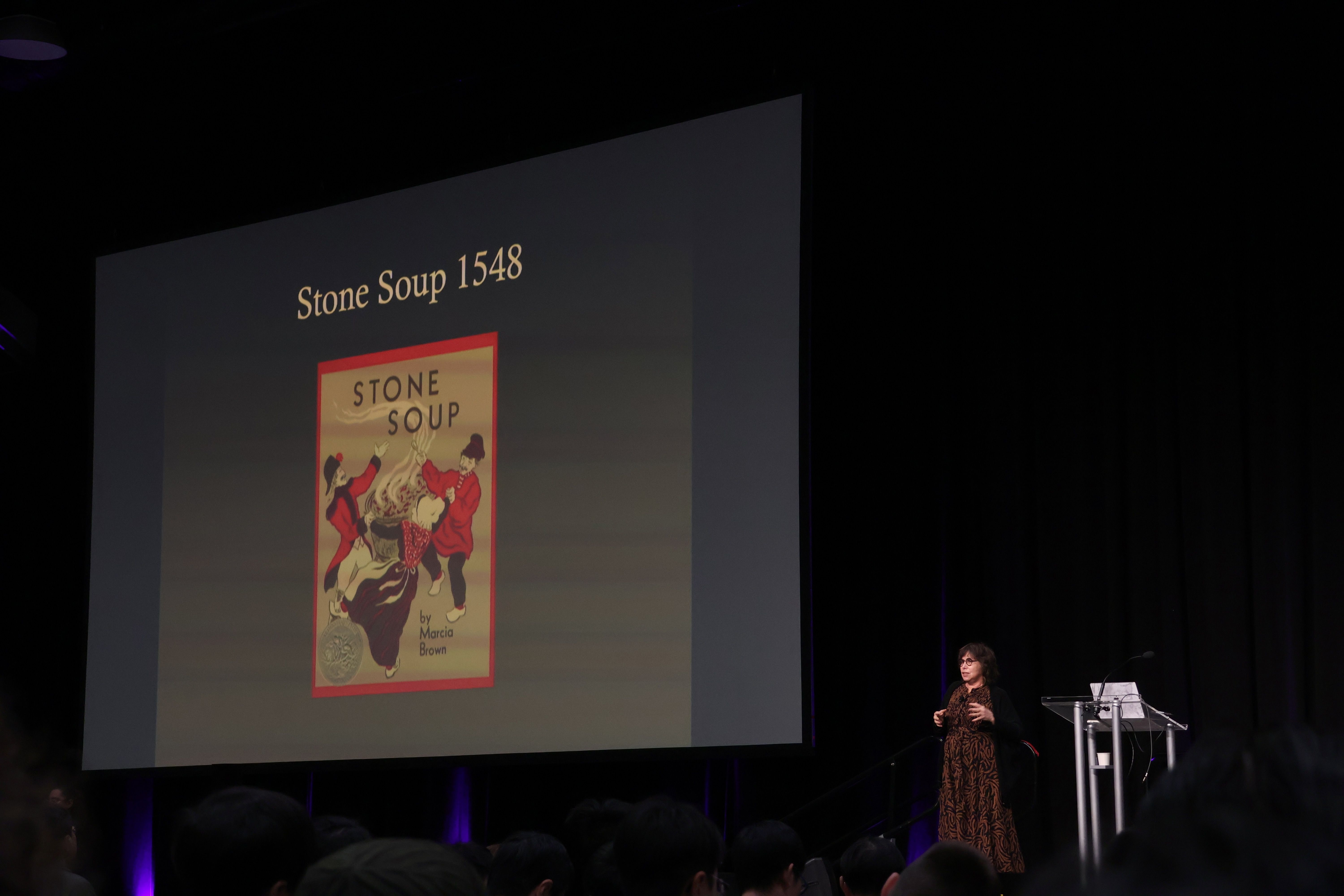

Alison Gopnik started her talk, the first of seven invited talks at this year’s conference, with reference to a few classic folk tales and modern science fiction stories.

In the Stone Soup folk tale, strangers in a village proclaim they’re making “stone soup”, and the villagers pitch in with additional ingredients. In the end, the soup is composed of everything but stones.

Gopnik drew a comparison to claims of AGI achieved via gradient descent and next-token-prediction that ignore the contributions of high-quality data, human labelling, prompt engineering, and more.

In fact, Gopnik questioned the concept of “general” intelligence, positing that several aspects of intelligence — exploitation, exploration, and transmission — are in tension with one another, and any instantiation of intelligence makes trade-offs between these aspects.

The talk contrasted AI approaches to development in children, making the case for empowerment-driven learning: maximising mutual information between actions and outcomes, and maximising diversity of actions.

Gopnik’s intrinsic motivation paper at NeurIPS last year examined this by comparing RL agents’ exploration strategies to those of children in an open-ended Minecraft environment.
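Empowerment is usually formalised as the channel capacity from an agent's actions to the resulting outcomes: the mutual information I(A; O), maximised over the action distribution. For a one-step, discrete toy setting, this can be computed with the classic Blahut-Arimoto algorithm, sketched below. The channels are hypothetical examples, not taken from Gopnik's talk or paper.

```python
import math

def mutual_information(p_a, channel):
    """I(A; O) in bits, where channel[a][o] = P(o | a)."""
    n_o = len(channel[0])
    p_o = [sum(p_a[a] * channel[a][o] for a in range(len(p_a))) for o in range(n_o)]
    mi = 0.0
    for a, pa in enumerate(p_a):
        for o in range(n_o):
            p = channel[a][o]
            if pa > 0 and p > 0:
                mi += pa * p * math.log2(p / p_o[o])
    return mi

def empowerment(channel, iters=200):
    """Blahut-Arimoto: max over p(a) of I(A; O). Returns (bits, p(a))."""
    n_a, n_o = len(channel), len(channel[0])
    p_a = [1.0 / n_a] * n_a
    for _ in range(iters):
        p_o = [sum(p_a[a] * channel[a][o] for a in range(n_a)) for o in range(n_o)]
        # KL divergence (in nats) of each action's outcome distribution from p(o).
        d = [sum(p * math.log(p / p_o[o]) for o, p in enumerate(channel[a]) if p > 0)
             for a in range(n_a)]
        # Reweight actions that produce more distinctive outcomes.
        w = [p_a[a] * math.exp(d[a]) for a in range(n_a)]
        z = sum(w)
        p_a = [x / z for x in w]
    return mutual_information(p_a, channel), p_a

identity = [[1.0 if a == o else 0.0 for o in range(4)] for a in range(4)]
no_control = [[1.0, 0.0], [1.0, 0.0]]
noisy = [[0.9, 0.1], [0.1, 0.9]]
for name, ch in [("identity", identity), ("no_control", no_control), ("noisy", noisy)]:
    print(name, round(empowerment(ch)[0], 4))
```

Four actions with four distinct, deterministic outcomes give 2 bits. If every action leads to the same outcome, empowerment is 0, and noise in between lowers it.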

In their opening remarks, the conference chairs noted NeurIPS’ continuing growth.

This year’s conference hit another new record for registered participants, and submissions to the (relatively new) datasets & benchmarks track once again doubled year-on-year.

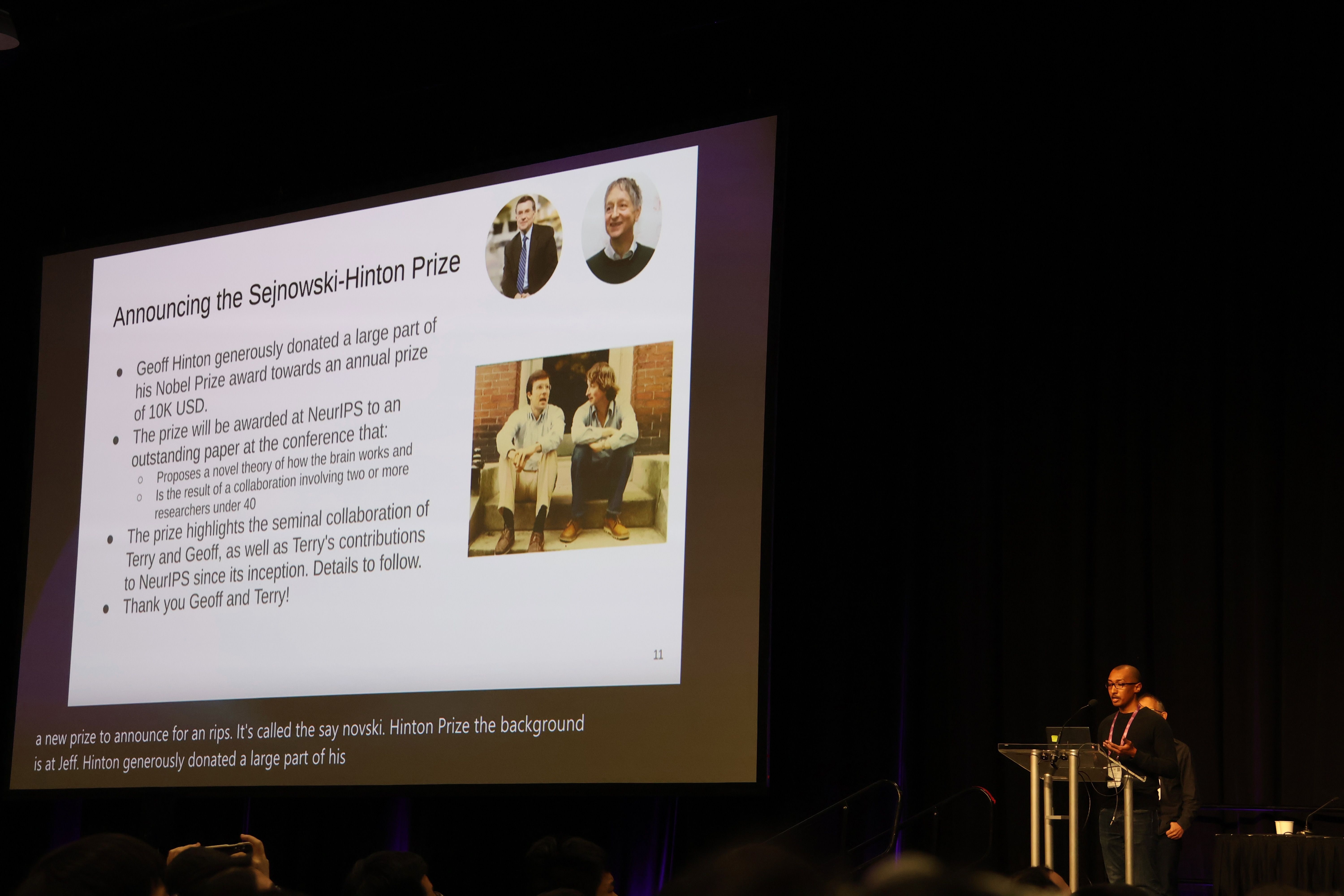

A new prize was announced: the Sejnowski-Hinton Prize, a $10k annual prize to an outstanding NeurIPS paper proposing a novel theory of how the brain works. The prize is funded by a donation from Geoffrey Hinton.

General Chair Lester Mackey announcing the Sejnowski-Hinton Prize

This year’s best paper awards:

- Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction by Keyu Tian, Yi Jiang, Zehuan Yuan, Bingyue Peng, and Liwei Wang.

- Stochastic Taylor Derivative Estimator: Efficient amortization for arbitrary differential operators by Zekun Shi, Zheyuan Hu, Min Lin, and Kenji Kawaguchi.

Runner-up awards:

- Not All Tokens Are What You Need for Pretraining by Zhenghao Lin, Zhibin Gou, Yeyun Gong, Xiao Liu, Yelong Shen, Ruochen Xu, Chen Lin, Yujiu Yang, Jian Jiao, Nan Duan, and Weizhu Chen.

- Guiding a Diffusion Model with a Bad Version of Itself by Tero Karras, Miika Aittala, Tuomas Kynkäänniemi, Jaakko Lehtinen, Timo Aila, and Samuli Laine.

Program Chair Chen Zhang announcing the best paper awards

This year’s datasets and benchmarks track best paper award goes to The PRISM Alignment Dataset: What Participatory, Representative and Individualised Human Feedback Reveals About the Subjective and Multicultural Alignment of Large Language Models by Hannah Rose Kirk, Alexander Whitefield, Paul Rottger, Andrew M. Bean, Katerina Margatina, Rafael Mosquera-Gomez, Juan Ciro, Max Bartolo, Adina Williams, He He, Bertie Vidgen, and Scott Hale.

The sponsor exhibit hall opened today, and will be open until 5pm on Thursday. Major sponsors include the big tech companies and several quant trading firms (full sponsor list here).

The sponsor exhibit hall

Several sponsor booths had live robot demos.

Matic's household robot

Unitree's G1 humanoid robot

Booths offering barista-made coffee were particularly popular! These included G-Research and Recogni. Jump Trading combined the best of both worlds with their robot barista.

Jump Trading's robot barista

So you’ve trained an LLM — now what? The meta-generation algorithms tutorial introduced various approaches for improving performance by scaling test-time compute.

It started with a brief introduction to strategies for generating strings of tokens from LLMs, through both the lenses of decoding as optimisation and optimisation as sampling.

It then introduced meta-generation as a method that:

- Takes advantage of external information during generation

- Calls the generator more than once to search for good sequences.

Meta-generation methods can be sequential (like chain-of-thought), parallel (like rejection sampling), search-based, and can incorporate external information like feedback from a verifier when generating code.

Sean Welleck giving an overview of meta-generation algorithms
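Here is a minimal sketch of parallel meta-generation, assuming the generator is any callable that returns an answer string. It calls the generator n times independently and optionally keeps only the answers an external verifier accepts (rejection sampling). It then takes a majority vote among the survivors. The canned outputs stand in for real model samples, and the names are hypothetical.

```python
from collections import Counter
from typing import Callable, List, Optional

def parallel_meta_generate(
    generate: Callable[[int], str],
    n: int,
    verify: Optional[Callable[[str], bool]] = None,
) -> Optional[str]:
    """n independent generator calls (batchable in practice, sequential here),
    an optional verifier filter (rejection sampling), then a majority vote."""
    candidates: List[str] = [generate(i) for i in range(n)]
    if verify is not None:
        candidates = [c for c in candidates if verify(c)]
    if not candidates:
        return None
    return Counter(candidates).most_common(1)[0][0]

# Hypothetical canned outputs standing in for n sampled LLM answers.
outputs = ["42", "41", "42", "7", "41", "41"]
print(parallel_meta_generate(lambda i: outputs[i], n=len(outputs)))  # 41
print(parallel_meta_generate(lambda i: outputs[i], n=len(outputs),
                             verify=lambda s: int(s) % 2 == 0))     # 42
```

The second call shows how external information changes the outcome: the verifier discards the majority answer before the vote.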

Beyond the plethora of general inference-time efficiency improvements like speculative decoding and quantisation, the efficiency concerns for meta-generation depend on the specifics of each strategy.

Efficient meta-generation strategies are those that are amenable to:

- Parallelisation, allowing for batched sampling of trajectories.

- Prefix sharing, allowing re-use of key-value caches across multiple model calls.

Hailey Schoelkopf presenting efficient generation
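The following back-of-the-envelope sketch shows why prefix sharing matters. It counts the token positions whose key-value cache entries must be computed when several trajectories are sampled from the same prompt, with and without reuse. A prefix trie captures the reuse, since identical prefixes sit at identical positions. It ignores memory layout and cache eviction, which real serving systems must handle. The names and token sequences are hypothetical.

```python
def tokens_without_sharing(trajectories):
    # Every trajectory recomputes keys/values for its whole sequence.
    return sum(len(t) for t in trajectories)

def tokens_with_prefix_sharing(trajectories):
    # Identical prefixes have identical key/value entries, so each distinct
    # prefix is computed once: count the nodes of a prefix trie.
    trie, computed = {}, 0
    for t in trajectories:
        node = trie
        for tok in t:
            if tok not in node:
                node[tok] = {}
                computed += 1
            node = node[tok]
    return computed

prompt = ["Q", ":", "2", "+", "2"]
trajectories = [prompt + ["=", "4"], prompt + ["=", "5"], prompt + ["is", "4"]]
print(tokens_without_sharing(trajectories), tokens_with_prefix_sharing(trajectories))  # 21 10
```

The saving grows with the number of samples and the length of the shared prompt, which is why parallel strategies with long common prefixes pair well with KV-cache reuse.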

The tutorial finished with a wide-ranging panel discussion with topics including OpenAI’s o1 model, the extent to which additional training-time compute can obviate the need for sophisticated inference-time methods, the tractability of meta-generation on domains lacking robust external verification, and the role of academic labs in running compute-constrained experiments.

The role of hardware was also discussed, with one panelist suggesting that more inference-targeted compute (vs hardware optimised for training) would be beneficial in making efficient and large-scale meta-generation easier.

Moderator: Ilia Kulikov. Panelists: Nouha Dziri, Beidi Chen, Rishabh Agarwal, Jakob Foerster, and Noam Brown.

The slides are available on the tutorial website, as is the TMLR Survey Paper on which the tutorial is based.

NeurIPS 2024 begins tomorrow, a day later than originally planned. The conference was rescheduled due to limited hotel availability on Sunday night, when Vancouver hosted the final concert of Taylor Swift’s Eras tour.

Early arrivals collecting their badges

This year’s venue, the Vancouver Convention Centre, is spread across two buildings which are linked by an underground walkway. The East building, the older of the two, juts out into Vancouver Harbour.

The East building

The more modern West building, added in 2009, is fully covered by a green roof and borders the seaplane terminal.

The West building