NeurIPS 2024 was, once again, the largest NeurIPS ever. In fact, it was possibly the largest in-person academic AI conference ever — with 16,777 in-person registered participants, 4,037 main track papers, and 460 datasets and benchmarks papers.

Now that the conference has wrapped up, we’ve summarised some of the themes we spotted at NeurIPS this year in Vancouver.

In our highlights from last year’s NeurIPS we called out efficiency, data curation, openness, and benchmarking as key topics, while acknowledging the focus on LLMs and diffusion models throughout the conference.

All of these topics remained prominent at this year’s conference, though the focus has shifted somewhat.

Inference-time compute & efficiency

NeurIPS 2023’s discussions on efficiency were mostly about leveraging smaller, or less resource-intensive, models. Specific highlights included quantisation, data curation, adapters, and other approaches that reduced resources needed for training, fine-tuning, or inference.

In 2024, the core focus appears to have shifted to expending extra resources at inference time (also referred to as “test time”) to improve generation quality. While there is still a healthy field around efficient models for the purpose of local deployment on edge devices, there also seemed to be a general acknowledgement that returns from scaling pre-training are diminishing, and that the next big improvements will come from scaling inference-time compute.

Inference-time compute was a recurring theme, discussed in depth at the meta-generation tutorial, highlighted in Ilya Sutskever’s Test of Time Award talk and Noam Brown’s Math-AI workshop talk, and quantified to some extent in Sean Welleck’s talk on inference scaling laws, among other sessions.

OpenAI’s o1, Qwen’s QwQ, and Google DeepMind’s AlphaProof have shown that additional inference-time compute for LLMs can be leveraged for better performance on certain tasks.


This could have interesting economic implications, alluded to by Noam Brown (of OpenAI/o1) during his talk on Saturday. In discussing inference scaling laws, Brown mentioned that in some domains, scaling inference-time compute by a factor of 15 has the same impact on final performance as scaling train-time compute by a factor of 10, and that for important problems (e.g. finding a proof of the Riemann hypothesis, improving solar panel technology, or discovering better drugs) this inference cost is easy to justify. For these problems, the cost society is willing to pay per solution is high.

It’s not clear, however, that this applies to other domains where LLMs are currently being deployed or tested. In Brown’s hypothetical example, increasing o1 query cost by a factor of 15 could make a meaningful difference for low-value high-volume queries. This is a departure from the scaling trends in LLMs so far, where training costs have been amortised across users and over all queries of a model.

Inference-time scaling does not have this amortisation property, and so does not benefit from economies of scale to the same extent. Granted, there are still benefits to be had from batching queries, and for locally-running models it might be possible to have end-users implicitly pay the inference cost in the form of increased power draw or reduced battery life. But if this is the primary scaling direction for the foreseeable future, the near-term economics of high-volume deployment could look very different.
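The amortisation argument can be made concrete with a back-of-the-envelope cost model. The sketch below is purely illustrative: the function name `cost_per_query` and all dollar figures are assumptions invented for this example, not numbers from Brown's talk or from any provider. Only the factor of 15 comes from the discussion above.

```python
def cost_per_query(training_cost: float, num_queries: int,
                   inference_cost_per_query: float) -> float:
    """Average cost per query: amortised training share plus inference cost."""
    return training_cost / num_queries + inference_cost_per_query


# Hypothetical figures, chosen only to illustrate the shape of the trade-off.
TRAINING_COST = 100_000_000.0  # one-off training spend (assumed)
BASE_INFERENCE = 0.01          # inference cost per query (assumed)
SCALE = 15                     # inference-time compute multiplier from the text

for n in (10**6, 10**9, 10**12):
    base = cost_per_query(TRAINING_COST, n, BASE_INFERENCE)
    scaled = cost_per_query(TRAINING_COST, n, BASE_INFERENCE * SCALE)
    print(f"{n:>17,} queries: base ${base:.4f}, 15x inference ${scaled:.4f}")
```

Under these assumed numbers, the training share of each query shrinks towards zero as volume grows, while the inference term does not. At high volume the per-query cost is almost entirely inference, so a 15x increase in inference compute raises it by close to 15x.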

A shift to inference-time scaling also has potential implications for hardware providers. NeurIPS this year was sponsored by several such companies (including AMD, Cerebras, Qualcomm, Recogni, and SambaNova), and many of these firms focused on inference performance in their marketing messages. Some even build hardware that’s specifically optimised for inference — as do providers like Groq, Etched, and Positron, which did not have a booth at NeurIPS this year.

For instance, Recogni’s chip uses a logarithmic number system, allowing highly efficient inference for a variety of model architectures, while being unsuitable for training. Etched’s Sohu chip has an even narrower specialisation, only supporting inference, and only for transformer-based architectures, allowing it to achieve extremely high throughput in this particular use case. Positron’s systems make a similar trade-off, but leverage FPGAs rather than fully custom ASIC chips. Groq’s approach, also designed for high-throughput and high-efficiency inference, parallelises inference across a large array of its LPU chips, each of which individually has a small allocation of only very fast memory.

These are in contrast to NVIDIA’s general-purpose GPUs, which generally support both training and inference workloads.

SambaNova and Cerebras booths at NeurIPS 2024

Some of these specialised inference chips are already available, while others are still in development and will ship over the next year or two.

Benchmarks and real-world performance

Last year we noted concerns around leakage and Goodhart’s Law with static benchmarks. Today there is still a marked difference between models’ scores on static benchmarks and their usefulness for real-world applications, and it remains to be seen how much of this gap can be bridged by application-specific product development, and how much is caused by inherent limitations of current models.

One researcher working on medical applications told us frontier research is currently “too expensive for academia” and that “a lot of the work right now [is] chasing the hype. I don’t think many of the papers will stand the test of time”.

Andy Konwinski’s partnership with Kaggle to launch the K Prize, a “contamination-free” version of SWE-Bench, is one of several initiatives aiming to address the shortcomings of static benchmarks. With a $1m prize for the first AI model that can reliably resolve over 90% of future GitHub issues, this will provide a great real-world test of state-of-the-art models on software development tasks.

The ARC Prize offers another valuable approach, with participants evaluated on a fully private test set. Despite impressive progress, performance on this dataset has not saturated at the same rate as static benchmarks.

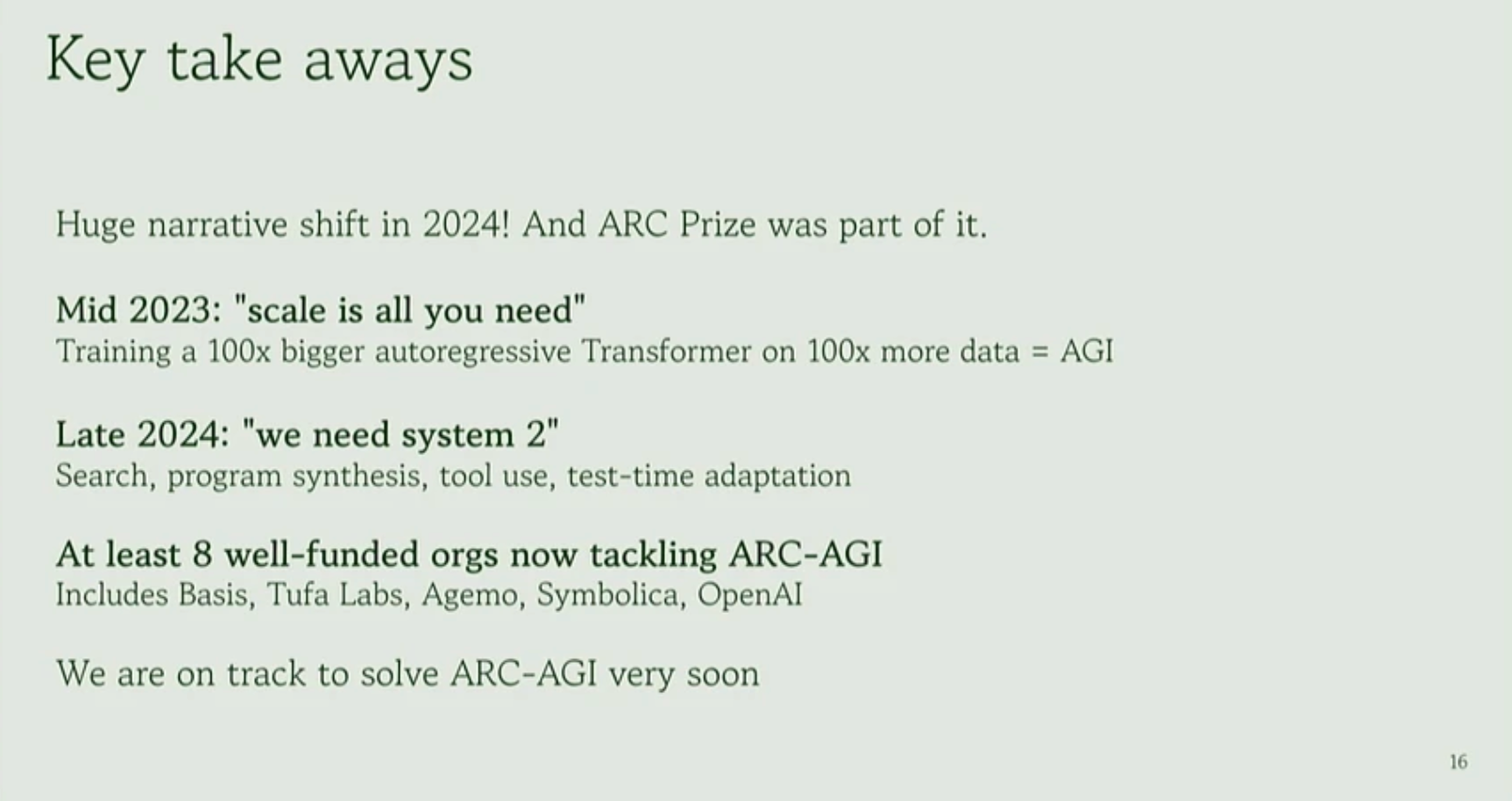

In his discussion of the progress on the ARC Prize on Sunday, François Chollet said that the dominant narrative in the larger ML community had changed from “scale is all you need” in mid-2023 to “we need system 2” by late 2024.

Slide from François Chollet's presentation at the 'System 2 Reasoning At Scale' workshop

Chollet spoke about how this challenge was specifically designed to resist memorisation, and discussed the varied approaches taken by leading participants — largely a mixture of program synthesis and test-time training.

In his talk, Chollet also stated he expected significant progress on the current ARC dataset soon. This was likely a nod to OpenAI’s o3 model, announced a few days later, which scored significantly higher than any other model on the ARC Prize’s public and “semi-private” leaderboard. The use of additional inference-time compute is clear from the o3 release blog post: the model spent around $20 per ARC task and scored 75.7% in its “high-efficiency” mode, while using over 100x as much inference compute to achieve 87.5% in its “low-efficiency” mode.

Beyond attention-based autoregressive language models

The neuro-symbolic approach to program synthesis, which was popular among leading open ARC challenge solutions and uses an LLM to guide search through program space, was also seen elsewhere at the conference. In applications such as mathematics and programming, there were several approaches for partnering LLMs with verifiers to iteratively build proofs or programs.
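The general shape of such a propose-and-verify loop can be sketched in a few lines. This is a toy illustration under loud assumptions, not any ARC participant's method: `propose_candidates` is a hypothetical stand-in that simply enumerates small linear programs where a real system would sample from an LLM, and `verify` checks candidates against input-output examples in place of a proof checker or test suite.

```python
import itertools
from typing import Callable, Iterator, Optional

Program = Callable[[int], int]
Example = tuple[int, int]


def verify(program: Program, examples: list[Example]) -> bool:
    """Verifier: accept a program only if it reproduces every example."""
    return all(program(x) == y for x, y in examples)


def propose_candidates() -> Iterator[tuple[str, Program]]:
    """Hypothetical proposer. Enumerates programs of the form a*x + b;
    a real system would sample candidates from an LLM instead."""
    for a, b in itertools.product(range(-3, 4), repeat=2):
        yield f"lambda x: {a}*x + {b}", (lambda x, a=a, b=b: a * x + b)


def search(examples: list[Example], budget: int) -> Optional[str]:
    """Check up to `budget` proposals; return the first verified program's source."""
    for source, program in itertools.islice(propose_candidates(), budget):
        if verify(program, examples):
            return source
    return None


examples = [(0, 1), (1, 3), (2, 5)]
print(search(examples, budget=10))  # small budget
print(search(examples, budget=49))  # budget covering the whole candidate space
```

The `budget` parameter plays the role of inference-time compute: with a small budget the search may stop before reaching a verified program, while a larger budget lets it explore more of the candidate space.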

This was part of a larger research trend of going beyond directly using pre-trained foundation language models to solve problems, and instead using them as just one component in a larger system which also relies on older, well-established techniques (like forms of search or evolutionary processes) to improve upon prior work in a significant way.

This trend was also seen among startups presenting their visions, with organisations like Sakana presenting their multi-agent and evolution-based approaches, and Basis giving an overview of their multi-faceted approach.

While transformers were still clearly the most popular core architecture for language models, alternatives appear to be gaining momentum. Sepp Hochreiter gave a brief overview of the xLSTM architecture in his invited talk, and there were several papers building on state-space models like Mamba.

The general consensus seemed to be that significant developments in the next few years are unlikely to result from more pre-training. Even so, there remained widespread optimism about the field as a whole, and it felt clear that researchers had no shortage of ideas for other methods to apply to the powerful pre-trained models that exist today.