This will be the last of our daily posts from NeurIPS this year. We’ll follow up next week with an analysis of the competitions track, which takes place on Friday and Saturday, as well as some overall highlights of the conference. Sign up to our mailing list to get notified when these are published.

Systems for Foundation Models, and Foundation Models for Systems

Christopher Ré’s talk on Systems for Foundation Models and Foundation Models for Systems started off with an example using Foundation Models for data cleaning in a few-shot setting.

He gave an overview of hardware accelerators and their application to Attention-based architectures. As accelerators get faster, memory often becomes the bottleneck, at which point memory locality becomes critical. This looks a lot like the problem of reducing I/O in database engines, and the FlashAttention algorithm borrows some insights from that community to speed up Transformers.

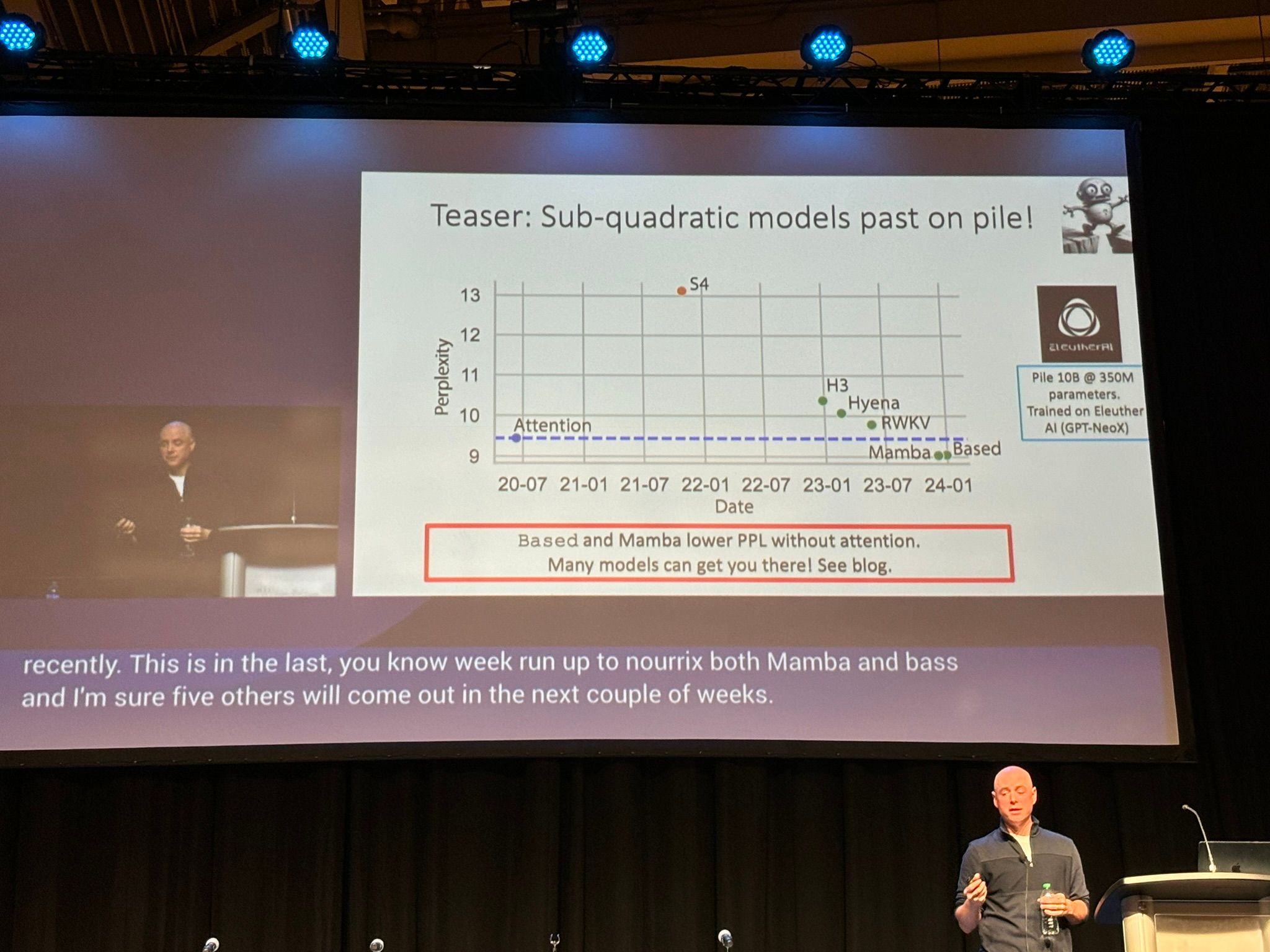
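To make the memory-locality idea more concrete, here is a minimal sketch of attention computed one block of keys and values at a time, using a running maximum and running normaliser so that the full score vector is never held in memory at once. This is only an illustration of the blockwise "online softmax" trick for a single query vector, written in plain Python. It is not the FlashAttention kernel itself, and the function names and `block_size` parameter are our own choices for the example.

```python
import math


def _scaled_scores(q, keys):
    # Dot product of the query with each key, scaled by 1/sqrt(d).
    scale = 1.0 / math.sqrt(len(q))
    return [scale * sum(a * b for a, b in zip(q, k)) for k in keys]


def naive_attention(q, keys, values):
    """Reference version: computes every score before normalising."""
    scores = _scaled_scores(q, keys)
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(dim)]


def tiled_attention(q, keys, values, block_size=2):
    """Same output, but visits keys/values one block at a time.

    Only a running max, a running normaliser and a running weighted sum
    are kept between blocks (hypothetical helper for illustration).
    """
    dim = len(values[0])
    running_max = -math.inf
    running_sum = 0.0
    acc = [0.0] * dim
    for start in range(0, len(keys), block_size):
        k_blk = keys[start:start + block_size]
        v_blk = values[start:start + block_size]
        scores = _scaled_scores(q, k_blk)
        new_max = max(running_max, max(scores))
        # Rescale earlier partial results to the new reference maximum.
        rescale = math.exp(running_max - new_max)
        weights = [math.exp(s - new_max) for s in scores]
        running_sum = running_sum * rescale + sum(weights)
        acc = [a * rescale + sum(w * v[j] for w, v in zip(weights, v_blk))
               for j, a in enumerate(acc)]
        running_max = new_max
    return [a / running_sum for a in acc]
```

Both functions return the same attention output up to floating-point error. The tiled version only ever touches `block_size` keys and values at a time, which is the property that lets a real kernel keep its working set in fast on-chip memory.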

Another alternative is to remove Attention entirely. This is the direction taken by state space models such as S4. Early versions of these models outperformed Attention-based models on certain tasks, but significantly underperformed on NLP tasks, as measured by perplexity on the Pile for a model of at most 350M parameters.
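As a rough illustration of what a state space layer computes, the sketch below implements a discrete linear state space model in plain Python. It makes several simplifying assumptions that are ours rather than the talk's: a diagonal state matrix, a scalar input and output, and parameters `a`, `b`, `c` that are already discretised. The point it shows is that the same model can be run either as a step-by-step recurrence or as a single long convolution.

```python
def ssm_recurrent(a, b, c, inputs):
    """Run x_k = a * x_{k-1} + b * u_k, y_k = c . x_k with diagonal a."""
    state = [0.0] * len(a)
    outputs = []
    for u in inputs:
        state = [ai * si + bi * u for ai, si, bi in zip(a, state, b)]
        outputs.append(sum(ci * si for ci, si in zip(c, state)))
    return outputs


def ssm_kernel(a, b, c, length):
    """Convolution kernel K_j = sum_i c_i * a_i**j * b_i for j < length."""
    return [sum(ci * (ai ** j) * bi for ai, bi, ci in zip(a, b, c))
            for j in range(length)]


def ssm_convolutional(a, b, c, inputs):
    """Same outputs as ssm_recurrent, computed as a causal convolution."""
    kernel = ssm_kernel(a, b, c, len(inputs))
    return [sum(kernel[j] * inputs[k - j] for j in range(k + 1))
            for k in range(len(inputs))]
```

The recurrent form needs only a fixed-size state per step, while the convolutional form can process a whole sequence in parallel. Neither form builds a pairwise interaction matrix over all positions, which is what Attention does.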

Excitingly, in recent weeks, newer state space models like Mamba have crossed that threshold, and there’s a general feeling that more promising state space models are still to come.

For more on state space models, see Christopher Ré’s lab’s blog.

Online Reinforcement Learning in Digital Health Interventions

Susan Murphy presented some interesting real-world case studies of reinforcement learning used in digital health interventions: a physical activity coach app and an oral health coach app.

Rolling these out in practice, within the boundaries imposed by a clinical trial with real human users, required constraints on the action space and exploration procedures, as well as careful planning to ensure meaningful results given the relatively small sample size. To mitigate these issues, extensive mini-experiments were run in a simulated test-bed, in advance of the actual trial.

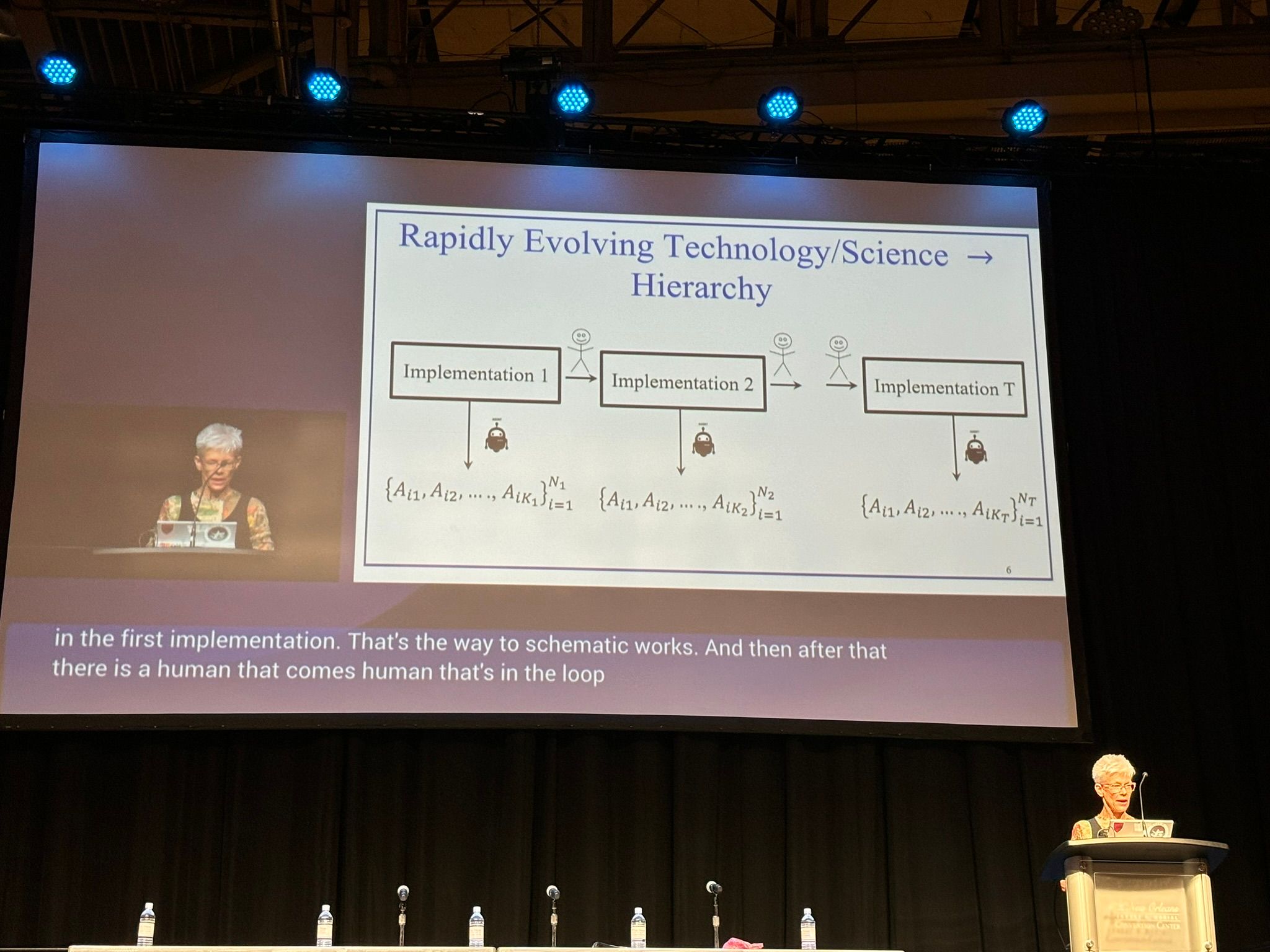

Another difficulty faced in this work is the human-in-the-loop step further up in the hierarchy, when the procedure itself is reviewed from one trial to the next. This review has to contend not only with distribution shift, but often also with more fundamental changes, like an expanded observation space when new sensors or other inputs are added.

Creative AI

New at NeurIPS this year is the Creative AI track.

One poster in this track today was the WHOOPS! benchmark, a new dataset composed of images that violate common-sense expectations, generated by human designers entering carefully chosen prompts into image generation tools. Examples include Albert Einstein using a smartphone, a candle burning in a closed bottle, and a wolf howling at the sun. Check out the WHOOPS! benchmark website for more examples.

Nitzan Bitton-Guetta presenting WHOOPS!

Posters

With 3,540 papers at NeurIPS this year, there were a lot of poster sessions. One poster in this afternoon’s session presented QuIP, a 2-bit LLM quantisation technique. As another sign of how quickly the field is moving this year, several of the QuIP authors announced QuIP# the week before the conference, further improving on QuIP and promising 2-bit quantised models competitive with 16-bit models, as well as releasing a full suite of 2-bit Llama 1 and Llama 2 models quantised using QuIP#.

Jerry Chee presenting the QuIP poster
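To give a sense of what "2-bit" means here, the sketch below quantises a list of weights to four levels using plain round-to-nearest on a uniform grid. This is deliberately the simplest possible baseline and is not the QuIP or QuIP# method. The function names and the per-list `lo`/`step` parameters are assumptions made for the example.

```python
def quantise_2bit(weights):
    """Map each weight to one of 4 codes (0..3) on a uniform grid."""
    lo, hi = min(weights), max(weights)
    levels = 3  # 2 bits give 4 codes, so 3 steps between min and max
    step = (hi - lo) / levels if hi > lo else 1.0
    codes = [min(levels, max(0, round((w - lo) / step))) for w in weights]
    return codes, lo, step


def dequantise(codes, lo, step):
    """Recover approximate weights from 2-bit codes."""
    return [lo + code * step for code in codes]
```

Each weight is stored in 2 bits instead of 16, an 8x reduction before counting the small overhead of `lo` and `step`. The price is a reconstruction error of up to half a step per weight, and reducing the impact of that error on model quality is the hard part that more sophisticated quantisation methods address.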

Read our summary of the competition track that took place over the last two days of the conference here, and keep an eye on our NeurIPS 2023 page for more updates.