Just a quick update, covering today’s invited talks. One more daily update to follow tomorrow, and then a competitions write-up sometime next week.

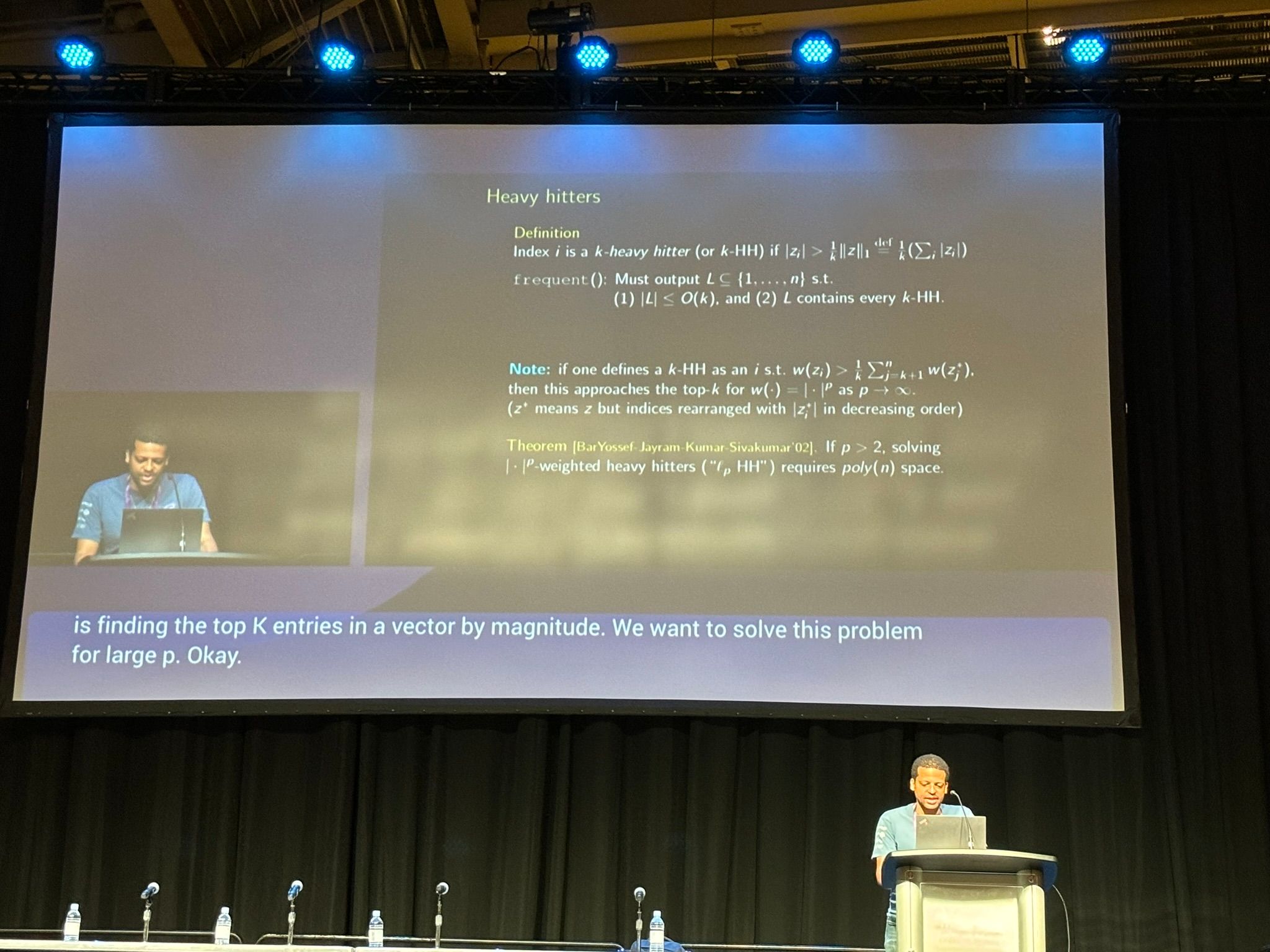

Sketching: core tools, learning-augmentation, and adaptive robustness

Jelany Nelson presented an introduction to Sketching, a variety of algorithms and data structures that compress data in way that is lossy but still allows for answering specific questions about the data with particular guarantees.

One simple motivating example is the desire to keep track of the k most frequent items in a stream of data, with memory usage scaling sublinearly in the total number of unique items in the stream. This can be solved using data structures like CountSketch with low memory usage, great update time, low failure probability, but slow query time. More recent methods improve on this with better asymptotic performance but bad constant factors.

These data structures also turn out to be useful for other problems, like speeding up interior point methods in linear programming, and potential improvements to the standard Attention mechanism in deep learning (as discussed in a recent paper introducing HyperAttention).

Jelany introduced some other tools like random projections and matrix sketching, shared an intuition of the hashing tricks and other methods that tend to be useful in this field, and gave a brief overview of the relevance to learning augmentation and adaptive robustness.

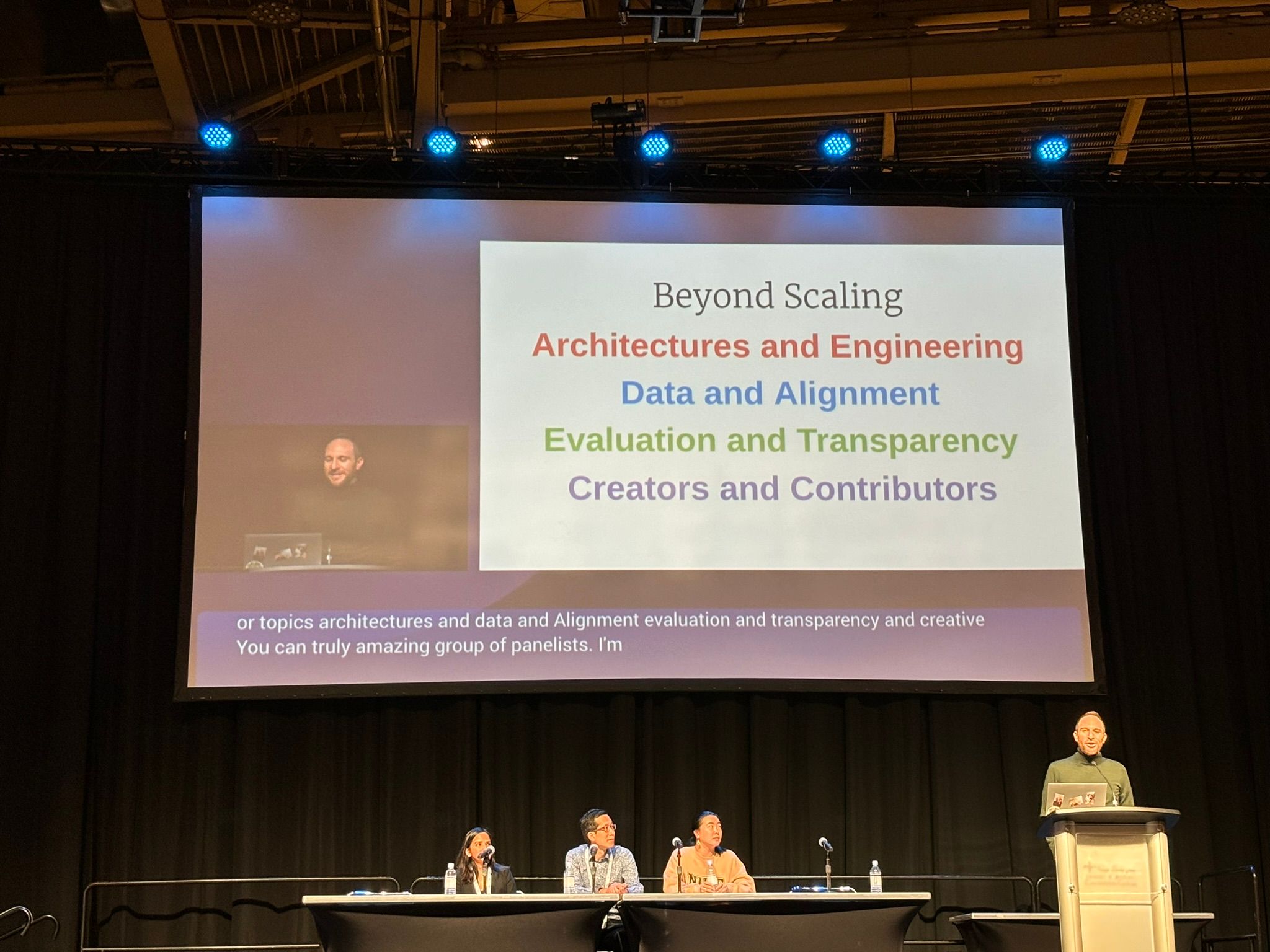

LLMs: Beyond Scaling

The afternoon panel on Beyond Scaling was moderated by Sasha Rush, and featured three speakers at the cutting edge of LLMs: Aakanksha Chowdhery, Angela Fan, and Percy Liang.

The discussion was dense with insights about LLM development. A few highlights:

- Operating at large scale makes everything harder, and comes with problems that aren’t encountered in single-GPU or single-pod training. Ensuring resilience, maximising goodput, and achieving high utilisation are all much more difficult.

- Non-open foundation models (with respect to training data/training code) present a fundamental problem for evaluation: how can you interpret a model’s performance on a benchmark if you don’t know what’s in the training data?

- We should continue to bridge the gap between annotators and users — no one is better placed to express preferences over alternative generations than users themselves.

- There will be room for both cloud-based and local LLMs. On-device LLMs are great at some tasks, and it’s useful to be able to go to cloud-based ones where necessary.

One useful resource that was mentioned is Angela Fan’s video on Llama 2.

Read our blog from the main conference’s third day here, and keep an eye on our NeurIPS 2023 page for more updates.