The ICML 2025 test of time award (for an impactful paper from ICML 2015) went to Sergey Ioffe and Christian Szegedy for their paper which introduced the Batch Normalization (“batch norm”) procedure, developed while the authors were both at Google.

Batch norm normalises the inputs to intermediate neural net layers, i.e. it transforms them so that each feature has zero mean and unit variance, and then applies a learned per-feature scale and shift. At train time, the normalisation statistics are computed over each minibatch. At inference time, normalisation instead uses the population statistics of the training set.
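The train/inference distinction can be sketched in a few lines of pure Python. This is a minimal sketch, not the paper's implementation: the class name `SimpleBatchNorm`, the `momentum` and `eps` parameters, and the use of an exponential moving average to estimate the population statistics are all assumptions borrowed from common framework practice. The paper itself describes computing the population statistics over the training set.

```python
import math

class SimpleBatchNorm:
    """Minimal batch norm over a (batch, features) input given as lists.

    Hypothetical sketch: population statistics are estimated with an
    exponential moving average (an assumption), not a separate pass
    over the training set.
    """

    def __init__(self, num_features, momentum=0.1, eps=1e-5):
        self.gamma = [1.0] * num_features  # learned per-feature scale
        self.beta = [0.0] * num_features   # learned per-feature shift
        self.running_mean = [0.0] * num_features
        self.running_var = [1.0] * num_features
        self.momentum = momentum
        self.eps = eps

    def __call__(self, batch, training):
        n = len(batch)
        out = [[0.0] * len(self.gamma) for _ in batch]
        for j in range(len(self.gamma)):
            col = [row[j] for row in batch]
            if training:
                # Train time: normalise with this minibatch's statistics
                mean = sum(col) / n
                var = sum((x - mean) ** 2 for x in col) / n
                # ...and fold them into the running population estimates
                m = self.momentum
                self.running_mean[j] = (1 - m) * self.running_mean[j] + m * mean
                self.running_var[j] = (1 - m) * self.running_var[j] + m * var
            else:
                # Inference: use the stored population estimates instead
                mean, var = self.running_mean[j], self.running_var[j]
            for i, x in enumerate(col):
                x_hat = (x - mean) / math.sqrt(var + self.eps)
                out[i][j] = self.gamma[j] * x_hat + self.beta[j]
        return out

bn = SimpleBatchNorm(num_features=2)
batch = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [4.0, 40.0]]
train_out = bn(batch, training=True)           # each column: zero mean, ~unit variance
eval_out = bn([[2.5, 25.0]], training=False)   # depends only on this example
```

In inference mode an example's output no longer depends on which other examples share its batch, so predictions are deterministic.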

The ICML 2015 Batch Normalization paper

After receiving the award, Sergey Ioffe gave a talk, starting with an explanation of the initial motivation behind the paper: figuring out why ReLUs worked better than sigmoids as activation functions, and what it would take for sigmoids to work well.

Impact

While the paper did end up enabling more widespread use of sigmoid activation functions[1], its impact was far more profound: batch norm enabled significantly faster and more efficient training, and it was followed by other normalisation procedures, including group norm, weight norm and instance norm, as well as layer norm, which became a key component of the 2017 Transformer architecture.

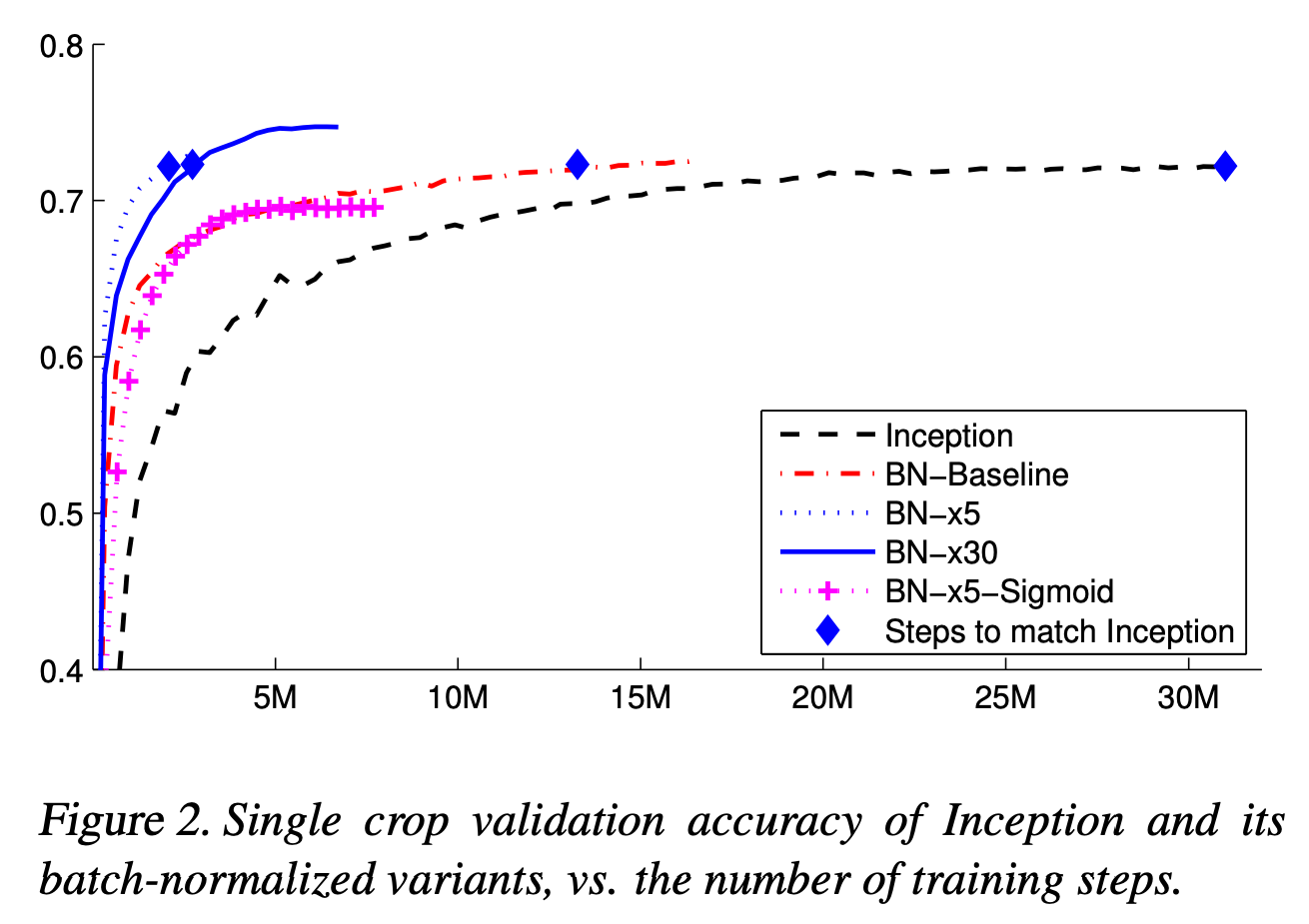

Figure 2 in the batch norm paper shows the batch-normalised model matching the accuracy of the baseline Inception model in 14 times fewer training steps; the baseline took around 30 days to train on a cluster of CPUs. This speedup came from adding batch norm and increasing the learning rate, and it was the addition of batch norm that made the higher learning rate usable.

The 14x training efficiency increase from batch norm + higher learning rate

Interpretation

This talk provided an interesting reminder of the empirical nature of deep learning research, as Ioffe described how the current understanding of why batch norm works is very different to the initial explanation of its mechanics.

He noted that while the 2015 paper attributed the success of batch norm to a reduction in “internal covariate shift”, later research suggests that the improvements come instead from a smoothing of the optimisation landscape. He pointed to the 2018 NeurIPS paper “How Does Batch Normalization Help Optimization?” by Santurkar et al. for an explanation of this phenomenon.

Scale Invariance

Ioffe also presented some analysis with implications for scale-invariant models more generally.

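Batch norm makes a layer's output invariant to the scale of the weights that feed into it: multiplying those weights by any positive constant leaves the normalised output unchanged. This scale invariance results in implicit learning-rate scheduling, because the gradient with respect to such weights shrinks in proportion to their norm, so as the weights grow during training, each update becomes smaller relative to the weights themselves.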
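A quick numerical check of this scale invariance, as a sketch: the toy minibatch, targets and squared-error loss below are hypothetical, chosen only for illustration, and gradients are estimated by central finite differences rather than autodiff. For a single linear unit followed by batch norm (without the learned scale and shift), it checks that scaling the weights leaves the loss unchanged, shrinks the gradient by the same factor, and that the gradient is orthogonal to the weights.

```python
import math

# Hypothetical toy minibatch: four 2-D inputs and regression targets
XS = [(1.0, 2.0), (0.5, -1.0), (-1.5, 0.3), (2.0, 0.7)]
YS = [1.0, -0.5, -1.2, 0.7]

def batch_norm(zs, eps=1e-8):
    """Normalise a batch of scalars to zero mean, unit variance.

    The tiny eps only guards against division by zero; it breaks exact
    scale invariance by a negligible amount.
    """
    mean = sum(zs) / len(zs)
    var = sum((z - mean) ** 2 for z in zs) / len(zs)
    return [(z - mean) / math.sqrt(var + eps) for z in zs]

def loss(w):
    """Mean squared error of the batch-normalised outputs of w . x."""
    zs = [w[0] * x0 + w[1] * x1 for x0, x1 in XS]
    zhat = batch_norm(zs)
    return sum((z - y) ** 2 for z, y in zip(zhat, YS)) / len(YS)

def grad(f, w, h=1e-6):
    """Central finite-difference gradient (adequate for two parameters)."""
    g = []
    for i in range(len(w)):
        wp, wm = list(w), list(w)
        wp[i] += h
        wm[i] -= h
        g.append((f(wp) - f(wm)) / (2 * h))
    return g

w = [0.8, -0.3]
w_big = [3.0 * x for x in w]  # same direction, three times the norm

g, g_big = grad(loss, w), grad(loss, w_big)
print(loss(w), loss(w_big))               # equal up to floating-point error
print(g[0] / g_big[0], g[1] / g_big[1])   # both ~3: gradient scales as 1/||w||
print(sum(a * b for a, b in zip(w, g)))   # ~0: gradient is orthogonal to w
```

Since the update size shrinks like 1/‖w‖ while the weights themselves grow, the relative step shrinks even faster, which is the implicit schedule described in the text.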

The same holds for scale-invariant models in general, and it creates an interaction between normalisation, weight decay and learning-rate scheduling: by keeping the weight norm in check, weight decay also changes the effective learning rate. For more discussion of this phenomenon, Ioffe pointed to “Layer Normalization” (Ba et al., 2016) and “L2 Regularization versus Batch and Weight Normalization” (van Laarhoven, 2017).
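The argument can be written out as a short derivation. This is a sketch assuming plain SGD with learning rate η and L2 weight decay with coefficient λ (symbols introduced here for illustration, not taken from the talk), for a weight vector w whose loss L is invariant to positive rescaling:

```latex
\begin{aligned}
L(c\,w) &= L(w) \quad \forall\, c > 0
  && \text{(scale invariance)} \\
w^\top \nabla L(w) &= 0, \qquad \nabla L(c\,w) = \tfrac{1}{c}\,\nabla L(w)
  && \text{(differentiate in } c \text{ at } c = 1 \text{, and in } w) \\
w_{t+1} &= (1 - \eta\lambda)\,w_t - \eta\,g_t, \qquad g_t = \nabla L(w_t)
  && \text{(SGD with weight decay)} \\
\|w_{t+1}\|^2 &= (1 - \eta\lambda)^2\,\|w_t\|^2 + \eta^2\,\|g_t\|^2
  && \text{(cross term vanishes since } w_t^\top g_t = 0) \\
\eta_{\text{eff}} &= \eta \,/\, \|w_t\|^2
  && \text{(step seen by } w_t/\|w_t\| \text{, to first order)}
\end{aligned}
```

With λ = 0, the fourth line shows that ‖w_t‖ can only grow, so η_eff decays without any explicit schedule. With λ > 0, weight decay shrinks ‖w_t‖ and so raises η_eff, which is why normalisation, weight decay and the learning-rate schedule cannot be tuned independently.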

Interactions

Ioffe ended the talk by discussing how batch norm, because it computes its statistics over each minibatch, creates additional interactions between training examples: each example's normalised output depends on the other examples in the same minibatch.
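A tiny illustration of this cross-example dependence, as a sketch: `batch_normalize` is a hypothetical helper implementing train-mode batch norm for a single feature (no learned scale or shift), and the minibatches are made up for illustration.

```python
import math

def batch_normalize(xs, eps=1e-5):
    """Train-mode batch norm for one feature, without learned scale/shift."""
    mean = sum(xs) / len(xs)
    var = sum((x - mean) ** 2 for x in xs) / len(xs)
    return [(x - mean) / math.sqrt(var + eps) for x in xs]

# The same example, 1.0, placed in two different (hypothetical) minibatches
out_a = batch_normalize([1.0, 2.0, 3.0])[0]
out_b = batch_normalize([1.0, 10.0, 11.0])[0]
print(out_a, out_b)  # different: the output depends on the batchmates

# Degenerate case: with a minibatch of one, the output collapses to zero
print(batch_normalize([5.0]))  # [0.0]
```

The last line also shows one well-known downside of minibatch statistics: with very small batches they become noisy or, at batch size one, uninformative.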

He pointed out that, while this can have undesirable side-effects in some cases, several more recent works deliberately exploit interactions between examples to improve training (notably SimCLR by Chen et al., 2020 and LongLLaMA by Tworkowski et al., 2023), and he speculated that this could be a key mechanism for improving future models.
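To make the idea of exploiting interactions between examples more concrete, here is a simplified sketch of the NT-Xent contrastive loss used in SimCLR, in which every other example in the minibatch acts as a negative. It is an illustration under assumptions (pure Python, toy 2-D embeddings, a temperature of 0.5, hypothetical helper names), not SimCLR's reference implementation; LongLLaMA's mechanism is different and is not shown.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def nt_xent(z, tau=0.5):
    """SimCLR-style NT-Xent loss over 2N embeddings (a sketch).

    z[k] and z[k + N] are two augmented views of the same example. For each
    anchor, its partner is the positive and every other embedding in the
    minibatch is a negative, so each term depends on the whole batch.
    """
    n2 = len(z)
    n = n2 // 2
    total = 0.0
    for i in range(n2):
        j = (i + n) % n2  # index of the positive partner
        logits = [cosine(z[i], z[k]) / tau for k in range(n2) if k != i]
        pos = cosine(z[i], z[j]) / tau
        total += math.log(sum(math.exp(s) for s in logits)) - pos
    return total / n2

# Hypothetical embeddings: in `aligned`, views 0/2 and 1/3 match
aligned = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]
swapped = [[1.0, 0.0], [0.0, 1.0], [0.1, 0.9], [0.9, 0.1]]
print(nt_xent(aligned) < nt_xent(swapped))  # True
```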

Footnotes

[1] As per the paper: “this enables the sigmoid nonlinearities to more easily stay in their non-saturated regimes, which is crucial for training deep sigmoid networks but has traditionally been hard to accomplish”