ICML 2025 wrapped up with 33 workshops spread across two days. Workshops allow researchers to share recent work in a less formal environment than the main conference, and each workshop focuses on a specific domain or area of research.

Based on anticipated attendance numbers in the conference app, the three most popular workshops across the two days were Multi-Agent Systems in the Era of Foundation Models: Opportunities, Challenges and Futures; Exploration in AI Today (EXAIT); and Foundation Models for Structured Data (FMSD).

Below are a few brief highlights from two of the workshops.

Foundation Models for Structured Data

This was the first ICML workshop on Foundation Models for Structured Data. It covered a broad range of topics related to pre-trained models for tabular and time-series data.

There was a generally shared view that foundation models for structured data are still in their infancy, with many promising directions for further work.

Andrew Gordon Wilson’s talk (“A Universal Approach to Model Construction”) included some advice on model selection (embrace a highly expressive hypothesis space in combination with a compression bias). He questioned the view that deep learning is ‘special’ compared to other machine learning approaches, and suggested that the success of overparameterisation observed in phenomena like double descent is not unique to deep learning.

For more on this view, see his ICML 2025 position paper Position: Deep Learning is Not So Mysterious or Different.

Andrew Gordon Wilson's position paper
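One way to picture “a highly expressive hypothesis space in combination with a compression bias” is a very high-degree polynomial whose coefficients are penalised more heavily the higher their order. The sketch below is a minimal illustration under that reading, not code from the talk or the paper: the function name, the degree of 20, and the geometric penalty schedule (`base_penalty * growth**k`) are all hypothetical choices.

```python
import numpy as np


def fit_order_penalised_poly(x, y, degree=20, base_penalty=1e-3, growth=2.0):
    """Ridge-style polynomial fit whose penalty grows with the order of each term.

    The degree-`degree` feature map is a deliberately expressive hypothesis space;
    the order-dependent penalty is a soft preference for simpler functions.
    """
    # Columns are 1, x, x^2, ..., x^degree
    features = np.vander(x, degree + 1, increasing=True)
    # Hypothetical schedule: the order-k coefficient is penalised by base_penalty * growth**k
    penalties = base_penalty * growth ** np.arange(degree + 1)
    # Closed-form minimiser of ||y - F c||^2 + sum_k penalties[k] * c_k^2
    gram = features.T @ features + np.diag(penalties)
    coef = np.linalg.solve(gram, features.T @ y)
    return coef, penalties


x = np.linspace(-1.0, 1.0, 30)
y = np.sin(3.0 * x)
coef, penalties = fit_order_penalised_poly(x, y)
# polyval evaluates coef[0] + coef[1] * x + coef[2] * x**2 + ...
fitted = np.polynomial.polynomial.polyval(x, coef)
rmse = float(np.sqrt(np.mean((fitted - y) ** 2)))
```

The model can represent wiggly degree-20 curves, but the penalty makes high-order terms expensive, so the fit on this smooth target stays close to a low-order approximation rather than interpolating erratically.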

Josh Gardner’s talk (“Toward the GPT-3 Moment for Tabular Data Models”) reviewed the progress across the first three GPT models and attributed their success to three main factors (large-scale data, reliable benchmarks, and scalability), before evaluating the state of these factors for tabular foundation models.

The talk noted that there is no equivalent of Common Crawl for tabular data (yet), and that much of the large-scale tabular data is synthetic (for example, TabPFN is trained entirely on synthetic data). Currently, most benchmarks focus on “single-table” prediction, and there is a need for more tabular benchmarks aimed at foundation modelling or few-shot/in-context learning.
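To give a feel for what “synthetic tabular data” can mean, here is a minimal sketch that samples one binary-classification table by pushing random Gaussian features through a randomly initialised network. It is loosely in the spirit of prior-based generators such as TabPFN’s, but it is not TabPFN’s actual generator; the function name, network shape, and median threshold are assumptions made for illustration.

```python
import numpy as np


def sample_synthetic_task(n_rows=256, n_features=8, hidden=16, seed=0):
    """Sample one synthetic binary-classification table from a random tanh MLP."""
    rng = np.random.default_rng(seed)
    # Features: independent standard normals (a simplifying assumption)
    X = rng.normal(size=(n_rows, n_features))
    # A randomly initialised one-hidden-layer network defines the labelling function
    W1 = rng.normal(scale=1.0 / np.sqrt(n_features), size=(n_features, hidden))
    w2 = rng.normal(scale=1.0 / np.sqrt(hidden), size=hidden)
    scores = np.tanh(X @ W1) @ w2
    # Threshold at the median so the two classes are balanced
    y = (scores > np.median(scores)).astype(int)
    return X, y


# Each seed yields a different task; a pre-training corpus would draw many of them
tasks = [sample_synthetic_task(seed=s) for s in range(3)]
X0, y0 = tasks[0]
```

Because every seed defines a new labelling function, a model pre-trained on many such tables has to infer the rule from the rows it is shown, which is the in-context setting the benchmarks above would need to measure.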

He also highlighted some misconceptions, coining the phrase “The Token Fallacy” for the common belief that “models that tokenise numbers cannot effectively represent them”. He also reminded researchers of the importance of building with exponentially improving compute in mind.
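As a small illustration of why tokenisation alone need not destroy numeric information, the sketch below encodes a float as a sign token, one token per significant digit, and an exponent token, then decodes it back. This hypothetical scheme is not taken from the talk or from any particular model; its precision is set by the assumed `sig_digits` parameter.

```python
def tokenize_number(value: float, sig_digits: int = 4) -> list[str]:
    """Encode a float as [sign, d1, d2, ..., exponent] tokens (hypothetical scheme)."""
    # Scientific notation, e.g. 3.14159 -> "3.142e+00"
    mantissa, exponent = f"{value:.{sig_digits - 1}e}".split("e")
    sign = "-" if mantissa.startswith("-") else "+"
    digits = mantissa.lstrip("-").replace(".", "")
    return [sign, *digits, f"E{int(exponent)}"]


def detokenize_number(tokens: list[str]) -> float:
    """Invert tokenize_number, up to the chosen number of significant digits."""
    sign = -1.0 if tokens[0] == "-" else 1.0
    digits = "".join(tokens[1:-1])
    exponent = int(tokens[-1][1:])
    mantissa = int(digits) / 10 ** (len(digits) - 1)
    return sign * mantissa * 10.0 ** exponent
```

For example, `tokenize_number(3.14159)` gives `["+", "3", "1", "4", "2", "E0"]`, and decoding recovers 3.142. Raising `sig_digits` trades longer sequences for finer resolution.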

At the end of the workshop, the organisers gave out three best paper awards:

- Best applications paper: Towards Generalizable Multimodal ECG Representation Learning with LLM-extracted Clinical Entities by Mingsheng Cai, Jiuming Jiang, Wenhao Huang, Che Liu, and Rossella Arcucci.

- Best tabular paper: ConTextTab: A Semantics-Aware Tabular In-Context Learner by Marco Spinaci, Marek Polewczyk, Maximilian Schambach, and Sam Thelin.

- Best time-series paper: CauKer: Classification Time Series Foundation Models Can Be Pretrained on Synthetic Data only by Shifeng Xie, Vasilii Feofanov, Marius Alonso, Ambroise Odonnat, Jianfeng Zhang, and Ievgen Redko.

AI for Math

This was the second year of the AI for Math workshop at ICML (summary of the previous ICML AI for Math workshop), alongside a similar series of workshops at NeurIPS (NeurIPS 2024 Math-AI workshop coverage).

One recurring theme throughout this workshop was the high-level choice of research direction: does the community want to build systems for fully autonomous mathematical research, or tools to support human reasoning and decision-making?

Some recent work discussed at the workshop included Goedel-Prover-V2, a new state-of-the-art open-weights model for proving theorems in Lean; APE-Bench I, a new proof engineering benchmark; and CSLib, a new open-source Lean 4 library of foundational results in computer science. There was also an update on the AI Mathematical Olympiad.
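For readers who have not seen Lean, here is a toy example of the kind of artefact a theorem prover is asked to produce: a formal statement followed by a proof that Lean’s kernel checks. The theorem names are hypothetical, both proofs use only Lean 4’s core library (no Mathlib), and the `omega` tactic assumes a recent Lean 4 toolchain where it ships in core.

```lean
-- Term-mode proof: reuse a lemma from Lean's core library
theorem toy_add_comm (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b

-- Tactic-mode proof: `omega` decides linear arithmetic over Nat and Int
theorem toy_lt_to_succ_le (a b : Nat) (h : a < b) : a + 1 ≤ b := by
  omega
```

Competition-level statements are far harder, but the output format is the same: if the file compiles, the proof is correct.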

There were two competition tracks in this workshop:

- Track 1, proof engineering (APE-Bench I), was won by Sparsh Tewadia, using Gemini 2.5.

- Track 2, reasoning from physics diagrams (SeePhys), was won by Ruitao Wu, Hao Liang, Bohan Zeng, Junbo Niu, Wentao Zhang, and Bin Dong, using a combination of Gemini 2.5 and OpenAI o3.

There were two best paper awards:

- Does Reinforcement Learning Really Incentivize Reasoning Capacity in LLMs Beyond the Base Model? by Yang Yue, Zhiqi Chen, Rui Lu, Andrew Zhao, Zhaokai Wang, Yang Yue, Shiji Song, and Gao Huang.

- Token Hidden Reward: Steering Exploration-Exploitation in GRPO Training by Wenlong Deng, Yi Ren, Danica J. Sutherland, Christos Thrampoulidis, and Xiaoxiao Li.

The ICML 2025 Test of Time Award (for an impactful paper from ICML 2015) went to Sergey Ioffe and Christian Szegedy for the paper that introduced the Batch Normalization (“batch norm”) procedure, developed while both authors were at Google.

Batch norm normalises the inputs to intermediate neural network layers, transforming them so that each feature has zero mean and unit variance, and then applies a learned per-feature scale and shift. At training time, the statistics are computed per minibatch; at inference time, normalisation uses population statistics estimated from the training set.

The ICML 2015 Batch Normalization paper
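The train/inference distinction above can be sketched in a few lines of NumPy. This is a minimal, hedged version rather than the paper’s exact procedure: it estimates the population statistics with an exponential moving average (momentum 0.1 and eps 1e-5, mirroring common framework defaults) instead of a separate pass over the training set, and it uses the biased batch variance throughout.

```python
import numpy as np


class BatchNorm1d:
    """Minimal batch norm over inputs of shape (batch, features)."""

    def __init__(self, num_features, momentum=0.1, eps=1e-5):
        self.gamma = np.ones(num_features)   # learned scale
        self.beta = np.zeros(num_features)   # learned shift
        self.running_mean = np.zeros(num_features)
        self.running_var = np.ones(num_features)
        self.momentum = momentum
        self.eps = eps

    def __call__(self, x, training=True):
        if training:
            # Per-feature statistics of the current minibatch
            mean = x.mean(axis=0)
            var = x.var(axis=0)
            # Moving averages stand in for the training-set population statistics
            self.running_mean = (1 - self.momentum) * self.running_mean + self.momentum * mean
            self.running_var = (1 - self.momentum) * self.running_var + self.momentum * var
        else:
            mean, var = self.running_mean, self.running_var
        x_hat = (x - mean) / np.sqrt(var + self.eps)
        return self.gamma * x_hat + self.beta


rng = np.random.default_rng(0)
x = rng.normal(loc=3.0, scale=2.0, size=(128, 4))  # toy minibatch: 128 examples, 4 features
bn = BatchNorm1d(num_features=4)
train_out = bn(x, training=True)   # normalised with minibatch statistics
eval_out = bn(x, training=False)   # normalised with the (still poorly estimated) running statistics
```

After a single training step the running estimates are still far from the true statistics, so `eval_out` is not yet centred; in practice they converge over many minibatches.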

After receiving the award, Sergey Ioffe gave a talk, starting with an explanation of the initial motivation behind the paper: figuring out why ReLUs worked better than sigmoids as activation functions, and what it would take for sigmoids to work well.
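The difficulty with sigmoids is saturation: the derivative σ(x)(1 − σ(x)) peaks at 0.25 when x = 0 and vanishes for large |x|, so badly scaled pre-activations pass back almost no gradient. The sketch below compares the average sigmoid gradient for hypothetical off-centre, high-variance pre-activations before and after standardising them; the distribution parameters are arbitrary choices for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def mean_sigmoid_grad(pre_activations):
    """Average of the sigmoid derivative s * (1 - s) over the given inputs."""
    s = sigmoid(pre_activations)
    return float(np.mean(s * (1.0 - s)))


rng = np.random.default_rng(0)
# Hypothetical badly scaled pre-activations: shifted and with a large spread
raw = rng.normal(loc=4.0, scale=5.0, size=10_000)
# Standardised to zero mean and unit variance, as batch norm would do
normalized = (raw - raw.mean()) / raw.std()

grad_raw = mean_sigmoid_grad(raw)
grad_normalized = mean_sigmoid_grad(normalized)
```

Most of the raw inputs sit in the flat tails of the sigmoid, while the standardised ones stay near the steep middle, which is why keeping activations normalised helps sigmoid networks train.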

Impact

While the paper did end up enabling more widespread use of sigmoid activation functions¹, its impact was more profound: batch norm enabled significantly faster and more efficient training, and it was followed by other normalisation procedures, including group norm, weight norm, and instance norm, as well as layer norm, which was a key component of the 2017 Transformer architecture.
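Most of these activation-normalisation variants differ mainly in which axes the mean and variance are computed over. The sketch below shows this for an image-style tensor of shape (N, C, H, W); it omits the learned scale and shift for brevity. Weight norm is left out because it reparameterises the weights rather than normalising activations, and the Transformer’s layer norm normalises over the last (feature) axis of each token rather than over (C, H, W).

```python
import numpy as np

EPS = 1e-5


def _normalize(x, axes):
    """Standardise x using mean/variance computed over the given axes."""
    mean = x.mean(axis=axes, keepdims=True)
    var = x.var(axis=axes, keepdims=True)
    return (x - mean) / np.sqrt(var + EPS)


def batch_norm(x):
    # Per channel, with statistics shared across the batch: axes (N, H, W)
    return _normalize(x, axes=(0, 2, 3))


def layer_norm(x):
    # Per example, over all of its features: axes (C, H, W)
    return _normalize(x, axes=(1, 2, 3))


def instance_norm(x):
    # Per example and per channel: axes (H, W)
    return _normalize(x, axes=(2, 3))


def group_norm(x, num_groups):
    # Per example, over groups of channels: split C into num_groups groups
    n, c, h, w = x.shape
    assert c % num_groups == 0, "channels must divide evenly into groups"
    grouped = x.reshape(n, num_groups, c // num_groups, h, w)
    return _normalize(grouped, axes=(2, 3, 4)).reshape(n, c, h, w)


rng = np.random.default_rng(0)
x = 3.0 * rng.normal(size=(4, 6, 5, 5)) + 1.0  # toy batch: N=4, C=6, H=W=5
```

Group norm interpolates between the other per-example methods: with one group it matches layer norm, and with one group per channel it matches instance norm. Only batch norm mixes statistics across examples.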

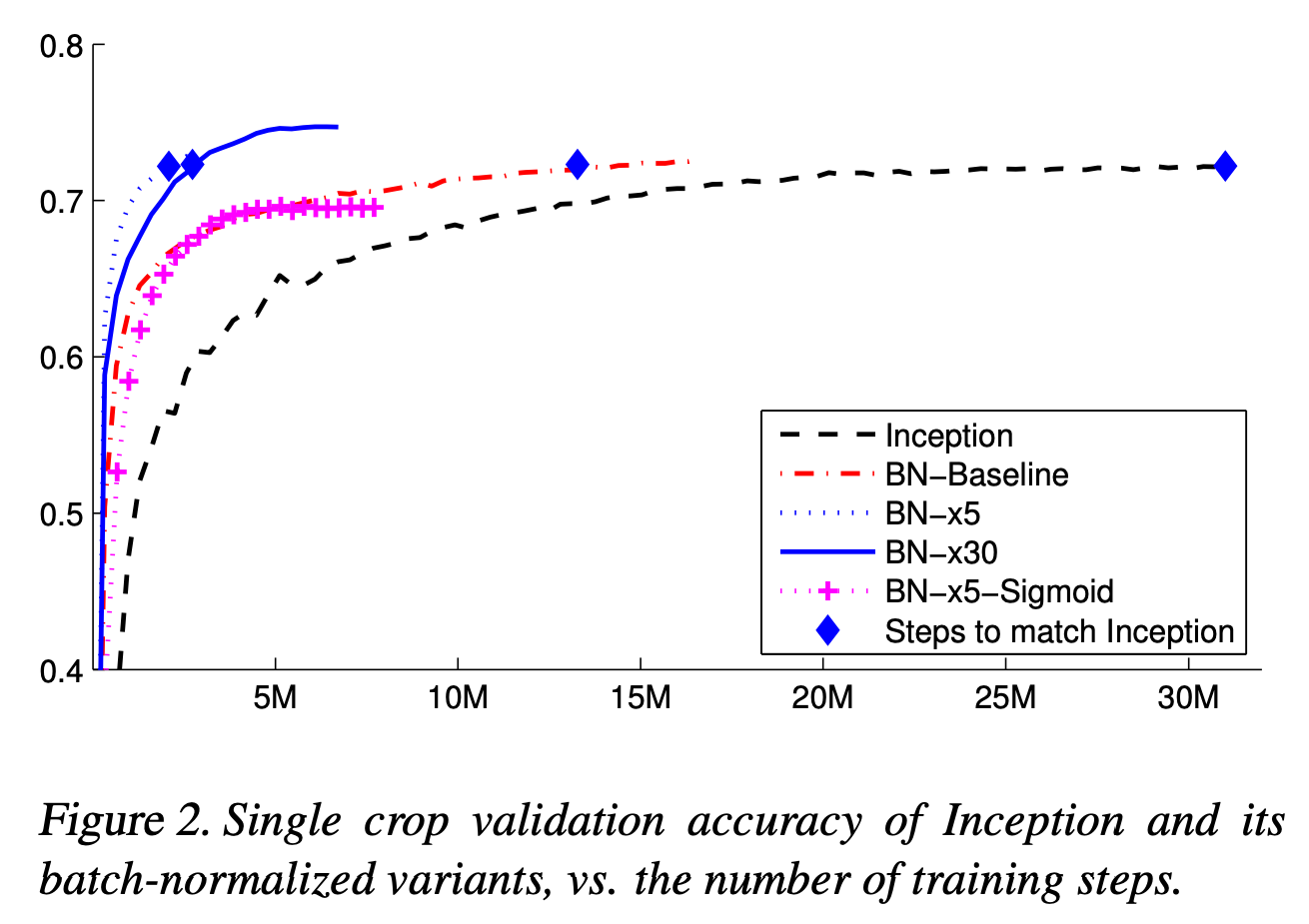

Figure 2 in the batch norm paper shows a 14-fold training speedup over the baseline Inception model, which took around 30 days to train on a cluster of CPUs. The speedup came from adding batch norm and increasing the learning rate; the higher learning rate was itself made possible by batch norm.

The 14x training efficiency increase from batch norm + higher learning rate

Interpretation

This talk provided an interesting reminder of the empirical nature of deep learning research, as Ioffe described how the current understanding of why batch norm works is very different to the initial explanation of its mechanics.

He mentioned that while the 2015 paper attributed the success of batch norm to a reduction in “internal covariate shift”, later research showed that the improvements are due to a smoothing of the optimisation landscape. He pointed to the 2018 NeurIPS paper How Does Batch Normalization Help Optimization? by Santurkar et al. for an explanation of this phenomenon.

Scale Invariance

Ioffe also presented some analysis with implications for scale-invariant models more generally.

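Batch norm makes a layer’s output invariant to the scale of its incoming weights. This scale invariance results in implicit learning-rate scheduling: as training progresses and the weights grow larger, the gradient becomes smaller relative to the weights, so each update changes them proportionally less.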
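Both properties behind this effect can be checked numerically. The sketch below builds a toy loss on a batch-normalised pre-activation (the data, targets, and squared-error loss are hypothetical, and eps is omitted so the loss is exactly scale-invariant), then uses central finite differences to confirm that doubling the weights halves the gradient and that the gradient is orthogonal to the weights.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(64, 5))  # hypothetical inputs
t = rng.normal(size=64)       # hypothetical regression targets


def loss(w):
    """Squared error on a batch-normalised pre-activation; invariant to the scale of w."""
    z = X @ w
    z_hat = (z - z.mean()) / z.std()  # no eps, so loss(c * w) == loss(w) for c > 0
    return float(np.mean((z_hat - t) ** 2))


def numerical_grad(f, w, h=1e-6):
    """Central finite-difference gradient of f at w."""
    g = np.zeros_like(w)
    for i in range(w.size):
        e = np.zeros_like(w)
        e[i] = h
        g[i] = (f(w + e) - f(w - e)) / (2 * h)
    return g


w = rng.normal(size=5)
g1 = numerical_grad(loss, w)
g2 = numerical_grad(loss, 2.0 * w)  # gradient at the same direction, twice the norm
```

Since `g2` is half of `g1` while the weights are twice as large, a fixed learning rate moves larger weights by a smaller relative amount.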

This holds for scale-invariant models in general, and it produces an interaction between normalisation, weight decay, and learning-rate scheduling. For more discussion of this phenomenon, Ioffe mentioned Layer Normalization (Ba et al., 2016) and L2 Regularization versus Batch and Weight Normalization (van Laarhoven, 2017).
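The interaction can be made explicit with a short derivation, sketched here under the assumption of plain SGD with an L2 weight-decay term. The symbols η (learning rate) and λ (weight-decay coefficient) are notation introduced here, not taken from the talk.

```latex
% Premise: L is scale-invariant, i.e. L(c w) = L(w) for all c > 0.
\begin{aligned}
\nabla L(c\,w) &= \tfrac{1}{c}\,\nabla L(w), \qquad w^\top \nabla L(w) = 0,\\
w_{t+1} &= (1 - \eta\lambda)\,w_t - \eta\,\nabla L(w_t),\\
\lVert w_{t+1}\rVert^2 &= (1 - \eta\lambda)^2\,\lVert w_t\rVert^2 + \eta^2\,\lVert \nabla L(w_t)\rVert^2,\\
\eta_{\text{eff}} &\approx \frac{\eta}{\lVert w_t\rVert^2}.
\end{aligned}
```

The first line follows from differentiating L(cw) = L(w) with respect to w and with respect to c at c = 1. Orthogonality removes the cross term in the norm update. Because the gradient scales as 1/‖w‖, the change in the weight direction w/‖w‖ scales as η/‖w‖². Without weight decay (λ = 0), the norm can only grow, steadily lowering the effective learning rate; weight decay counteracts that growth and keeps the effective learning rate higher.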

Interactions

Ioffe ended the talk with a discussion of how, in batch norm, calculating statistics at the minibatch level creates additional interactions between training examples.
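The interaction is easy to demonstrate: in training mode, the normalised output for a given example depends on which other examples share its minibatch. In this minimal sketch (learned scale and shift omitted, toy values chosen by hand), the same example lands in two different batches and comes out differently.

```python
import numpy as np


def batch_normalize(x, eps=1e-5):
    """Training-mode batch norm without learned scale/shift."""
    return (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)


example = np.array([[1.0, 2.0]])
# The same example placed in two different minibatches
batch_a = np.vstack([example, [[0.0, 0.0], [2.0, 4.0]]])
batch_b = np.vstack([example, [[10.0, -5.0], [3.0, 3.0]]])

out_a = batch_normalize(batch_a)[0]  # the example happens to equal batch_a's mean
out_b = batch_normalize(batch_b)[0]
```

In `batch_a` the example sits exactly at the batch mean, so it maps to zero; in `batch_b` the companions shift the statistics and the same input maps elsewhere.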

He pointed out that, while this can have undesirable side effects in some cases, some more recent works make use of interactions between examples to improve training (notably SimCLR by Chen et al., 2020 and LongLLaMA by Tworkowski et al., 2023), and speculated that this could be a key mechanism for improving future models.
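SimCLR’s contrastive loss (often called NT-Xent) is a concrete case of a useful interaction: each example’s loss depends on every other example in the batch, which serves as a negative. The sketch below is a simplified NumPy version, assuming two augmented views per example have already been embedded as `z1` and `z2`; the temperature of 0.5 and the toy embeddings are illustrative choices.

```python
import numpy as np


def nt_xent(z1, z2, temperature=0.5):
    """Contrastive loss where row i of z1 and row i of z2 are positive pairs.

    Every other embedding in the combined batch acts as a negative, so the
    loss for one example depends on all the others.
    """
    z = np.concatenate([z1, z2], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # cosine similarity via unit vectors
    sim = z @ z.T / temperature
    np.fill_diagonal(sim, -np.inf)                    # exclude self-similarity
    n = z1.shape[0]
    # Index of each row's positive partner in the combined batch
    positives = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    # Numerically stable row-wise log-softmax
    row_max = sim.max(axis=1, keepdims=True)
    log_probs = sim - row_max - np.log(np.exp(sim - row_max).sum(axis=1, keepdims=True))
    return float(-log_probs[np.arange(2 * n), positives].mean())


rng = np.random.default_rng(0)
z1 = rng.normal(size=(8, 16))                          # toy embeddings of view 1
aligned = nt_xent(z1, z1.copy())                       # views agree perfectly
unrelated = nt_xent(z1, rng.normal(size=(8, 16)))      # views are unrelated
```

Larger batches supply more negatives, which is one reason contrastive methods like SimCLR benefit from large batch sizes.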

Footnotes

1. As per the paper: “this enables the sigmoid nonlinearities to more easily stay in their non-saturated regimes, which is crucial for training deep sigmoid networks but has traditionally been hard to accomplish”

There were six Outstanding Paper Award winners this year in the main track, and two in the position paper track.

Outstanding Papers (Main Track)

- CollabLLM: From Passive Responders to Active Collaborators (Shirley Wu, Michel Galley, Baolin Peng, Hao Cheng, Gavin Li, Yao Dou, Weixin Cai, James Zou, Jure Leskovec, Jianfeng Gao)

- Train for the Worst, Plan for the Best: Understanding Token Ordering in Masked Diffusions (Jaeyeon Kim, Kulin Shah, Vasilis Kontonis, Sham Kakade, Sitan Chen)

- Roll the dice & look before you leap: Going beyond the creative limits of next-token prediction (Vaishnavh Nagarajan, Chen Wu, Charles Ding, Aditi Raghunathan)

- Conformal Prediction as Bayesian Quadrature (Jake Snell, Thomas Griffiths)

- Score Matching with Missing Data (Josh Givens, Song Liu, Henry Reeve)

- The Value of Prediction in Identifying the Worst-Off (Unai Fischer Abaigar, Christoph Kern, Juan Perdomo)

Outstanding Papers (Position Paper Track)

- Position: The AI Conference Peer Review Crisis Demands Author Feedback and Reviewer Rewards (Jaeho Kim, Yunseok Lee, Seulki Lee)

- Position: AI Safety should prioritize the Future of Work (Sanchaita Hazra, Bodhisattwa Prasad Majumder, Tuhin Chakrabarty)

Test of Time Award

This year’s Test of Time Award goes to Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift by Sergey Ioffe and Christian Szegedy. This paper from 2015 introduced Batch Normalization, a technique that normalises the inputs to neural network layers across the batch dimension, enabling the use of higher learning rates and faster training. It is a forerunner of Layer Normalization, which performs a similar operation along the feature dimension and was used in the original Transformer paper. The authors of this paper will give a talk at 8:30am Vancouver time on Wednesday.

There were two honorable mentions:

- Trust Region Policy Optimization by John Schulman, Sergey Levine, Pieter Abbeel, Michael Jordan, and Philipp Moritz.

- Variational Inference with Normalizing Flows by Danilo Rezende and Shakir Mohamed.

The Trust Region Policy Optimization paper introduced the TRPO algorithm for reinforcement learning, a precursor to the now-ubiquitous Proximal Policy Optimization (PPO) algorithm.
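The relationship between the two algorithms shows up in their objectives. Both maximise an importance-weighted surrogate (probability ratio between new and old policies times the advantage); TRPO constrains the KL divergence between the old and new policies, while PPO drops the hard constraint and clips the ratio instead. The sketch below shows only these pieces, not full implementations (TRPO also solves its constrained step with conjugate gradient and a line search). The KL limit of 0.01 and clip range of 0.2 are illustrative values.

```python
import numpy as np


def surrogate_objective(ratio, advantage):
    """Importance-weighted policy-gradient surrogate shared by TRPO and PPO."""
    return ratio * advantage


def categorical_kl(p_old, p_new):
    """KL(p_old || p_new) for discrete action distributions."""
    return float(np.sum(p_old * np.log(p_old / p_new)))


def within_trust_region(p_old, p_new, max_kl=0.01):
    """TRPO-style acceptance test: keep the update only if the KL stays small."""
    return categorical_kl(p_old, p_new) <= max_kl


def ppo_clipped_objective(ratio, advantage, clip_eps=0.2):
    """PPO's pessimistic surrogate: the ratio is clipped to [1 - eps, 1 + eps]."""
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantage
    return np.minimum(surrogate_objective(ratio, advantage), clipped)
```

For a ratio of 1.5 and an advantage of 1.0, the clipped objective is 1.2 rather than 1.5, so PPO gains nothing from moving the policy further; TRPO would instead reject any step whose KL exceeds the limit.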

ICML 2025 is now underway, starting with expo talks and tutorials. The main conference will run from Tuesday to Thursday, followed by two days of workshops.

This year our coverage will be remote-only, and less extensive than for previous conferences.

For the in-person attendees, the venue is the Vancouver Convention Center. If you’re feeling a sense of déjà vu, it might be because this venue has hosted many past ML conferences, including NeurIPS 2024 just seven months ago.

First floor of the Vancouver Convention Center’s West building (December 2024)

The convention center has two buildings: the East building, which is shared with the Pan Pacific Hotel, and the West building, which will host the exhibit hall and invited talks in its basement. An underground walkway links the two buildings. There is an interactive map on the convention center website.