A position paper in the reinforcement learning (RL) oral session argued for automatic environment shaping.

Environment shaping is standard practice in RL, a “necessary evil”: without it, RL doesn’t work at all on some problems. It can take many forms, including reward shaping (changing the reward signal to encourage certain behaviours) and action space shaping (for example, letting a robot controller specify desired target positions rather than individual torque values for each joint’s motor).
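To make the two forms of shaping concrete, here is a minimal sketch in Python. It is not taken from the paper: the `PointMass` environment, the `ShapedPointMass` wrapper, the distance-based potential and the controller gains `kp` and `kd` are all hypothetical choices made for illustration. The reward shaping uses the well-known potential-based form (adding `gamma * phi(next) - phi(current)` to the reward), and the action space shaping uses a simple PD controller so that the agent picks a target position instead of a raw force.

```python
from dataclasses import dataclass


@dataclass
class PointMass:
    """Toy 1-D environment (hypothetical): push a unit mass to a goal position."""
    goal: float = 1.0
    dt: float = 0.05
    pos: float = 0.0
    vel: float = 0.0

    def step(self, force):
        """Apply a raw force; return (new position, sparse reward)."""
        self.vel += force * self.dt
        self.pos += self.vel * self.dt
        # Sparse reward: only paid out when the mass is very close to the goal.
        reward = 1.0 if abs(self.pos - self.goal) < 0.05 else 0.0
        return self.pos, reward


class ShapedPointMass:
    """Wraps PointMass with reward shaping and action space shaping."""

    def __init__(self, env, gamma=0.99, kp=20.0, kd=6.0):
        self.env, self.gamma, self.kp, self.kd = env, gamma, kp, kd

    def _potential(self, pos):
        # Assumed potential: negative distance to the goal.
        return -abs(pos - self.env.goal)

    def step(self, target_pos):
        # Action space shaping: the agent chooses a target position and a
        # PD controller turns it into the low-level force.
        force = self.kp * (target_pos - self.env.pos) - self.kd * self.env.vel
        before = self._potential(self.env.pos)
        pos, reward = self.env.step(force)
        # Reward shaping: add the potential-based term to the sparse reward.
        shaped = reward + self.gamma * self._potential(pos) - before
        return pos, shaped


env = ShapedPointMass(PointMass())
pos, first_shaped = env.step(1.0)  # ask for the goal position directly
for _ in range(199):
    pos, shaped = env.step(1.0)
```

In this toy setup the very first step already yields a positive shaped reward for moving towards the goal, whereas the unshaped environment would return zero until the mass is within 0.05 of it. Choosing the potential and the controller gains by hand is exactly the kind of heuristic, domain-specific work the paper proposes to automate.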

Environment shaping is usually done with heuristics and domain knowledge, either by the creators of an environment or by researchers applying RL techniques to it. The authors take the position that automated environment shaping is an important research direction in RL, and propose code-generation LLMs as a promising approach.

The project page, including a link to the full paper, can be found here.

One of the busiest posters in the morning session was for a paper which asks: what if we do next-token prediction with a simple linear model instead of a transformer?

> Our results demonstrate that the power of today’s LLMs can be attributed, to a great extent, to the auto-regressive next-token training scheme, and not necessarily to a particular choice of architecture.

Auto-Regressive Next-Token Predictors are Universal Learners by Eran Malach
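As a rough illustration of what "next-token prediction with a simple linear model" can look like, here is a toy sketch in Python. It is not the construction or the experiments from the paper: the function names, the two-token context, the learning rate and the repeating `abc` training sequence are all assumptions chosen to keep the example tiny. The model is plain softmax regression on one-hot encodings of the previous tokens, trained with the usual cross-entropy loss and then run auto-regressively.

```python
import math


def _logits(W, ctx):
    """Linear model: sum one weight row per (context slot, token) pair."""
    vocab_size = len(W[0])
    return [sum(W[slot][tok][out] for slot, tok in enumerate(ctx))
            for out in range(vocab_size)]


def _softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_linear_next_token(tokens, vocab, context=2, epochs=200, lr=0.5):
    """Fit the linear next-token predictor by SGD on cross-entropy."""
    idx = {t: i for i, t in enumerate(vocab)}
    V = len(vocab)
    # W[slot][input token][output token], initialised to zero.
    W = [[[0.0] * V for _ in range(V)] for _ in range(context)]
    ids = [idx[t] for t in tokens]
    for _ in range(epochs):
        for pos in range(context, len(ids)):
            ctx, target = ids[pos - context:pos], ids[pos]
            probs = _softmax(_logits(W, ctx))
            for slot, tok in enumerate(ctx):
                for out in range(V):
                    # Cross-entropy gradient w.r.t. each logit: p - one_hot.
                    grad = probs[out] - (1.0 if out == target else 0.0)
                    W[slot][tok][out] -= lr * grad
    return W, idx


def generate(W, idx, prompt, n_new):
    """Greedy auto-regressive decoding; prompt must cover the context length."""
    vocab = sorted(idx, key=idx.get)
    ids = [idx[t] for t in prompt]
    context = len(W)
    for _ in range(n_new):
        logits = _logits(W, ids[-context:])
        ids.append(max(range(len(logits)), key=logits.__getitem__))
    return [vocab[i] for i in ids]


W, idx = train_linear_next_token(list("abcabcabcabc"), vocab="abc")
continuation = "".join(generate(W, idx, list("ab"), n_new=4))
```

The only learned parameters are the entries of `W`, and each generated token is fed back in as context for the next prediction, which is the auto-regressive loop the quote refers to.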

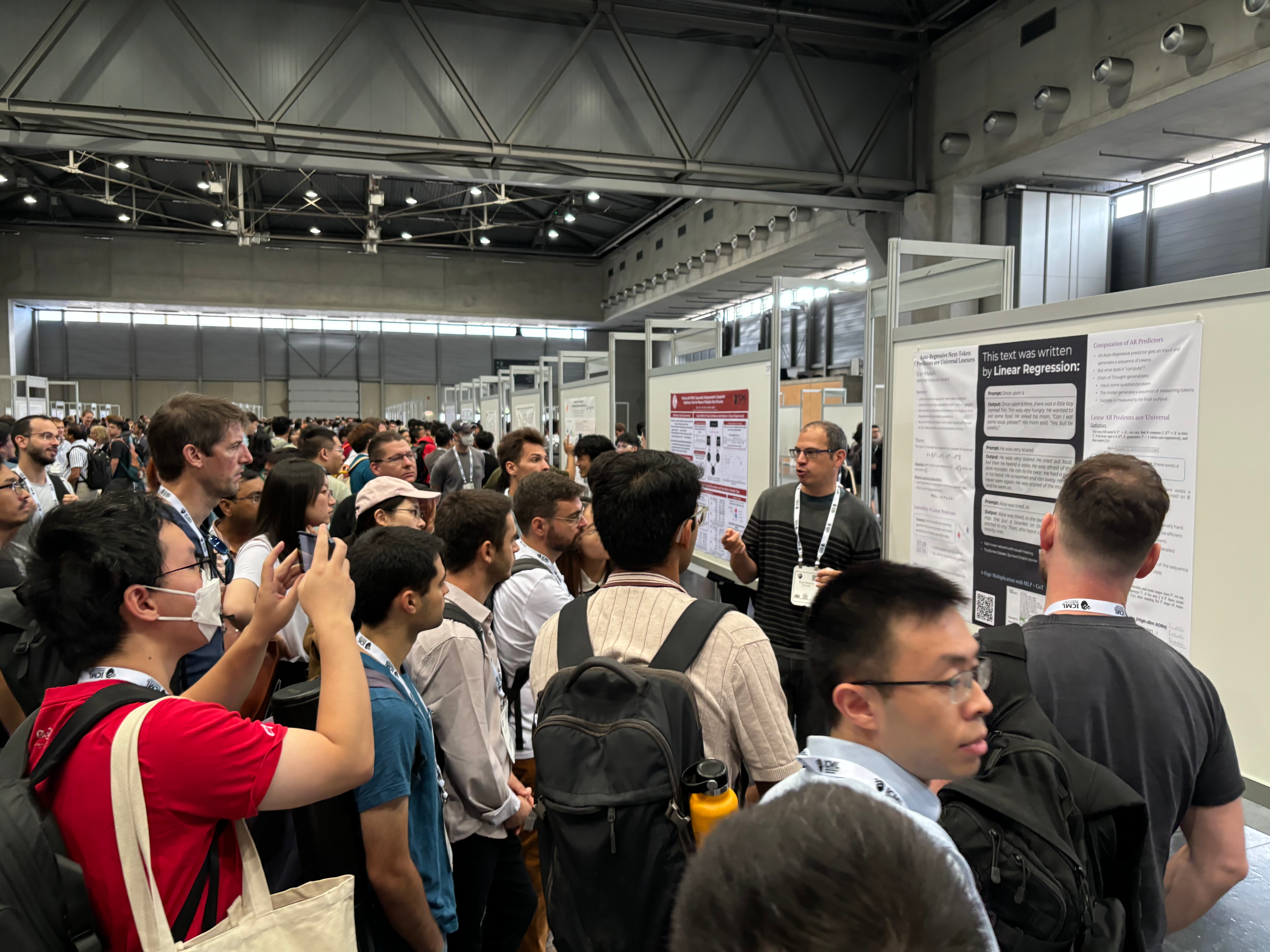

Eran Malach presenting to a crowd

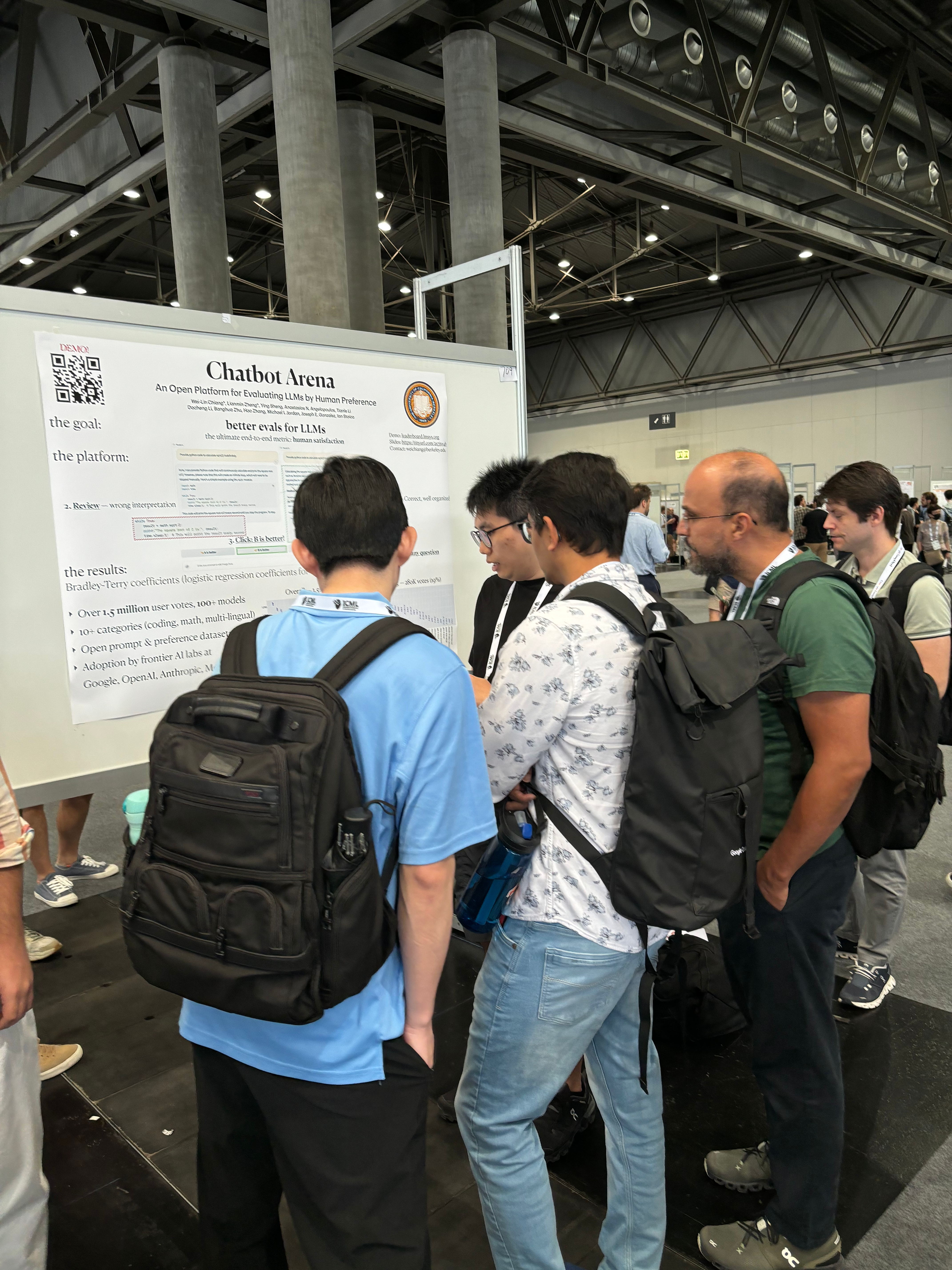

More photos of the poster session below.