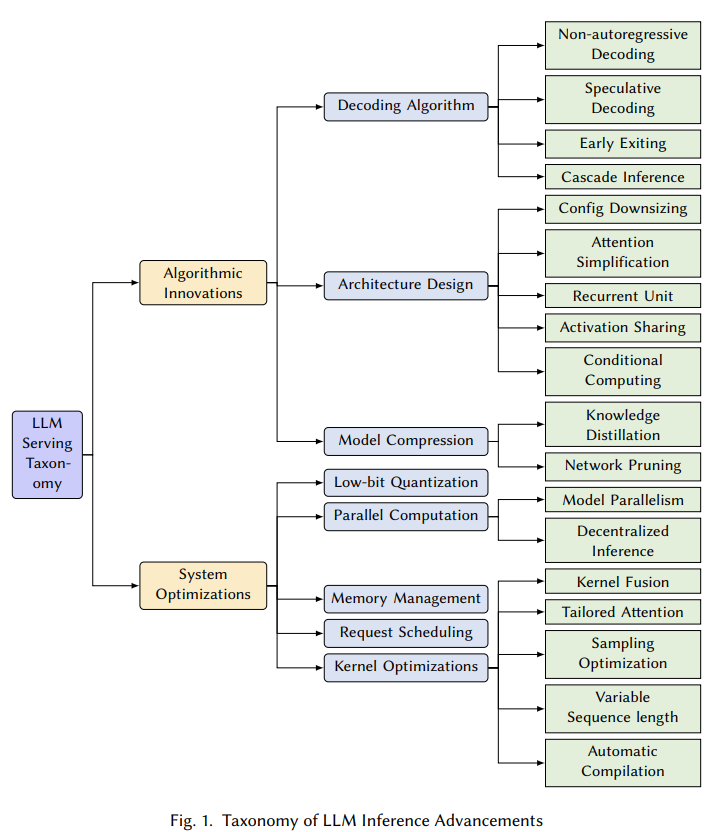

First tutorial of the day (link), looking at efficient LLM serving from the perspective of both algorithmic innovations and system optimisations. Xupeng Miao gave a run-through of various techniques covered in his lab’s December 2023 survey paper, as well as some new ones.

Source: Towards Efficient Generative Large Language Model Serving: A Survey from Algorithms to Systems

The tutorial also featured some of the lab's own research, including SpecInfer (tree-based speculative inference) and SpotServe (efficient and reliable LLM serving on pre-emptible cloud instances).