The Test of Time Award is given to an influential paper from 10 years ago.

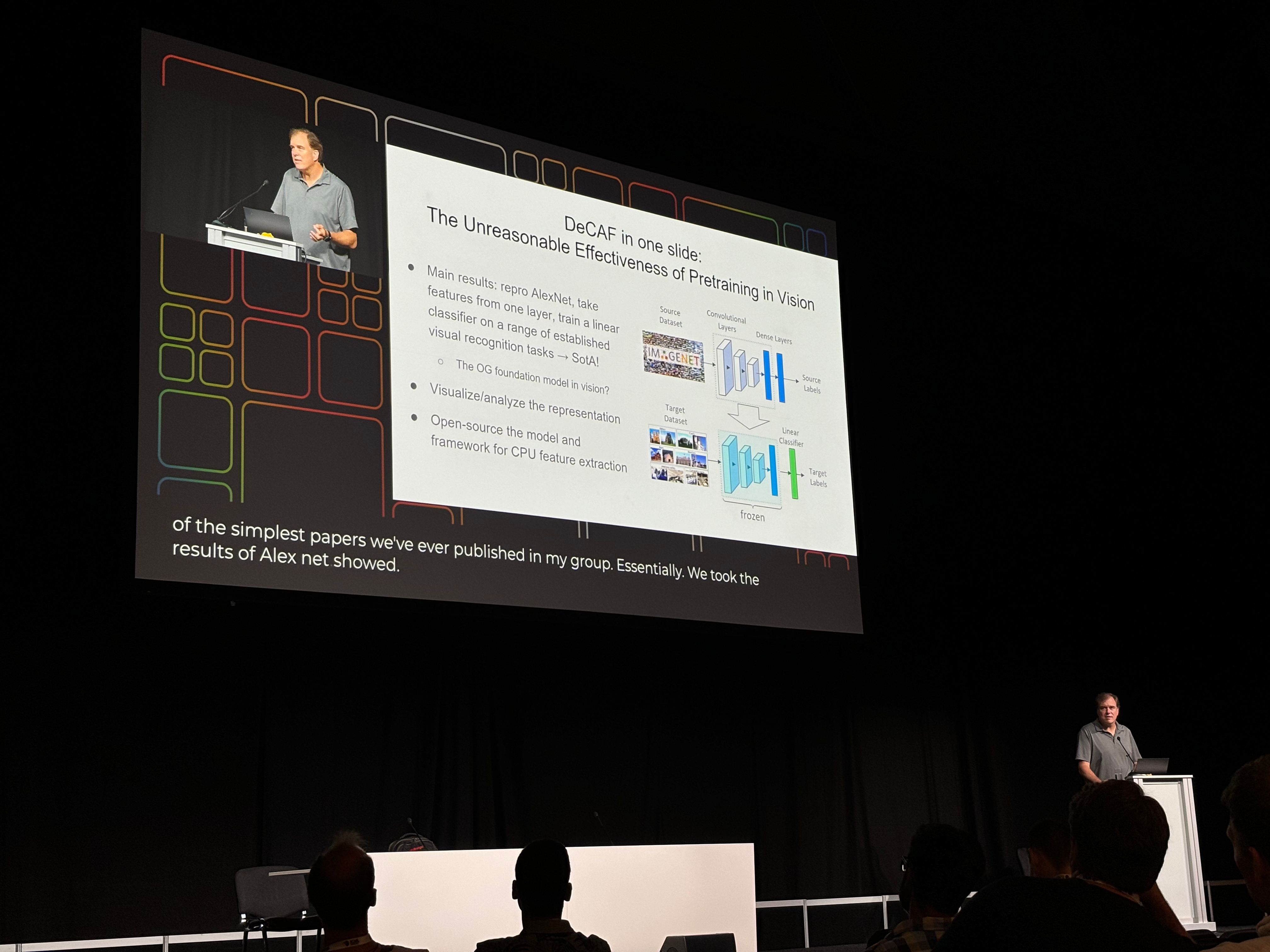

This year’s Test of Time Award went to the ICML 2014 paper “DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition”, which was presented by Trevor Darrell, one of the authors.

Contextualising the paper, Darrell explained that in 2014 deep learning had limited adoption in the computer vision field. While AlexNet had already shown success in object recognition, many in the field were still skeptical about using convolutional neural nets (CNNs) for other computer vision tasks.

DeCAF provided more evidence of the promise of CNNs across many tasks, showing huge improvements on the state of the art in domain adaptation benchmarks. It used an approach that we’re now very familiar with: freezing the weights of a pre-trained net (AlexNet) and attaching a smaller set of trainable parameters (in this case, a linear layer) that can be trained for specific tasks. Darrell also described it as “the OG foundation model in vision”.
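The frozen-backbone-plus-linear-head idea can be sketched in a few lines. This is a toy illustration, not the DeCAF code: the "backbone" here is a hypothetical stand-in (fixed random weights with a ReLU) rather than a pre-trained AlexNet, the data is synthetic, and the head is a plain logistic regression. All function names are made up for this example.

```python
import copy
import math
import random


def make_frozen_backbone(in_dim, feat_dim, seed=0):
    """Stand-in for a pre-trained net: a fixed random weight matrix (assumption)."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(feat_dim)]


def extract_features(weights, x):
    """Forward pass through the frozen backbone: linear projection + ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_linear_head(features, labels, epochs=50, lr=0.1):
    """Train only the head (w, b) with SGD; the backbone is never touched."""
    w, b = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            grad = p - y  # gradient of the log loss w.r.t. the logit
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b


def predict(w, b, feature):
    return 1 if sum(wi * fi for wi, fi in zip(w, feature)) + b > 0 else 0


# Synthetic two-class data: two well-separated 2D clusters.
rng = random.Random(1)
inputs = [[rng.gauss(3.0, 0.5), rng.gauss(3.0, 0.5)] for _ in range(20)]
inputs += [[rng.gauss(-3.0, 0.5), rng.gauss(-3.0, 0.5)] for _ in range(20)]
labels = [1] * 20 + [0] * 20

backbone = make_frozen_backbone(in_dim=2, feat_dim=8)
snapshot = copy.deepcopy(backbone)  # kept only to show the backbone stays frozen

# Features are computed once, since the backbone's weights never change.
features = [extract_features(backbone, x) for x in inputs]
head_w, head_b = train_linear_head(features, labels)

accuracy = sum(predict(head_w, head_b, f) == y for f, y in zip(features, labels)) / len(labels)
print(f"training accuracy: {accuracy:.2f}")
```

Because the backbone is frozen, its features can be computed once and cached, so adapting to a new task only requires training the small head.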

This is analogous to techniques such as LoRA, which are used for fine-tuning LLMs today, and was very different from the prevailing paradigm of end-to-end learning at the time DeCAF was published.

Darrell credited the open-source code accompanying the paper with much of the traction it got in the community (alongside Caffe, a deep learning library whose successor, Caffe2, was later merged into PyTorch). Releasing code was another thing that was not the norm at the time.

Another interesting comment was that some at the time felt that deep learning was “only for Google” and required thousands of CPUs. Results like AlexNet and DeCAF quickly showed that just a few GPUs could be enough, allowing for increased uptake of deep learning.

Darrell closed by noting the similarity to today’s foundation model pre-training, which requires (tens of) thousands of GPUs, and asking whether this might become more accessible over time too.